
Audio Feature Extractions

Author: Moto Hira

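torchaudio implements feature extractions commonly used in the audio domain. They are available in torchaudio.functional and torchaudio.transforms.

functional implements features as standalone functions. They are stateless.

transforms implements features as objects, built on the functional implementations and torch.nn.Module. They can be serialized using TorchScript.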

import torch
import torchaudio
import torchaudio.functional as F
import torchaudio.transforms as T

print(torch.__version__)
print(torchaudio.__version__)

import matplotlib.pyplot as plt

2.10.0.dev20251013+cu126

2.8.0a0+1d65bbe

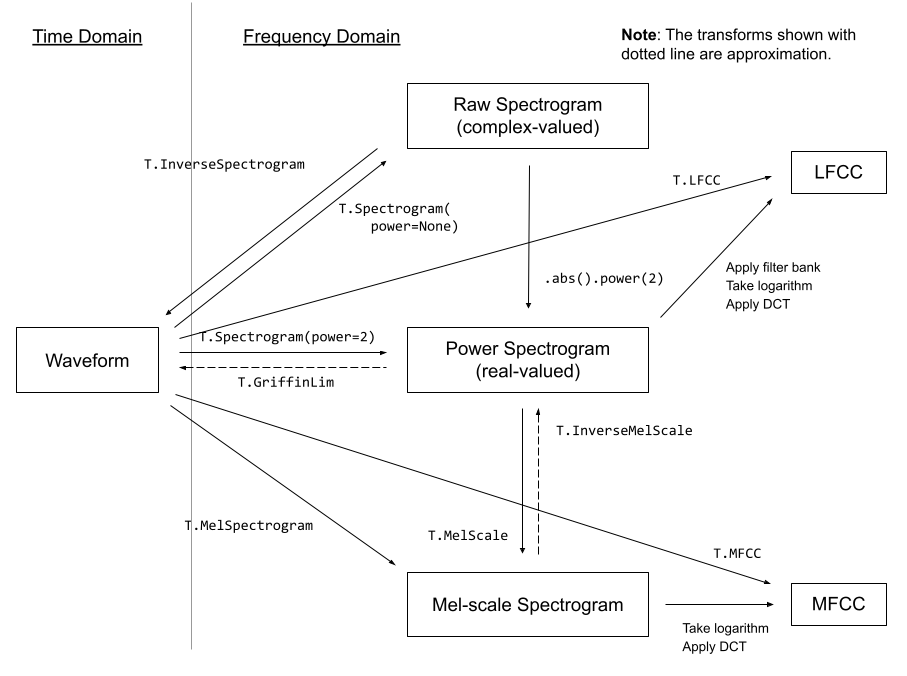

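Overview of audio features

The following diagram shows the relationship between common audio features and the torchaudio APIs that generate them.

For the complete list of available features, please refer to the documentation.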

Preparation

from IPython.display import Audio
from matplotlib.patches import Rectangle
from torchaudio.utils import _download_asset

torch.random.manual_seed(0)

SAMPLE_SPEECH = _download_asset("tutorial-assets/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042.wav")


def plot_waveform(waveform, sr, title="Waveform", ax=None):
    waveform = waveform.numpy()

    _, num_frames = waveform.shape
    time_axis = torch.arange(0, num_frames) / sr

    if ax is None:
        # Only the first channel is plotted, so a single axis is enough
        _, ax = plt.subplots(1, 1)
    ax.plot(time_axis, waveform[0], linewidth=1)
    ax.grid(True)
    ax.set_xlim([0, time_axis[-1]])
    ax.set_title(title)


def plot_spectrogram(specgram, title=None, ylabel="freq_bin", ax=None):
    if ax is None:
        _, ax = plt.subplots(1, 1)
    if title is not None:
        ax.set_title(title)
    ax.set_ylabel(ylabel)
    # Display the power spectrogram in decibels, clipped to an 80 dB range
    power_to_db = T.AmplitudeToDB("power", 80.0)
    ax.imshow(power_to_db(specgram), origin="lower", aspect="auto", interpolation="nearest")


def plot_fbank(fbank, title=None):
    fig, axs = plt.subplots(1, 1)
    axs.set_title(title or "Filter bank")
    axs.imshow(fbank, aspect="auto")
    axs.set_ylabel("frequency bin")
    axs.set_xlabel("mel bin")

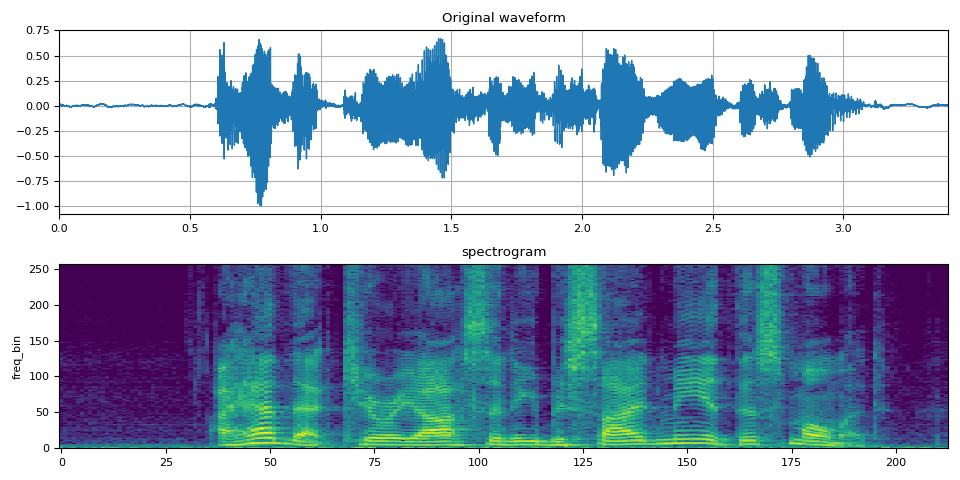

Spectrogram

To get the frequency make-up of an audio signal as it varies with time, you can use torchaudio.transforms.Spectrogram().

# Load audio
SPEECH_WAVEFORM, SAMPLE_RATE = torchaudio.load(SAMPLE_SPEECH)

# Define transform
spectrogram = T.Spectrogram(n_fft=512)

# Perform transform
spec = spectrogram(SPEECH_WAVEFORM)

fig, axs = plt.subplots(2, 1)
plot_waveform(SPEECH_WAVEFORM, SAMPLE_RATE, title="Original waveform", ax=axs[0])
plot_spectrogram(spec[0], title="spectrogram", ax=axs[1])
fig.tight_layout()

Audio(SPEECH_WAVEFORM.numpy(), rate=SAMPLE_RATE)

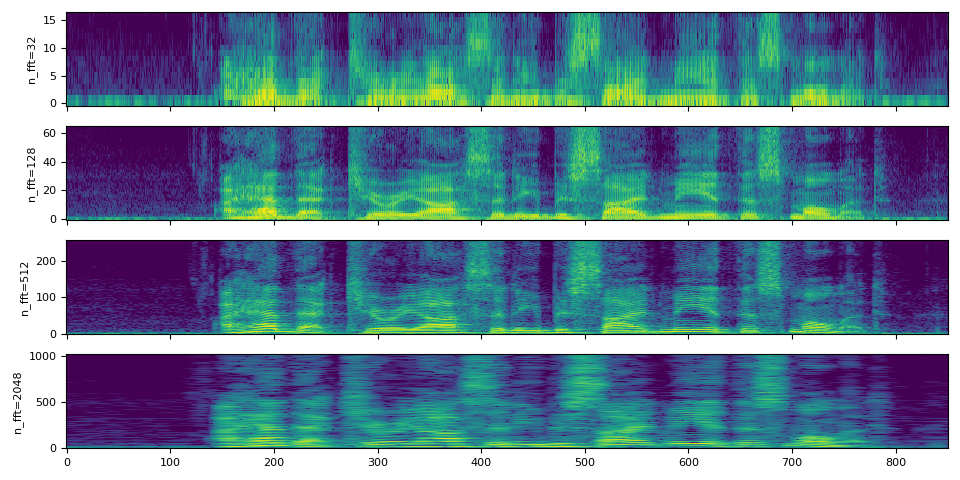
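To make what T.Spectrogram computes more concrete, here is a minimal pure-Python sketch of a power spectrogram: frames centered by reflect padding, a periodic Hann window, one DFT per frame, and squared magnitudes. It assumes these mirror the transform's defaults (Hann window, center=True, pad_mode="reflect", power=2.0); the helper names are hypothetical, and the code favors readability over speed.

```python
import cmath
import math


def hann_window(n):
    # Periodic Hann window (assumed to match torch.hann_window's default form)
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def reflect_pad(x, pad):
    # Mirror `pad` samples at each end without repeating the edge sample
    left = [x[i] for i in range(pad, 0, -1)]
    right = [x[-2 - i] for i in range(pad)]
    return left + list(x) + right


def power_spectrogram(x, n_fft, hop_length):
    """Return a (n_fft // 2 + 1) x n_frames nested list of power values."""
    window = hann_window(n_fft)
    padded = reflect_pad(x, n_fft // 2)  # center frame t on sample t * hop_length
    n_frames = 1 + (len(padded) - n_fft) // hop_length
    n_bins = n_fft // 2 + 1  # non-negative frequencies only (real input)
    spec = [[0.0] * n_frames for _ in range(n_bins)]
    for t in range(n_frames):
        start = t * hop_length
        frame = [padded[start + n] * window[n] for n in range(n_fft)]
        for k in range(n_bins):
            # DFT coefficient X_k of the windowed frame
            X_k = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / n_fft) for n in range(n_fft))
            spec[k][t] = abs(X_k) ** 2  # power=2.0
    return spec


# A cosine completing exactly 8 cycles per 64-sample frame lands in bin 8
signal = [math.cos(2 * math.pi * 8 * n / 64) for n in range(1024)]
spec = power_spectrogram(signal, n_fft=64, hop_length=16)
print(len(spec), len(spec[0]))  # 33 65
```

The result is laid out frequency-first, like the (channel, freq, time) tensor returned by T.Spectrogram, which is why the tutorial passes spec[0] to the plotting helper.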

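The effect of the n_fft parameter

At the core of spectrogram computation is the (short-time) Fourier transform, and the n_fft parameter corresponds to \(N\) in the following definition of the discrete Fourier transform.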

$$ X_k = \sum_{n=0}^{N-1} x_n e^{-\frac{2\pi i}{N} nk} $$

(For details on the Fourier transform, see Wikipedia.)
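A direct, if slow, transcription of the formula above in plain Python. It also shows why a spectrogram keeps only \(N/2 + 1\) bins: for a real-valued input, \(X_{N-k}\) is the complex conjugate of \(X_k\), so the upper half carries no new information (hence 257 rows for n_fft=512). The input values are arbitrary.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) DFT: X_k = sum_n x_n * exp(-2*pi*i*n*k / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * n * k / N) for n in range(N)) for k in range(N)]


x = [0.0, 1.0, 0.5, -0.25, 2.0, -1.0, 0.75, 0.3]  # arbitrary real input, N = 8
X = dft(x)

# For real input, X[N - k] is the complex conjugate of X[k]
N = len(x)
for k in range(1, N):
    assert abs(X[N - k] - X[k].conjugate()) < 1e-9

# So only bins k = 0 .. N // 2 are unique
print(N // 2 + 1)  # 5
```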

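The value of n_fft determines the resolution of the frequency axis. However, with a higher n_fft the energy is distributed among more bins, so when visualized the spectrogram may look blurrier even though its resolution is higher.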
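The frequency resolution can be worked out by hand: bins are spaced sample_rate / n_fft Hz apart and always span 0 Hz up to the Nyquist frequency sample_rate / 2. The sketch below assumes a 16 kHz sample rate purely for illustration; substitute the actual SAMPLE_RATE of your audio.

```python
SAMPLE_RATE_HZ = 16_000  # assumption: a 16 kHz recording, for illustration only


def frequency_resolution(n_fft, sample_rate):
    """Return (number of one-sided frequency bins, spacing between bins in Hz)."""
    return n_fft // 2 + 1, sample_rate / n_fft


for n_fft in [32, 128, 512, 2048]:
    n_bins, spacing = frequency_resolution(n_fft, SAMPLE_RATE_HZ)
    # The bins always cover 0 .. sample_rate / 2; only their spacing changes
    print(f"n_fft={n_fft:5d}: {n_bins:4d} bins, {spacing:8.4f} Hz apart")
```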

The following illustrates this:

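Note

hop_length determines the resolution of the time axis. By default (i.e. hop_length=None and win_length=None), T.Spectrogram sets win_length to n_fft and hop_length to win_length // 2, that is, n_fft // 2. Here we use the same hop_length for every n_fft so that the spectrograms have the same number of elements along the time axis.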
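Why a fixed hop_length gives the same number of frames: with centered frames (center=True, the Spectrogram default), n_fft // 2 samples are padded on each side, so for an even n_fft the frame count reduces to 1 + num_samples // hop_length, independent of n_fft. The 16,000-sample signal length below is hypothetical.

```python
def num_frames(num_samples, n_fft, hop_length):
    """Frame count for centered frames: n_fft // 2 samples of padding on each side."""
    padded = num_samples + 2 * (n_fft // 2)
    return 1 + (padded - n_fft) // hop_length


num_samples = 16_000  # hypothetical: one second of 16 kHz audio
hop_length = 64
for n_fft in [32, 128, 512, 2048]:
    # For even n_fft the padding cancels n_fft, leaving 1 + num_samples // hop_length
    print(n_fft, num_frames(num_samples, n_fft, hop_length))
```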

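n_ffts = [32, 128, 512, 2048]
hop_length = 64

specs = []
for n_fft in n_ffts:
    spectrogram = T.Spectrogram(n_fft=n_fft, hop_length=hop_length)
    spec = spectrogram(SPEECH_WAVEFORM)
    specs.append(spec)

# Stack the spectrograms vertically to compare them
fig, axs = plt.subplots(len(specs), 1, sharex=True)
for i, (spec, n_fft) in enumerate(zip(specs, n_ffts)):
    plot_spectrogram(spec[0], ylabel=f"n_fft={n_fft}", ax=axs[i])
fig.tight_layout()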

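When comparing signals, it is desirable to use the same sample rate. If you must use different sample rates, take care when interpreting n_fft: it determines the resolution of the frequency axis for a given sample rate. In other words, what each bin on the frequency axis represents depends on the sample rate.

As seen above, for the same input signal, changing the value of n_fft does not change the frequency range covered.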

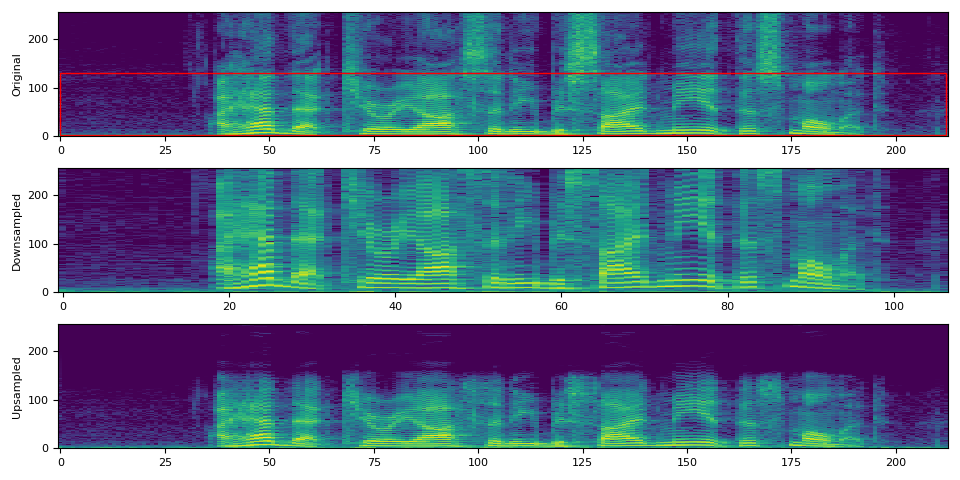

Let's downsample the audio and apply a spectrogram with the same n_fft value.

# Downsample to half of the original sample rate
speech2 = torchaudio.functional.resample(SPEECH_WAVEFORM, SAMPLE_RATE, SAMPLE_RATE // 2)
# Upsample to the original sample rate
speech3 = torchaudio.functional.resample(speech2, SAMPLE_RATE // 2, SAMPLE_RATE)

# Apply the same spectrogram
spectrogram = T.Spectrogram(n_fft=512)

spec0 = spectrogram(SPEECH_WAVEFORM)
spec2 = spectrogram(speech2)
spec3 = spectrogram(speech3)

# Visualize it
fig, axs = plt.subplots(3, 1)
plot_spectrogram(spec0[0], ylabel="Original", ax=axs[0])
axs[0].add_patch(Rectangle((0, 3), 212, 128, edgecolor="r", facecolor="none"))
plot_spectrogram(spec2[0], ylabel="Downsampled", ax=axs[1])
plot_spectrogram(spec3[0], ylabel="Upsampled", ax=axs[2])
fig.tight_layout()

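In the visualization above, the second plot ("Downsampled") may give the impression that the spectrogram is stretched. This is because its frequency bins mean something different from those of the original. Although both have the same number of bins, the downsampled signal's bins only reach its own Nyquist frequency, a quarter of the original sample rate, so they cover half the frequency range of the original. This becomes clearer if we resample the downsampled signal back to the original sample rate.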
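The bin-to-frequency mapping can be checked numerically. Assuming the original recording is 16 kHz (an assumption, not stated above), the top bin of a 512-point spectrogram is 8 kHz for the original but 4 kHz after downsampling, and that 4 kHz Nyquist frequency falls on row 128 of the original spectrogram. This appears to roughly match the height of the red rectangle drawn on the original spectrogram.

```python
def bin_to_hz(k, n_fft, sample_rate):
    """Center frequency, in Hz, of one-sided DFT bin k."""
    return k * sample_rate / n_fft


def hz_to_bin(freq_hz, n_fft, sample_rate):
    """Index of the bin whose center is closest to freq_hz."""
    return round(freq_hz * n_fft / sample_rate)


n_fft = 512
original_sr = 16_000  # assumption: the original recording is 16 kHz
downsampled_sr = original_sr // 2

# The same bin index means a different frequency at each sample rate
print(bin_to_hz(n_fft // 2, n_fft, original_sr))     # 8000.0
print(bin_to_hz(n_fft // 2, n_fft, downsampled_sr))  # 4000.0

# Row of the original spectrogram where the downsampled signal's Nyquist frequency lands
print(hz_to_bin(downsampled_sr / 2, n_fft, original_sr))  # 128
```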

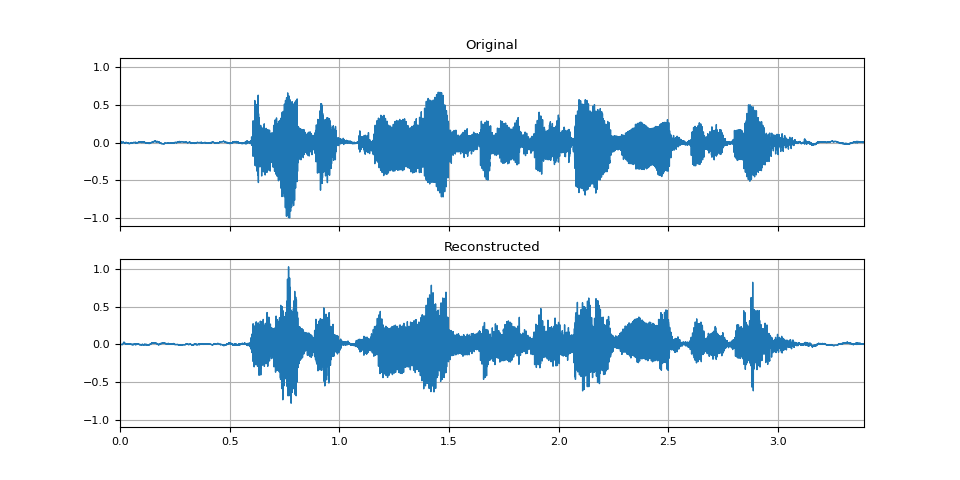

GriffinLim

To recover a waveform from a spectrogram, you can use torchaudio.transforms.GriffinLim.

The same set of parameters used for the spectrogram must be used.
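GriffinLim is needed because a magnitude or power spectrogram discards phase, and different waveforms can share identical magnitudes. The small pure-Python check below illustrates this for a single DFT frame: a circular shift changes the signal but leaves every |X_k| unchanged. Griffin-Lim iteratively estimates a phase consistent with the given magnitudes, which is why it has to assume the same STFT framing as the forward transform. The input values are arbitrary.

```python
import cmath
import math


def dft_magnitude(x):
    # |X_k| for every k of a naive DFT
    N = len(x)
    return [abs(sum(x[n] * cmath.exp(-2j * math.pi * n * k / N) for n in range(N))) for k in range(N)]


x = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, 0.0, 0.0]
# A circular shift only rotates the phase of each X_k
shifted = x[-3:] + x[:-3]

assert x != shifted  # the waveforms differ ...
mag_x, mag_shifted = dft_magnitude(x), dft_magnitude(shifted)
# ... but their magnitude spectra agree, so magnitudes alone cannot tell them apart
assert all(abs(a - b) < 1e-9 for a, b in zip(mag_x, mag_shifted))
```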

# Define transforms
n_fft = 1024
spectrogram = T.Spectrogram(n_fft=n_fft)
griffin_lim = T.GriffinLim(n_fft=n_fft)

# Apply the transforms
spec = spectrogram(SPEECH_WAVEFORM)
reconstructed_waveform = griffin_lim(spec)

_, axes = plt.subplots(2, 1, sharex=True, sharey=True)
plot_waveform(SPEECH_WAVEFORM, SAMPLE_RATE, title="Original", ax=axes[0])
plot_waveform(reconstructed_waveform, SAMPLE_RATE, title="Reconstructed", ax=axes[1])
Audio(reconstructed_waveform.numpy(), rate=SAMPLE_RATE)

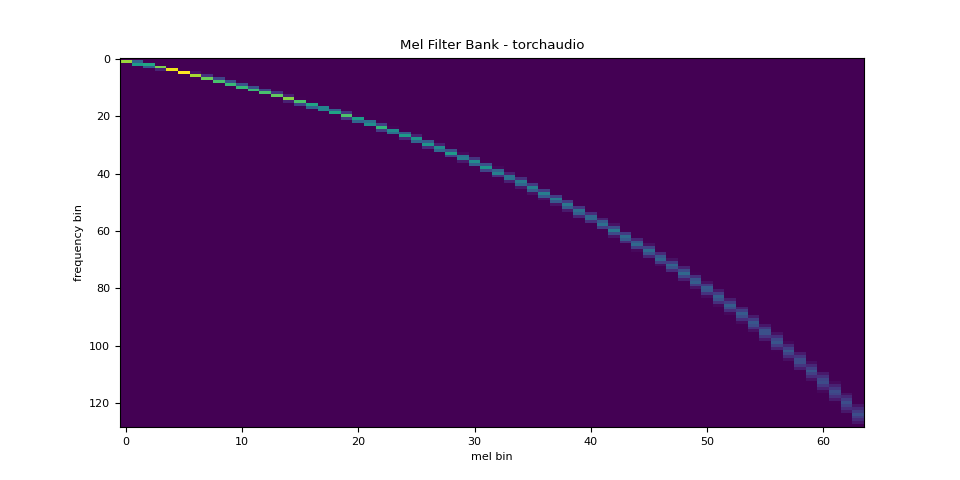

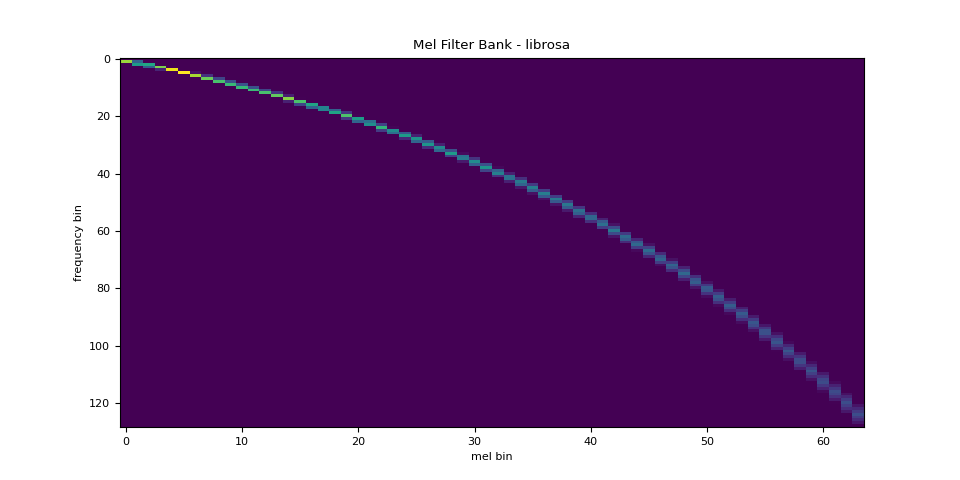

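Mel Filter Bank

torchaudio.functional.melscale_fbanks() generates the filter bank for converting frequency bins to mel-scale bins.

Since this function does not require input audio or features, there is no equivalent transform in torchaudio.transforms.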
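A rough pure-Python sketch of how such a filter bank can be built, assuming the HTK mel formula mel = 2595 * log10(1 + f / 700), which to our understanding matches the default mel_scale="htk" of melscale_fbanks: place n_mels + 2 points evenly on the mel scale, convert them back to Hz, and draw a triangle over each consecutive triple. It omits the norm="slaney" area normalization used above, and the function names are hypothetical.

```python
import math


def hz_to_mel(freq):
    # HTK mel formula (assumption: mirrors mel_scale="htk")
    return 2595.0 * math.log10(1.0 + freq / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def triangular_mel_fbanks(n_freqs, n_mels, f_min, f_max, sample_rate):
    """Return an n_freqs x n_mels nested list of triangular weights (no normalization)."""
    # Center frequency of each linear STFT bin, from 0 Hz to Nyquist
    bin_freqs = [i * (sample_rate / 2) / (n_freqs - 1) for i in range(n_freqs)]
    # n_mels + 2 points equally spaced on the mel scale: left edges, centers, right edges
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    edges = [mel_to_hz(m_min + (m_max - m_min) * i / (n_mels + 1)) for i in range(n_mels + 2)]
    fb = [[0.0] * n_mels for _ in range(n_freqs)]
    for j in range(n_mels):
        left, center, right = edges[j], edges[j + 1], edges[j + 2]
        for i, f in enumerate(bin_freqs):
            rising = (f - left) / (center - left)
            falling = (right - f) / (right - center)
            fb[i][j] = max(0.0, min(rising, falling))  # zero outside the triangle
    return fb


# Same shape parameters as the tutorial example: n_fft=256, n_mels=64, 6 kHz
fb = triangular_mel_fbanks(n_freqs=256 // 2 + 1, n_mels=64, f_min=0.0, f_max=3000.0, sample_rate=6000)
print(len(fb), len(fb[0]))  # 129 64
```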

n_fft = 256
n_mels = 64
sample_rate = 6000

mel_filters = F.melscale_fbanks(
    int(n_fft // 2 + 1),
    n_mels=n_mels,
    f_min=0.0,
    f_max=sample_rate / 2.0,
    sample_rate=sample_rate,
    norm="slaney",
)

plot_fbank(mel_filters, "Mel Filter Bank - torchaudio")

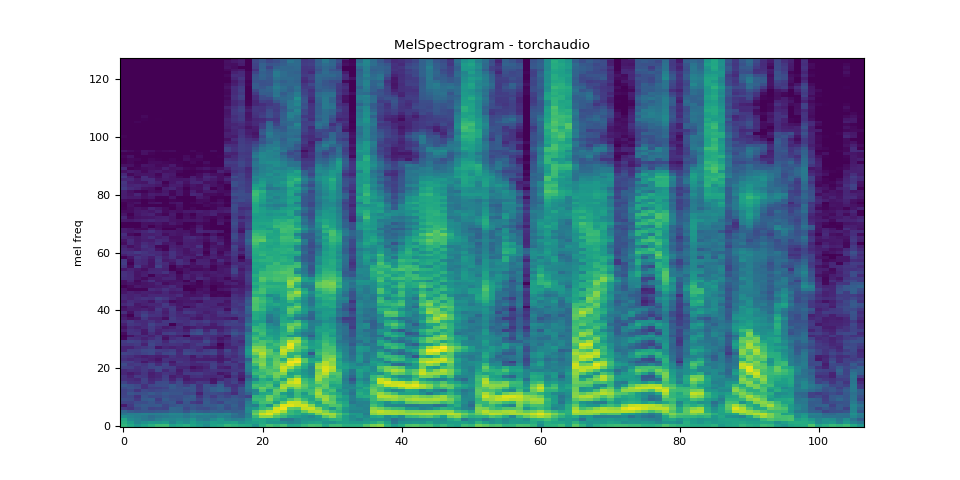

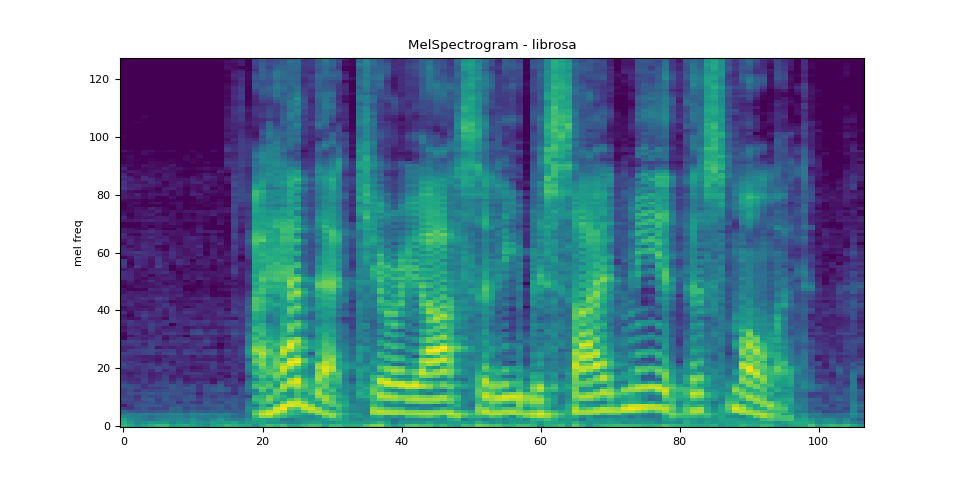

Mel Spectrogram

Generating a mel-scale spectrogram involves computing a spectrogram and then performing mel-scale conversion. In torchaudio, torchaudio.transforms.MelSpectrogram() provides this functionality.
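The mel-scale conversion step amounts to a matrix product: each mel band is a weighted sum of the linear-frequency power bins, with the weights taken from a filter bank like the one plotted earlier. The toy 5-bin, 2-filter matrix and the power values below are made up for illustration.

```python
def apply_filterbank(power_frame, fb):
    """Project one power-spectrum frame (length n_freqs) onto fb (n_freqs x n_filters)."""
    n_filters = len(fb[0])
    return [sum(power_frame[i] * fb[i][j] for i in range(len(fb))) for j in range(n_filters)]


# Hypothetical filter bank: 5 frequency bins pooled into 2 overlapping triangles
fb = [
    [0.0, 0.0],
    [1.0, 0.0],
    [0.5, 0.5],
    [0.0, 1.0],
    [0.0, 0.0],
]
power = [4.0, 2.0, 6.0, 1.0, 3.0]  # one frame of a power spectrogram

print(apply_filterbank(power, fb))  # [5.0, 4.0]
```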

n_fft = 1024
win_length = None
hop_length = 512
n_mels = 128

mel_spectrogram = T.MelSpectrogram(
    # Use the speech waveform's own sample rate, not the 6 kHz value from the filter-bank example
    sample_rate=SAMPLE_RATE,
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
    center=True,
    pad_mode="reflect",
    power=2.0,
    norm="slaney",
    n_mels=n_mels,
    mel_scale="htk",
)

melspec = mel_spectrogram(SPEECH_WAVEFORM)

plot_spectrogram(melspec[0], title="MelSpectrogram - torchaudio", ylabel="mel freq")

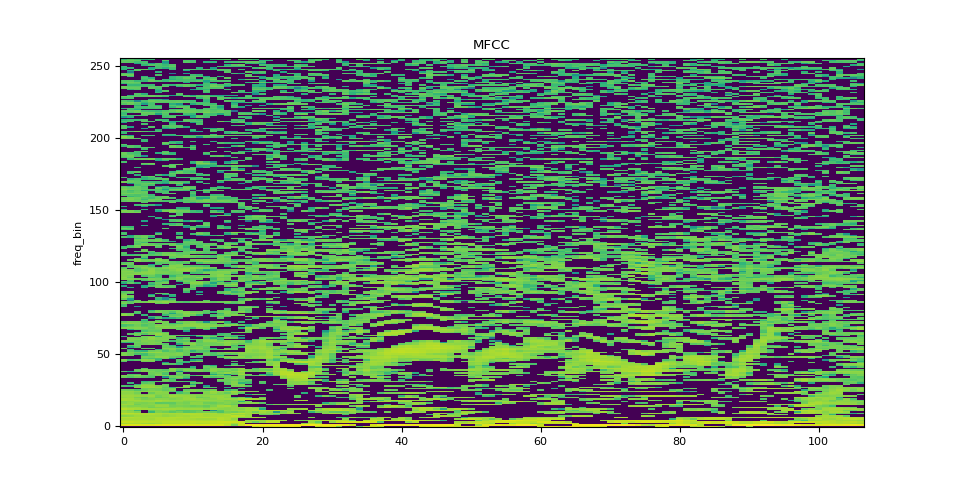

MFCC

n_fft = 2048
win_length = None
hop_length = 512
n_mels = 256
n_mfcc = 256

mfcc_transform = T.MFCC(
    sample_rate=SAMPLE_RATE,  # must match the sample rate of SPEECH_WAVEFORM
    n_mfcc=n_mfcc,
    melkwargs={
        "n_fft": n_fft,
        "n_mels": n_mels,
        "hop_length": hop_length,
        "mel_scale": "htk",
    },
)

mfcc = mfcc_transform(SPEECH_WAVEFORM)

plot_spectrogram(mfcc[0], title="MFCC")
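MFCC builds on the mel spectrogram: the mel energies are log-compressed (in decibels) and passed through a discrete cosine transform, keeping the first n_mfcc coefficients. The sketch below assumes an orthonormal DCT-II, which is our understanding of what T.MFCC uses by default; it omits the top_db clipping of the dB conversion, and the helper names are hypothetical.

```python
import math


def dct_ii_ortho(x):
    """Orthonormal DCT-II of a sequence."""
    N = len(x)
    out = []
    for k in range(N):
        scale = math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)
        out.append(scale * sum(x[n] * math.cos(math.pi / N * (n + 0.5) * k) for n in range(N)))
    return out


def mfcc_frame(mel_energies, n_mfcc, eps=1e-10):
    # Convert power to decibels (clamped to avoid log(0)), then decorrelate with a DCT
    log_mel = [10.0 * math.log10(max(e, eps)) for e in mel_energies]
    return dct_ii_ortho(log_mel)[:n_mfcc]


# A flat mel spectrum puts all of its energy in coefficient 0
print(mfcc_frame([10.0, 10.0, 10.0, 10.0], n_mfcc=2))
```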

LFCC

n_fft = 2048
win_length = None
hop_length = 512
n_lfcc = 256

lfcc_transform = T.LFCC(
    sample_rate=SAMPLE_RATE,  # must match the sample rate of SPEECH_WAVEFORM
    n_lfcc=n_lfcc,
    speckwargs={
        "n_fft": n_fft,
        "win_length": win_length,
        "hop_length": hop_length,
    },
)

lfcc = lfcc_transform(SPEECH_WAVEFORM)

plot_spectrogram(lfcc[0], title="LFCC")
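LFCC follows the same recipe as MFCC but, to our understanding, replaces the mel-spaced triangular filters with linearly spaced ones (speckwargs configures the underlying spectrogram). The sketch compares the two spacings by computing filter center frequencies; the 7-filter, 0 to 8 kHz setup and the function name are arbitrary choices for illustration.

```python
import math


def hz_to_mel(freq):
    # HTK mel formula
    return 2595.0 * math.log10(1.0 + freq / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def filter_centers(n_filters, f_min, f_max, scale):
    """Center frequencies (Hz) of n_filters triangular filters on a 'linear' or 'mel' scale."""
    if scale == "linear":
        step = (f_max - f_min) / (n_filters + 1)
        return [f_min + step * (i + 1) for i in range(n_filters)]
    if scale == "mel":
        m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
        step = (m_max - m_min) / (n_filters + 1)
        return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_filters)]
    raise ValueError(f"unknown scale: {scale}")


linear = filter_centers(7, 0.0, 8000.0, "linear")
mel = filter_centers(7, 0.0, 8000.0, "mel")
print(linear)  # [1000.0, 2000.0, 3000.0, 4000.0, 5000.0, 6000.0, 7000.0]
# Mel centers crowd together at low frequencies and spread out at high ones
print([round(f) for f in mel])
```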

Pitch

pitch = F.detect_pitch_frequency(SPEECH_WAVEFORM, SAMPLE_RATE)


def plot_pitch(waveform, sr, pitch):
    figure, axis = plt.subplots(1, 1)
    axis.set_title("Pitch Feature")
    axis.grid(True)

    end_time = waveform.shape[1] / sr
    time_axis = torch.linspace(0, end_time, waveform.shape[1])
    axis.plot(time_axis, waveform[0], linewidth=1, color="gray", alpha=0.3)

    axis2 = axis.twinx()
    time_axis = torch.linspace(0, end_time, pitch.shape[1])
    axis2.plot(time_axis, pitch[0], linewidth=2, label="Pitch", color="green")

    axis2.legend(loc=0)


plot_pitch(SPEECH_WAVEFORM, SAMPLE_RATE, pitch)
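The torchaudio documentation describes detect_pitch_frequency as based on the normalized cross-correlation function (NCCF) followed by median smoothing. Below is a simplified single-frame sketch of the NCCF idea, not torchaudio's implementation: it scores each candidate period by correlating the frame with a lagged copy of itself. The 85 to 3400 Hz search range, the 0.99 tolerance, and the synthetic 400 Hz tone are assumptions of this sketch, which also omits framing and median smoothing.

```python
import math


def estimate_pitch(frame, sample_rate, freq_low=85.0, freq_high=3400.0, tolerance=0.99):
    """Estimate the fundamental frequency of one frame via normalized cross-correlation."""
    lag_min = max(1, int(sample_rate / freq_high))
    lag_max = min(int(sample_rate / freq_low), len(frame) - 1)
    scores = {}
    for lag in range(lag_min, lag_max + 1):
        a, b = frame[:-lag], frame[lag:]
        num = sum(x * y for x, y in zip(a, b))
        den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
        scores[lag] = num / den if den > 0 else 0.0
    best = max(scores.values())
    # Multiples of the true period score almost as well; prefer the shortest lag
    # within `tolerance` of the best score to avoid octave errors (a sketch heuristic)
    period = min(lag for lag, s in scores.items() if s >= tolerance * best)
    return sample_rate / period


sr = 8000
tone = [math.sin(2 * math.pi * 400 * n / sr) for n in range(1000)]  # synthetic test tone
print(estimate_pitch(tone, sr))  # 400.0
```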

Total running time of the script: (0 minutes 5.693 seconds)