
Forced alignment for multilingual data

Authors: Xiaohui Zhang, Moto Hira.

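Warning

Starting with version 2.8, we are refactoring TorchAudio to transition it into a maintenance phase. As a result:

The APIs described in this tutorial were deprecated in 2.8 and will be removed in 2.9.

The decoding and encoding capabilities of PyTorch for both audio and video are being consolidated into TorchCodec.

Please see https://github.com/pytorch/audio/issues/3902 for more information.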

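This tutorial shows how to align transcripts to speech for non-English languages.

Aligning a non-English (normalized) transcript works the same way as aligning an English (normalized) transcript. The English case is covered in detail in the CTC forced alignment tutorial. In this tutorial, we use TorchAudio's high-level API, torchaudio.pipelines.Wav2Vec2FABundle. It packages the pre-trained model, the tokenizer and the aligner, so forced alignment takes less code.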

import torch
import torchaudio

print(torch.__version__)
print(torchaudio.__version__)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

2.10.0.dev20251013+cu126

2.8.0a0+1d65bbe

cuda

from typing import List

import IPython
import matplotlib.pyplot as plt

创建管道¶

首先,我们实例化模型和预/后处理管道。

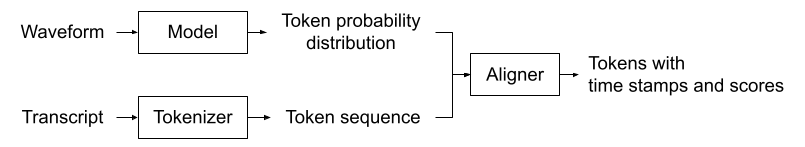

下图说明了对齐过程。

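The waveform is passed to an acoustic model, which produces a sequence of probability distributions over tokens. The transcript is passed to a tokenizer, which converts it into a sequence of tokens. The aligner takes the outputs of the acoustic model and the tokenizer and generates a timestamp for each token.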

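Note

This process expects the input transcript to be normalized already. Normalization includes romanization for non-English languages and is language-dependent. It is therefore outside the scope of this tutorial, but we briefly look at it below.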

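The acoustic model and the tokenizer must use the same set of tokens. To make it easy to create matching processors, Wav2Vec2FABundle pairs a pre-trained acoustic model with a tokenizer. torchaudio.pipelines.MMS_FA is one such instance.

A pre-trained acoustic model, a tokenizer that uses the same token set as the model, and an aligner are created with bundle.get_model(), bundle.get_tokenizer() and bundle.get_aligner(), where bundle refers to torchaudio.pipelines.MMS_FA. The model must be moved to device, because compute_alignments() below feeds it waveform.to(device).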

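Note

By default, the model instantiated by MMS_FA's get_model() method includes the feature dimension for the `<star>` token (the '*' entry in the dictionary below). You can disable it by passing with_star=False.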

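The acoustic model of MMS_FA was created and open-sourced as part of the research project "Scaling Speech Technology to 1,000+ Languages". It was trained on 23,000 hours of audio in more than 1,100 languages.

The tokenizer simply maps the normalized characters to integers. You can inspect the mapping as follows: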

print(bundle.get_dict())

{'-': 0, 'a': 1, 'i': 2, 'e': 3, 'n': 4, 'o': 5, 'u': 6, 't': 7, 's': 8, 'r': 9, 'm': 10, 'k': 11, 'l': 12, 'd': 13, 'g': 14, 'h': 15, 'y': 16, 'b': 17, 'p': 18, 'w': 19, 'c': 20, 'v': 21, 'j': 22, 'z': 23, 'f': 24, "'": 25, 'q': 26, 'x': 27, '*': 28}
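The bundle's own tokenizer is not reproduced here. As a hypothetical stand-in, `toy_tokenize` below rebuilds the same character-to-integer mapping from the dictionary printed above, producing one ID per character and one list per word. `unknown_chars` is also a hypothetical helper. It lists characters the dictionary cannot encode, such as the Polish "ł", which is why transcripts have to be normalized first. Whether the real tokenizer raises an error on such characters is not assumed here.

```python
from typing import Dict, List

# Character-to-ID mapping copied from the bundle.get_dict() output shown above.
DICTIONARY: Dict[str, int] = {
    '-': 0, 'a': 1, 'i': 2, 'e': 3, 'n': 4, 'o': 5, 'u': 6, 't': 7, 's': 8, 'r': 9,
    'm': 10, 'k': 11, 'l': 12, 'd': 13, 'g': 14, 'h': 15, 'y': 16, 'b': 17, 'p': 18,
    'w': 19, 'c': 20, 'v': 21, 'j': 22, 'z': 23, 'f': 24, "'": 25, 'q': 26, 'x': 27, '*': 28,
}


def unknown_chars(words: List[str], dictionary: Dict[str, int] = DICTIONARY) -> List[str]:
    """Return the characters that the dictionary cannot encode, sorted."""
    return sorted({c for word in words for c in word if c not in dictionary})


def toy_tokenize(words: List[str], dictionary: Dict[str, int] = DICTIONARY) -> List[List[int]]:
    """Map each word to a list of token IDs, one ID per character."""
    bad = unknown_chars(words, dictionary)
    if bad:
        raise ValueError(f"characters missing from the dictionary: {bad}")
    return [[dictionary[c] for c in word] for word in words]


print(toy_tokenize("aber seit".split()))  # [[1, 17, 3, 9], [8, 3, 2, 7]]
print(unknown_chars(["ujrzałem"]))        # ['ł']
```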

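Internally, the aligner uses torchaudio.functional.forced_align() and torchaudio.functional.merge_tokens() to infer the timestamps of the input tokens.

The underlying mechanism is described in detail in the CTC forced alignment API tutorial. Please refer to it.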
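To give a rough idea of what those two steps compute, here is a minimal pure-Python sketch of CTC forced alignment. It is a plain Viterbi search over the target tokens interleaved with blanks, followed by a merge of repeated frame labels into per-token spans. `ctc_forced_align`, `merge_path` and `Span` are hypothetical names. This is not TorchAudio's implementation. The sketch assumes blank ID 0 (the '-' entry above) and, like TokenSpan, uses end-exclusive frame indices with a span score equal to the mean per-frame probability.

```python
import math
from dataclasses import dataclass
from typing import List

NEG_INF = float("-inf")


def ctc_forced_align(log_probs: List[List[float]], targets: List[int], blank: int = 0) -> List[int]:
    """Viterbi CTC alignment: return one label per frame (blank or a target token)."""
    if not targets:
        raise ValueError("targets must not be empty")
    # Extended label sequence: blank, t1, blank, t2, ..., tN, blank
    ext = [blank]
    for tok in targets:
        ext += [tok, blank]
    T, S = len(log_probs), len(ext)
    score = [[NEG_INF] * S for _ in range(T)]
    back = [[0] * S for _ in range(T)]
    # A path may start on the leading blank or on the first token.
    score[0][0] = log_probs[0][ext[0]]
    score[0][1] = log_probs[0][ext[1]]
    for t in range(1, T):
        prev = score[t - 1]
        for s in range(S):
            preds = [s, s - 1] if s >= 1 else [s]
            # Skipping over a blank is allowed only between two different tokens.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                preds.append(s - 2)
            best = max(preds, key=prev.__getitem__)
            if prev[best] == NEG_INF:
                continue  # state is unreachable at this frame
            score[t][s] = prev[best] + log_probs[t][ext[s]]
            back[t][s] = best
    # A path must end on the last token or on the trailing blank.
    s = max((S - 1, S - 2), key=score[T - 1].__getitem__)
    if score[T - 1][s] == NEG_INF:
        raise ValueError("too few frames to align these targets")
    path = [blank] * T
    for t in range(T - 1, -1, -1):
        path[t] = ext[s]
        s = back[t][s]
    return path


@dataclass
class Span:
    token: int
    start: int    # first frame (inclusive)
    end: int      # one past the last frame (exclusive)
    score: float  # mean per-frame probability of the token


def merge_path(path: List[int], log_probs: List[List[float]], blank: int = 0) -> List[Span]:
    """Collapse each run of the same non-blank label into one span."""
    spans, t = [], 0
    while t < len(path):
        tok = path[t]
        if tok == blank:
            t += 1
            continue
        start = t
        while t < len(path) and path[t] == tok:
            t += 1
        probs = [math.exp(log_probs[i][tok]) for i in range(start, t)]
        spans.append(Span(tok, start, t, sum(probs) / len(probs)))
    return spans


# Toy emission: 5 frames x 3 classes (0 = blank, 1 and 2 = tokens), as log-probabilities.
probs = [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.8, 0.1], [0.8, 0.1, 0.1], [0.1, 0.1, 0.8]]
log_probs = [[math.log(p) for p in row] for row in probs]
path = ctc_forced_align(log_probs, targets=[1, 2])
print(path)  # [0, 1, 1, 0, 2]
for span in merge_path(path, log_probs):
    print(span)
```

The rule that forbids skipping a blank between two identical tokens is what lets doubled letters, such as the "ll" in "elle", produce two separate spans.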

We define a utility function that performs forced alignment with the model, tokenizer and aligner described above.

def compute_alignments(waveform: torch.Tensor, transcript: List[str]):
    with torch.inference_mode():
        emission, _ = model(waveform.to(device))
        token_spans = aligner(emission[0], tokenizer(transcript))
    return emission, token_spans

We also define utility functions for plotting the results and previewing the audio segments.

# Compute average score weighted by the span length
def _score(spans):
    return sum(s.score * len(s) for s in spans) / sum(len(s) for s in spans)
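Weighting by span length means a token that occupies many frames influences the word score more than a one-frame token. The toy comparison below uses a hypothetical `ToySpan` class that stands in for a token span. It assumes, as `_score` does, that `len(span)` is the number of frames (end minus start). The comparison shows how the weighted score differs from a plain mean.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToySpan:
    """Hypothetical stand-in for a token span covering frames [start, end)."""
    start: int
    end: int
    score: float

    def __len__(self) -> int:
        return self.end - self.start


def weighted_score(spans: List[ToySpan]) -> float:
    """Same formula as _score: sum(score * length) / sum(length)."""
    return sum(s.score * len(s) for s in spans) / sum(len(s) for s in spans)


def plain_mean(spans: List[ToySpan]) -> float:
    return sum(s.score for s in spans) / len(spans)


spans = [ToySpan(0, 3, 0.5), ToySpan(3, 4, 1.0)]  # 3 frames at 0.5, 1 frame at 1.0
print(plain_mean(spans))      # 0.75
print(weighted_score(spans))  # 0.625
```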

def plot_alignments(waveform, token_spans, emission, transcript, sample_rate=bundle.sample_rate):
    ratio = waveform.size(1) / emission.size(1) / sample_rate

    fig, axes = plt.subplots(2, 1)
    axes[0].imshow(emission[0].detach().cpu().T, aspect="auto")
    axes[0].set_title("Emission")
    axes[0].set_xticks([])

    axes[1].specgram(waveform[0], Fs=sample_rate)
    for t_spans, chars in zip(token_spans, transcript):
        t0, t1 = t_spans[0].start, t_spans[-1].end
        axes[0].axvspan(t0 - 0.5, t1 - 0.5, facecolor="None", hatch="/", edgecolor="white")
        axes[1].axvspan(ratio * t0, ratio * t1, facecolor="None", hatch="/", edgecolor="white")
        axes[1].annotate(f"{_score(t_spans):.2f}", (ratio * t0, sample_rate * 0.51), annotation_clip=False)

        for span, char in zip(t_spans, chars):
            t0 = span.start * ratio
            axes[1].annotate(char, (t0, sample_rate * 0.55), annotation_clip=False)

    axes[1].set_xlabel("time [second]")
    fig.tight_layout()

def preview_word(waveform, spans, num_frames, transcript, sample_rate=bundle.sample_rate):
    ratio = waveform.size(1) / num_frames
    x0 = int(ratio * spans[0].start)
    x1 = int(ratio * spans[-1].end)
    print(f"{transcript} ({_score(spans):.2f}): {x0 / sample_rate:.3f} - {x1 / sample_rate:.3f} sec")
    segment = waveform[:, x0:x1]
    return IPython.display.Audio(segment.numpy(), rate=sample_rate)
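The frame-to-time conversion in preview_word works as follows. The emission has fewer frames than the waveform has samples, so each emission frame corresponds to `waveform.size(1) / num_frames` samples. Multiplying a frame index by that ratio gives a sample index, and dividing by the sample rate gives seconds. The sketch below repeats this arithmetic without tensors. It represents token spans as plain `(start, end)` tuples and uses a hypothetical helper `word_times`. The clip length and frame count are made-up numbers chosen to give an exact ratio of 320 samples per frame.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # (start_frame, end_frame) of one token, end exclusive


def word_times(word_spans: List[List[Span]], num_samples: int, num_frames: int,
               sample_rate: int) -> List[Tuple[float, float]]:
    """Convert per-word token spans (emission frames) to (start, end) times in seconds."""
    ratio = num_samples / num_frames  # waveform samples per emission frame
    times = []
    for spans in word_spans:
        x0 = int(ratio * spans[0][0])   # first sample of the word's first token
        x1 = int(ratio * spans[-1][1])  # sample just past the word's last token
        times.append((x0 / sample_rate, x1 / sample_rate))
    return times


# Hypothetical numbers: a 3 s clip at 16 kHz (48,000 samples) with 150 emission frames.
print(word_times([[(10, 12), (14, 25)], [(30, 31)]], 48000, 150, 16000))
# [(0.2, 0.5), (0.6, 0.62)]
```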

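Normalizing the transcript

The transcripts passed to the pipeline must be normalized beforehand. The exact normalization process depends on the language.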

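Languages without explicit word boundaries (such as Chinese, Japanese and Korean) must be segmented first. Dedicated tools exist for this, but here we assume the transcript has already been segmented.

The first step of normalization is romanization. uroman is a tool that supports many languages.

The following BASH commands use `uroman` to romanize an input text file and write the output to another text file.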

$ echo "Cette page concerne des événements d'actualité qui se sont produits durant l'année 1882" > text.txt
$ uroman/bin/uroman.pl < text.txt > text_romanized.txt
$ cat text_romanized.txt
Cette page concerne des evenements d'actualite qui se sont produits durant l'annee 1882

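The next step is to remove everything other than letters and apostrophes, which covers digits and punctuation. The following snippet normalizes the romanized transcript.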

import re

def normalize_uroman(text):
    text = text.lower()
    text = text.replace("’", "'")
    text = re.sub("([^a-z' ])", " ", text)
    text = re.sub(' +', ' ', text)
    return text.strip()

with open("text_romanized.txt", "r") as f:
    for line in f:
        text_normalized = normalize_uroman(line)
        print(text_normalized)

Running the script on the example above produces the following result.

cette page concerne des evenements d'actualite qui se sont produits durant l'annee

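Note that in this example, "1882" is not romanized by `uroman`, so the normalization step removes it. Avoiding this would require romanizing numbers, which is known to be a non-trivial task.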
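The example above shows why romanization has to happen before normalization: `normalize_uroman` replaces every character outside a-z, so an unromanized "é" would split a word in two. For Latin-script text, stripping diacritics via Unicode NFKD decomposition approximates part of that step. This technique is a swapped-in substitute, not what uroman does, and `strip_diacritics` is a hypothetical helper. The sketch also shows the limitation: letters without a Unicode decomposition, such as the Polish "ł", pass through unchanged, and the normalization step would then turn them into spaces. A dedicated tool like uroman therefore remains the safer choice.

```python
import unicodedata


def strip_diacritics(text: str) -> str:
    """Drop combining marks after Unicode NFKD decomposition (e.g. 'ã' -> 'a')."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(c for c in decomposed if not unicodedata.combining(c))


for word in ["extensão", "événements", "okrągłą", "ranę"]:
    print(word, "->", strip_diacritics(word))
# extensão -> extensao
# événements -> evenements
# okrągłą -> okragła   (ł has no decomposition, so it survives)
# ranę -> rane
```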

Aligning transcripts to speech

Now we perform forced alignment for several languages.

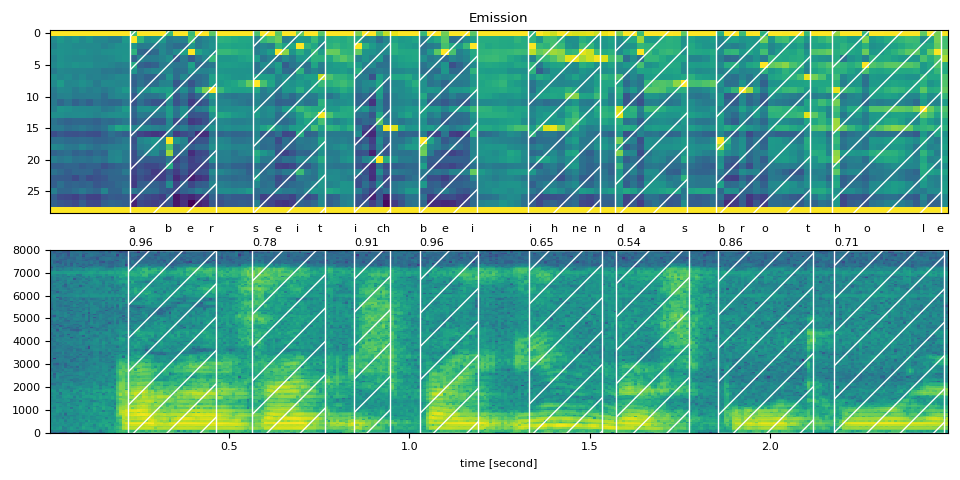

German

text_raw = "aber seit ich bei ihnen das brot hole"
text_normalized = "aber seit ich bei ihnen das brot hole"

url = "https://download.pytorch.org/torchaudio/tutorial-assets/10349_8674_000087.flac"
waveform, sample_rate = torchaudio.load(
    url, frame_offset=int(0.5 * bundle.sample_rate), num_frames=int(2.5 * bundle.sample_rate)
)
assert sample_rate == bundle.sample_rate

transcript = text_normalized.split()
tokens = tokenizer(transcript)

emission, token_spans = compute_alignments(waveform, transcript)
num_frames = emission.size(1)

plot_alignments(waveform, token_spans, emission, transcript)

print("Raw Transcript: ", text_raw)
print("Normalized Transcript: ", text_normalized)
IPython.display.Audio(waveform, rate=sample_rate)

/pytorch/audio/ci_env/lib/python3.11/site-packages/torchaudio/pipelines/_wav2vec2/aligner.py:40: UserWarning: torchaudio.functional._alignment.forced_align has been deprecated. This deprecation is part of a large refactoring effort to transition TorchAudio into a maintenance phase. Please see https://github.com/pytorch/audio/issues/3902 for more information. It will be removed from the 2.9 release.

aligned_tokens, scores = F.forced_align(emission, targets, blank=blank)

Raw Transcript: aber seit ich bei ihnen das brot hole

Normalized Transcript: aber seit ich bei ihnen das brot hole

preview_word(waveform, token_spans[0], num_frames, transcript[0])

aber (0.96): 0.222 - 0.464 sec

preview_word(waveform, token_spans[1], num_frames, transcript[1])

seit (0.78): 0.565 - 0.766 sec

preview_word(waveform, token_spans[2], num_frames, transcript[2])

ich (0.91): 0.847 - 0.948 sec

preview_word(waveform, token_spans[3], num_frames, transcript[3])

bei (0.96): 1.028 - 1.190 sec

preview_word(waveform, token_spans[4], num_frames, transcript[4])

ihnen (0.65): 1.331 - 1.532 sec

preview_word(waveform, token_spans[5], num_frames, transcript[5])

das (0.54): 1.573 - 1.774 sec

preview_word(waveform, token_spans[6], num_frames, transcript[6])

brot (0.86): 1.855 - 2.117 sec

preview_word(waveform, token_spans[7], num_frames, transcript[7])

hole (0.71): 2.177 - 2.480 sec

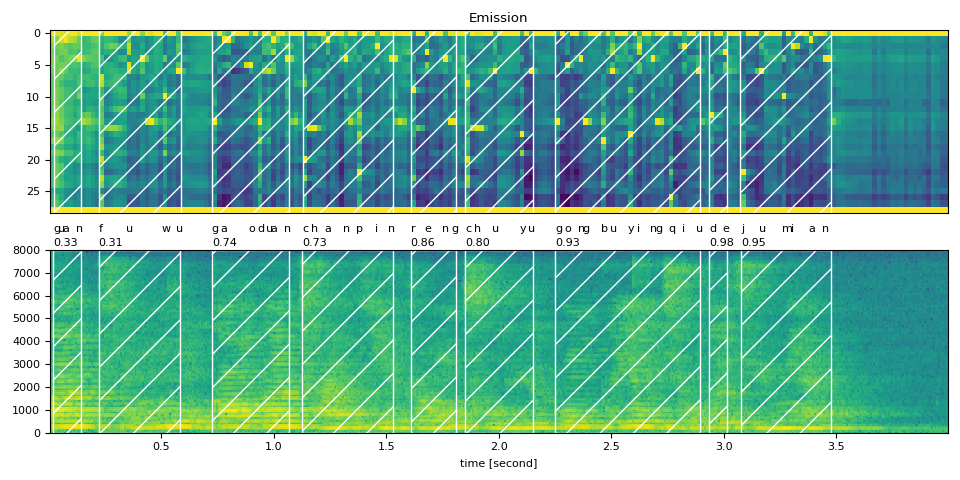

Chinese

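Chinese is a character-based language, and its raw written form has no explicit word-level segmentation (no spaces between words). To obtain word-level alignments, you first need to segment the transcript into words with a word tokenizer such as the "Stanford Tokenizer". If you only need character-level alignments, however, this step is unnecessary.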
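The romanized transcript below keeps the word boundaries of the segmented raw transcript, so entry i of `token_spans` corresponds to word i of the raw text. One may want to label the aligned segments with the original characters instead of pinyin. A hypothetical helper, `pair_words`, sketches how to keep that correspondence. It only checks that the word counts match, not that each romanization is correct.

```python
from typing import List, Tuple


def pair_words(raw: str, normalized: str) -> List[Tuple[str, str]]:
    """Pair each whitespace-separated raw word with its romanized counterpart."""
    raw_words, norm_words = raw.split(), normalized.split()
    if len(raw_words) != len(norm_words):
        raise ValueError(f"{len(raw_words)} raw words vs {len(norm_words)} normalized words")
    return list(zip(raw_words, norm_words))


pairs = pair_words("关 服务 高端 产品 仍 处于 供不应求 的 局面",
                   "guan fuwu gaoduan chanpin reng chuyu gongbuyingqiu de jumian")
print(pairs[6])  # ('供不应求', 'gongbuyingqiu')
```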

text_raw = "关 服务 高端 产品 仍 处于 供不应求 的 局面"
text_normalized = "guan fuwu gaoduan chanpin reng chuyu gongbuyingqiu de jumian"

# NOTE: `waveform` must hold the Mandarin recording at this point; the German clip
# loaded in the previous section does not match this transcript.
assert sample_rate == bundle.sample_rate

transcript = text_normalized.split()
emission, token_spans = compute_alignments(waveform, transcript)
num_frames = emission.size(1)

plot_alignments(waveform, token_spans, emission, transcript)

print("Raw Transcript: ", text_raw)
print("Normalized Transcript: ", text_normalized)
IPython.display.Audio(waveform, rate=sample_rate)


Raw Transcript: 关 服务 高端 产品 仍 处于 供不应求 的 局面

Normalized Transcript: guan fuwu gaoduan chanpin reng chuyu gongbuyingqiu de jumian

preview_word(waveform, token_spans[0], num_frames, transcript[0])

guan (0.33): 0.020 - 0.141 sec

preview_word(waveform, token_spans[1], num_frames, transcript[1])

fuwu (0.31): 0.221 - 0.583 sec

preview_word(waveform, token_spans[2], num_frames, transcript[2])

gaoduan (0.74): 0.724 - 1.065 sec

preview_word(waveform, token_spans[3], num_frames, transcript[3])

chanpin (0.73): 1.126 - 1.528 sec

preview_word(waveform, token_spans[4], num_frames, transcript[4])

reng (0.86): 1.608 - 1.809 sec

preview_word(waveform, token_spans[5], num_frames, transcript[5])

chuyu (0.80): 1.849 - 2.151 sec

preview_word(waveform, token_spans[6], num_frames, transcript[6])

gongbuyingqiu (0.93): 2.251 - 2.894 sec

preview_word(waveform, token_spans[7], num_frames, transcript[7])

de (0.98): 2.935 - 3.015 sec

preview_word(waveform, token_spans[8], num_frames, transcript[8])

jumian (0.95): 3.075 - 3.477 sec

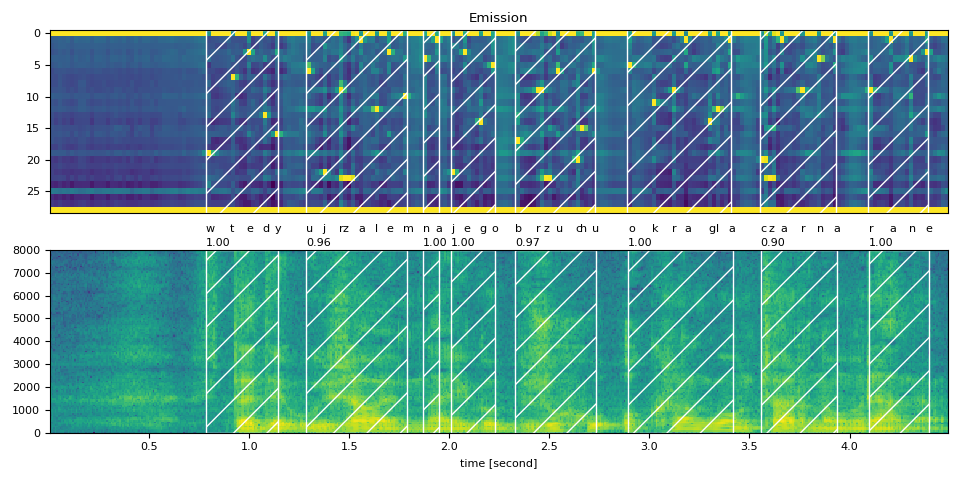

Polish

text_raw = "wtedy ujrzałem na jego brzuchu okrągłą czarną ranę"
text_normalized = "wtedy ujrzalem na jego brzuchu okragla czarna rane"

url = "https://download.pytorch.org/torchaudio/tutorial-assets/5090_1447_000088.flac"
waveform, sample_rate = torchaudio.load(url, num_frames=int(4.5 * bundle.sample_rate))
assert sample_rate == bundle.sample_rate

transcript = text_normalized.split()
emission, token_spans = compute_alignments(waveform, transcript)
num_frames = emission.size(1)

plot_alignments(waveform, token_spans, emission, transcript)

print("Raw Transcript: ", text_raw)
print("Normalized Transcript: ", text_normalized)
IPython.display.Audio(waveform, rate=sample_rate)


Raw Transcript: wtedy ujrzałem na jego brzuchu okrągłą czarną ranę

Normalized Transcript: wtedy ujrzalem na jego brzuchu okragla czarna rane

preview_word(waveform, token_spans[0], num_frames, transcript[0])

wtedy (1.00): 0.783 - 1.145 sec

preview_word(waveform, token_spans[1], num_frames, transcript[1])

ujrzalem (0.96): 1.286 - 1.788 sec

preview_word(waveform, token_spans[2], num_frames, transcript[2])

na (1.00): 1.868 - 1.949 sec

preview_word(waveform, token_spans[3], num_frames, transcript[3])

jego (1.00): 2.009 - 2.230 sec

preview_word(waveform, token_spans[4], num_frames, transcript[4])

brzuchu (0.97): 2.330 - 2.732 sec

preview_word(waveform, token_spans[5], num_frames, transcript[5])

okragla (1.00): 2.893 - 3.415 sec

preview_word(waveform, token_spans[6], num_frames, transcript[6])

czarna (0.90): 3.556 - 3.938 sec

preview_word(waveform, token_spans[7], num_frames, transcript[7])

rane (1.00): 4.098 - 4.399 sec

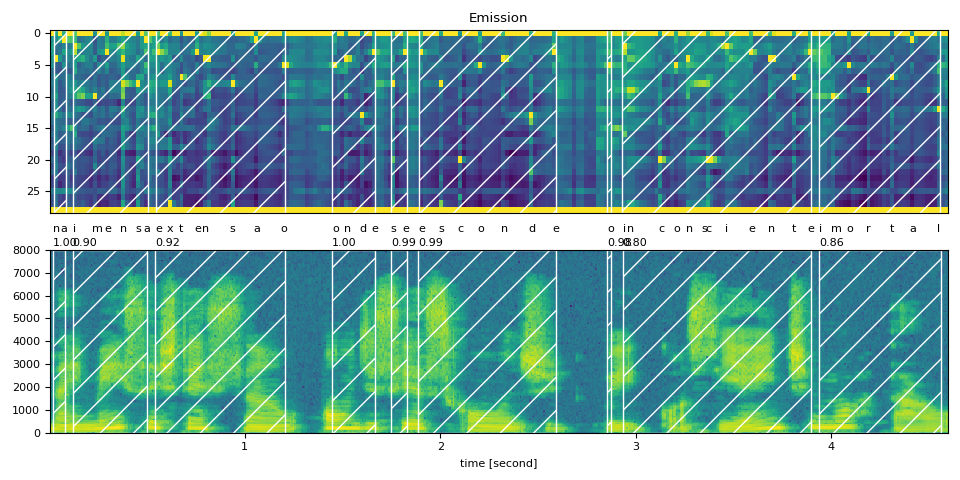

Portuguese

text_raw = "na imensa extensão onde se esconde o inconsciente imortal"
text_normalized = "na imensa extensao onde se esconde o inconsciente imortal"

url = "https://download.pytorch.org/torchaudio/tutorial-assets/6566_5323_000027.flac"
waveform, sample_rate = torchaudio.load(
    url, frame_offset=int(bundle.sample_rate), num_frames=int(4.6 * bundle.sample_rate)
)
assert sample_rate == bundle.sample_rate

transcript = text_normalized.split()
emission, token_spans = compute_alignments(waveform, transcript)
num_frames = emission.size(1)

plot_alignments(waveform, token_spans, emission, transcript)

print("Raw Transcript: ", text_raw)
print("Normalized Transcript: ", text_normalized)
IPython.display.Audio(waveform, rate=sample_rate)


Raw Transcript: na imensa extensão onde se esconde o inconsciente imortal

Normalized Transcript: na imensa extensao onde se esconde o inconsciente imortal

preview_word(waveform, token_spans[0], num_frames, transcript[0])

na (1.00): 0.020 - 0.080 sec

preview_word(waveform, token_spans[1], num_frames, transcript[1])

imensa (0.90): 0.120 - 0.502 sec

preview_word(waveform, token_spans[2], num_frames, transcript[2])

extensao (0.92): 0.542 - 1.205 sec

preview_word(waveform, token_spans[3], num_frames, transcript[3])

onde (1.00): 1.446 - 1.667 sec

preview_word(waveform, token_spans[4], num_frames, transcript[4])

se (0.99): 1.748 - 1.828 sec

preview_word(waveform, token_spans[5], num_frames, transcript[5])

esconde (0.99): 1.888 - 2.591 sec

preview_word(waveform, token_spans[6], num_frames, transcript[6])

o (0.98): 2.852 - 2.872 sec

preview_word(waveform, token_spans[7], num_frames, transcript[7])

inconsciente (0.80): 2.933 - 3.897 sec

preview_word(waveform, token_spans[8], num_frames, transcript[8])

imortal (0.86): 3.937 - 4.560 sec

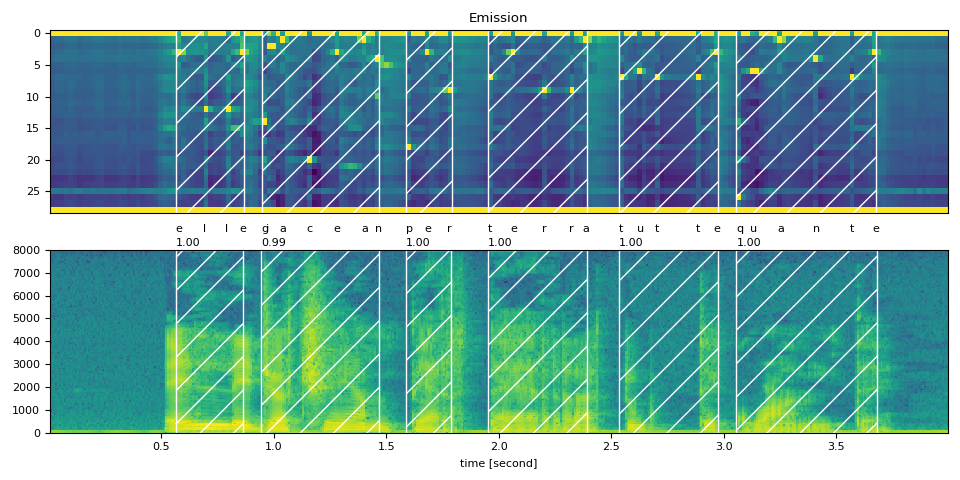

Italian

text_raw = "elle giacean per terra tutte quante"
text_normalized = "elle giacean per terra tutte quante"

url = "https://download.pytorch.org/torchaudio/tutorial-assets/642_529_000025.flac"
waveform, sample_rate = torchaudio.load(url, num_frames=int(4 * bundle.sample_rate))
assert sample_rate == bundle.sample_rate

transcript = text_normalized.split()
emission, token_spans = compute_alignments(waveform, transcript)
num_frames = emission.size(1)

plot_alignments(waveform, token_spans, emission, transcript)

print("Raw Transcript: ", text_raw)
print("Normalized Transcript: ", text_normalized)
IPython.display.Audio(waveform, rate=sample_rate)


Raw Transcript: elle giacean per terra tutte quante

Normalized Transcript: elle giacean per terra tutte quante

preview_word(waveform, token_spans[0], num_frames, transcript[0])

elle (1.00): 0.563 - 0.864 sec

preview_word(waveform, token_spans[1], num_frames, transcript[1])

giacean (0.99): 0.945 - 1.467 sec

preview_word(waveform, token_spans[2], num_frames, transcript[2])

per (1.00): 1.588 - 1.789 sec

preview_word(waveform, token_spans[3], num_frames, transcript[3])

terra (1.00): 1.950 - 2.392 sec

preview_word(waveform, token_spans[4], num_frames, transcript[4])

tutte (1.00): 2.533 - 2.975 sec

preview_word(waveform, token_spans[5], num_frames, transcript[5])

quante (1.00): 3.055 - 3.678 sec

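Conclusion

In this tutorial, we looked at how to use torchaudio's forced alignment API and a multilingual pre-trained Wav2Vec2 acoustic model to align speech data to transcripts in five languages.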

Acknowledgement

Thanks to Vineel Pratap and Zhaoheng Ni for developing and open-sourcing the forced aligner API.

Total running time of the script: (0 minutes 4.862 seconds)