Text-to-Speech with Tacotron2

Authors: Yao-Yuan Yang, Moto Hira

Overview

This tutorial shows how to build a text-to-speech pipeline using the pretrained Tacotron2 model in torchaudio.

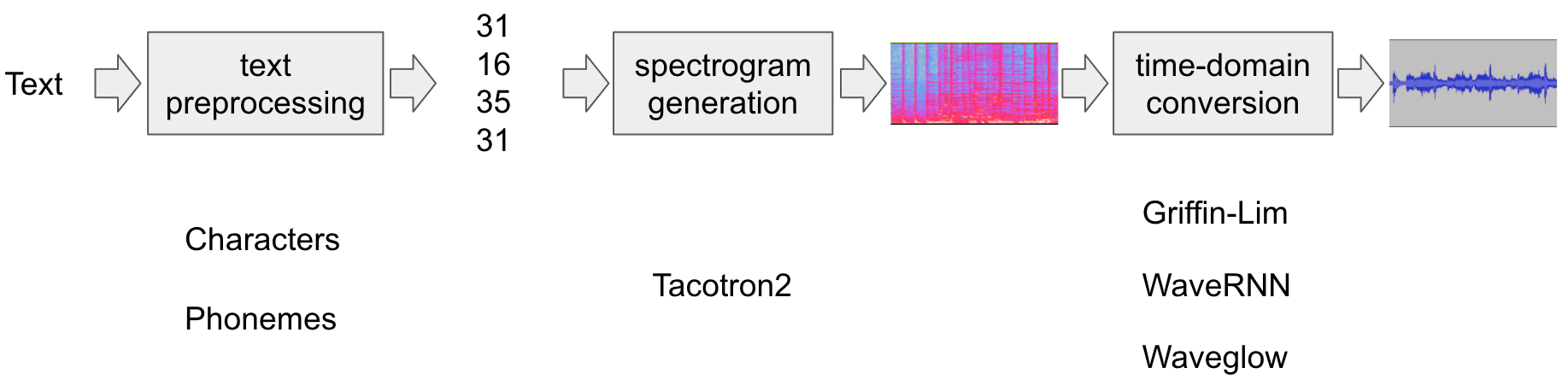

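The text-to-speech pipeline works as follows:

1. Text preprocessing

   First, the input text is encoded into a list of symbols. In this tutorial, we use English characters as the symbols.

2. Spectrogram generation

   A spectrogram is generated from the encoded text. We use the Tacotron2 model for this.

3. Time-domain conversion

   The last step converts the spectrogram into a waveform. A component that generates speech from a spectrogram is called a vocoder. This tutorial uses three different vocoders: WaveRNN, GriffinLim, and Nvidia's WaveGlow.

The figure above illustrates the whole process.

torchaudio.pipelines.Tacotron2TTSBundle bundles all the related components, but this tutorial also covers what happens under the hood.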

Preparation

import torch
import torchaudio

torch.random.manual_seed(0)
device = "cuda" if torch.cuda.is_available() else "cpu"

print(torch.__version__)
print(torchaudio.__version__)
print(device)

2.10.0.dev20251013+cu126

2.8.0a0+1d65bbe

cuda

import IPython
import matplotlib.pyplot as plt

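Text Processing

Character-based encoding

This section walks through how character-based encoding works.

The pretrained Tacotron2 model expects a specific symbol table, so torchaudio provides a matching text processor. To aid understanding, however, we first implement the encoding by hand.

First, we define the set of symbols '_-!\'(),.:;? abcdefghijklmnopqrstuvwxyz'. Then we map each character of the input text to the index of the corresponding symbol in the table. Characters that are not in the table are ignored.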
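The manual encoding described above can be sketched in a few lines of plain Python. This is a minimal illustration: the names `symbols`, `look_up`, and `text_to_sequence` are chosen for this sketch, and lowercasing the input is an assumption made because the symbol set contains only lowercase letters.

```python
# Symbol table from the text; a symbol's position in the string is its index.
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def text_to_sequence(text):
    """Map each character to its index, skipping characters not in the table."""
    text = text.lower()  # assumption: the table only has lowercase letters
    return [look_up[s] for s in text if s in look_up]


text = "Hello world! Text to speech!"
print(text_to_sequence(text))
```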

[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11, 31, 26, 11, 30, 27, 16, 16, 14, 19, 2]

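As mentioned above, the symbol table and indices must match what the pretrained Tacotron2 model expects. torchaudio provides the same transform along with the pretrained model. You can instantiate this transform and apply it to the text; for the same input it produces the following.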

tensor([[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11,
         31, 26, 11, 30, 27, 16, 16, 14, 19, 2]])

tensor([28], dtype=torch.int32)

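Notice that the output of our manual encoding matches the output of torchaudio's text_processor, which means we correctly re-implemented what the library does internally. The processor accepts either a single text or a list of texts. When a list of texts is provided, the returned lengths variable gives the valid length of each processed sequence in the output batch.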
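To make the role of `lengths` concrete, here is a plain-Python sketch of batched encoding. It is an illustration only, not torchaudio's implementation: `encode_batch` is a hypothetical helper, and padding shorter sequences with index 0 is an assumption of this sketch.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def encode_batch(texts, pad_index=0):
    """Encode a list of texts and pad them to a common length.

    Returns (padded, lengths); lengths[i] is the number of valid
    (non-padding) entries in padded[i].
    """
    encoded = [[look_up[c] for c in t.lower() if c in look_up] for t in texts]
    lengths = [len(seq) for seq in encoded]
    max_len = max(lengths, default=0)
    # pad_index=0 is an assumption made for this illustration
    padded = [seq + [pad_index] * (max_len - len(seq)) for seq in encoded]
    return padded, lengths


padded, lengths = encode_batch(["Hello world!", "Hi!"])
print(padded)
print(lengths)
```

Downstream code can then use `lengths` to ignore the padded positions of the shorter entries.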

The intermediate representation can be retrieved as follows:
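One way to inspect the intermediate representation is to map each index back to its symbol. The sketch below uses the hand-written symbol table from this section rather than the torchaudio processor; `sequence_to_symbols` is a hypothetical helper.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"


def sequence_to_symbols(sequence, length=None):
    """Map indices back to symbols, keeping only the first `length` entries."""
    if length is not None:
        sequence = sequence[:length]
    return [symbols[i] for i in sequence]


encoded = [19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35,
           31, 11, 31, 26, 11, 30, 27, 16, 16, 14, 19, 2]
print(sequence_to_symbols(encoded, length=28))
```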

['h', 'e', 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd', '!', ' ', 't', 'e', 'x', 't', ' ', 't', 'o', ' ', 's', 'p', 'e', 'e', 'c', 'h', '!']

频谱图生成¶

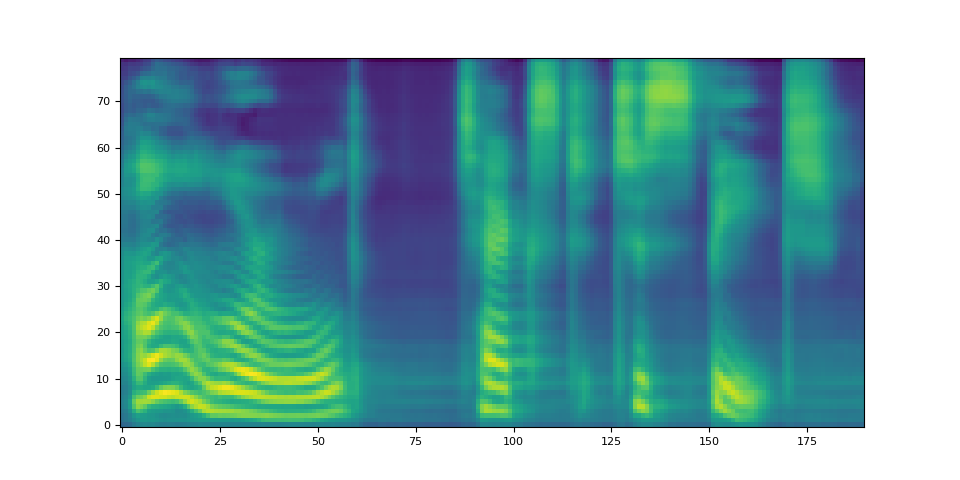

Tacotron2 是我们用来从编码文本生成频谱图的模型。有关模型的详细信息,请参阅 论文。

使用预训练权重实例化 Tacotron2 模型很容易,但请注意,Tacotron2 模型需要由匹配的文本处理器进行处理。

torchaudio.pipelines.Tacotron2TTSBundle 将匹配的模型和处理器捆绑在一起,以便于创建管道。

有关可用的 bundle 及其用法,请参阅 Tacotron2TTSBundle。

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH
processor = bundle.get_text_processor()
tacotron2 = bundle.get_tacotron2().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():
    # Encode the text, then move the inputs to the model's device
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    # infer() returns the spectrogram first; the other outputs are unused here
    spec, _, _ = tacotron2.infer(processed, lengths)

_ = plt.imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")

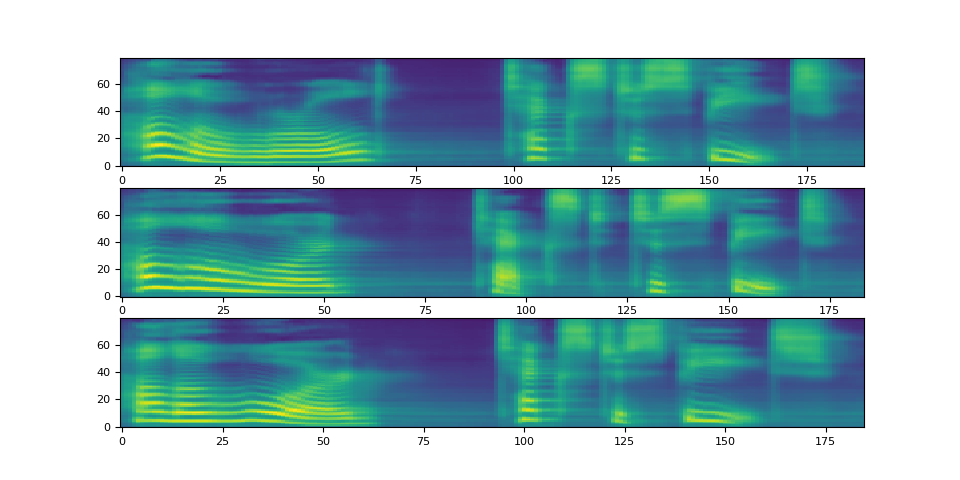

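Note that the Tacotron2.infer method performs multinomial sampling, so spectrogram generation is a random process: repeated calls with the same input produce different spectrograms.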

def plot():
    fig, ax = plt.subplots(3, 1)
    # Run inference three times on the same input to show the variation
    for i in range(3):
        with torch.inference_mode():
            spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
        print(spec[0].shape)
        ax[i].imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")

plot()

torch.Size([80, 183])

torch.Size([80, 196])

torch.Size([80, 184])

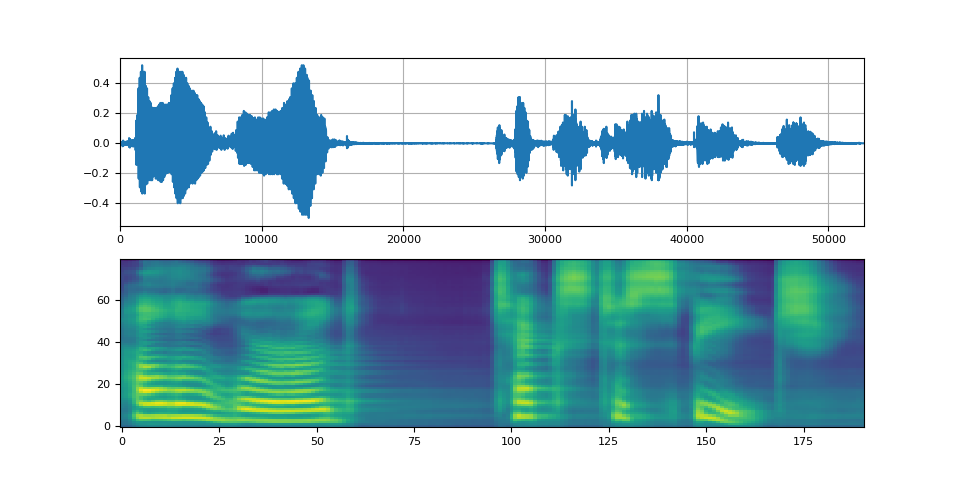

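Waveform Generation

Once the spectrogram is generated, the last step is to recover the waveform from the spectrogram using a vocoder.

torchaudio provides vocoders based on GriffinLim and WaveRNN.

WaveRNN Vocoder

Continuing from the previous section, we can instantiate the matching WaveRNN model from the same bundle.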

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH

processor = bundle.get_text_processor()
tacotron2 = bundle.get_tacotron2().to(device)
vocoder = bundle.get_vocoder().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
    # The vocoder converts the spectrogram batch into waveforms
    waveforms, lengths = vocoder(spec, spec_lengths)

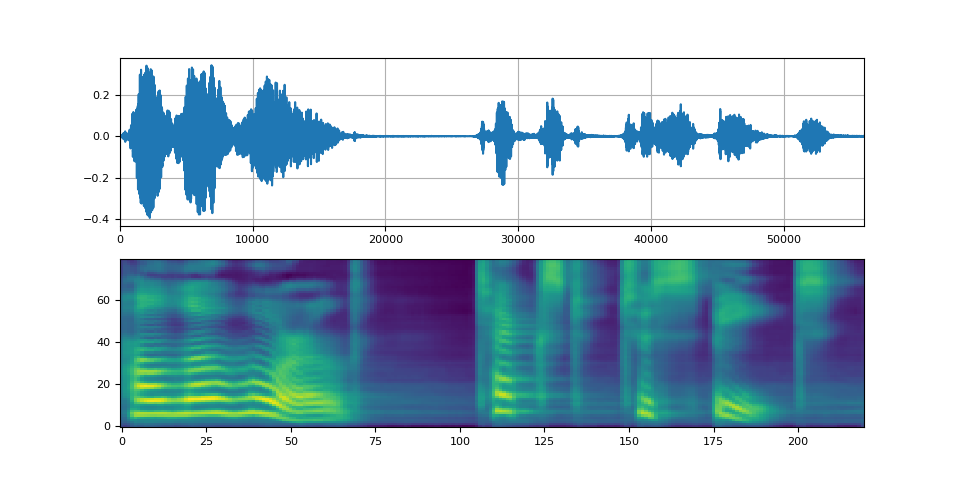

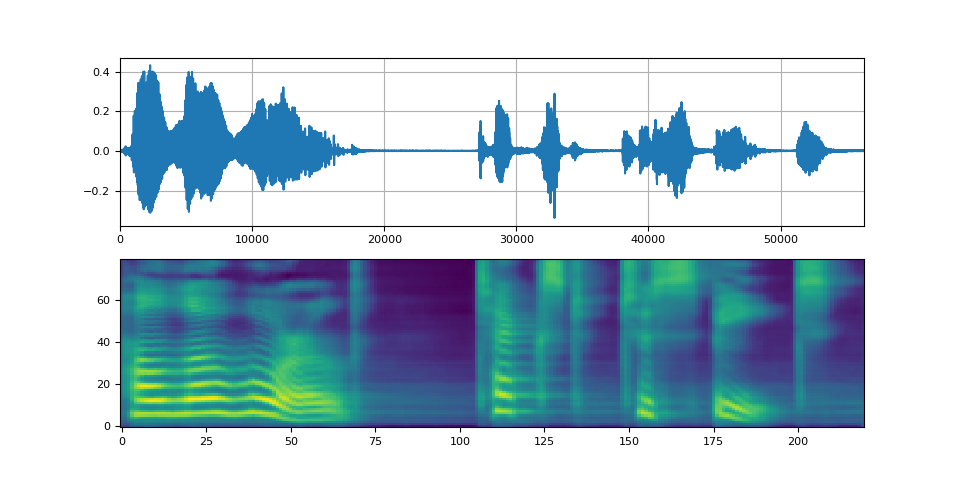

def plot(waveforms, spec, sample_rate):
    waveforms = waveforms.cpu().detach()

    # Top panel: waveform; bottom panel: spectrogram
    fig, [ax1, ax2] = plt.subplots(2, 1)
    ax1.plot(waveforms[0])
    ax1.set_xlim(0, waveforms.size(-1))
    ax1.grid(True)
    ax2.imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")
    return IPython.display.Audio(waveforms[0:1], rate=sample_rate)

plot(waveforms, spec, vocoder.sample_rate)

Griffin-Lim Vocoder

Using the Griffin-Lim vocoder works the same way as WaveRNN. You can instantiate the vocoder object with the get_vocoder() method and pass it the spectrogram.

bundle = torchaudio.pipelines.TACOTRON2_GRIFFINLIM_CHAR_LJSPEECH

processor = bundle.get_text_processor()
tacotron2 = bundle.get_tacotron2().to(device)
vocoder = bundle.get_vocoder().to(device)

with torch.inference_mode():
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
    waveforms, lengths = vocoder(spec, spec_lengths)

Waveglow Vocoder

Waveglow is a vocoder published by Nvidia. The pretrained weights are published on Torch Hub, and the model can be instantiated with the torch.hub module.

# Workaround to load model mapped on GPU
# https://stackoverflow.com/a/61840832
waveglow = torch.hub.load(
    "NVIDIA/DeepLearningExamples:torchhub",
    "nvidia_waveglow",
    model_math="fp32",
    pretrained=False,
)
checkpoint = torch.hub.load_state_dict_from_url(
    "https://api.ngc.nvidia.com/v2/models/nvidia/waveglowpyt_fp32/versions/1/files/nvidia_waveglowpyt_fp32_20190306.pth",  # noqa: E501
    progress=False,
    map_location=device,
)
# Strip the "module." prefix from the checkpoint's parameter names
state_dict = {key.replace("module.", ""): value for key, value in checkpoint["state_dict"].items()}

waveglow.load_state_dict(state_dict)
waveglow = waveglow.remove_weightnorm(waveglow)
waveglow = waveglow.to(device)
waveglow.eval()

with torch.no_grad():
    waveforms = waveglow.infer(spec)

Total running time of the script: (1 minute 16.512 seconds)