
Chatbot Tutorial

Created On: Aug 14, 2018 | Last Updated: Jan 24, 2025 | Last Verified: Nov 05, 2024

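In this tutorial, we explore a fun and useful use case of recurrent sequence-to-sequence models. We will train a simple chatbot using movie scripts from the Cornell Movie-Dialogs Corpus.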

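Conversational models are a hot topic in artificial intelligence research. Chatbots can be found in a variety of settings, including customer service applications and online helpdesks. These bots are often powered by retrieval-based models, which output predefined responses to questions of certain forms. In a highly restricted domain like a company's IT helpdesk, these models may be sufficient. However, they are not robust enough for more general use cases. Teaching a machine to carry out a meaningful conversation with a human in multiple domains is a research question that is far from solved. Recently, the deep learning boom has made possible powerful generative models like Google's Neural Conversational Model, which marks a large step towards multi-domain generative conversational models. In this tutorial, we will implement this kind of model in PyTorch.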

> hello?

Bot: hello .

> where am I?

Bot: you re in a hospital .

> who are you?

Bot: i m a lawyer .

> how are you doing?

Bot: i m fine .

> are you my friend?

Bot: no .

> you're under arrest

Bot: i m trying to help you !

> i'm just kidding

Bot: i m sorry .

> where are you from?

Bot: san francisco .

> it's time for me to leave

Bot: i know .

> goodbye

Bot: goodbye .

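Tutorial Highlights

Handle loading and preprocessing of the Cornell Movie-Dialogs Corpus dataset

Implement a sequence-to-sequence model with a Luong attention mechanism

Jointly train encoder and decoder models using mini-batches

Implement a greedy-search decoding module

Interact with the trained chatbot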

Acknowledgments

This tutorial borrows code from the following sources:

Yuan-Kuei Wu's pytorch-chatbot implementation: ywk991112/pytorch-chatbot

Sean Robertson's practical-pytorch seq2seq-translation example: spro/practical-pytorch

FloydHub Cornell Movie Corpus preprocessing code: floydhub/textutil-preprocess-cornell-movie-corpus

Preparations

To start, download the Movie-Dialogs Corpus zip file and put it in a data/ directory under the current directory. After that, let's import some necessities.

import torch
from torch.jit import script, trace
import torch.nn as nn
from torch import optim
import torch.nn.functional as F
import csv
import random
import re
import os
import unicodedata
import codecs
from io import open
import itertools
import math
import json

# If the current `accelerator <https://pytorch.ac.cn/docs/stable/torch.html#accelerators>`__ is available,
# we will use it. Otherwise, we use the CPU.
device = torch.accelerator.current_accelerator().type if torch.accelerator.is_available() else "cpu"
print(f"Using {device} device")

Using cuda device

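Load & Preprocess Data

The next step is to reformat our data file and load the data into structures that we can work with.

The Cornell Movie-Dialogs Corpus is a rich dataset of movie character dialog:

220,579 conversational exchanges between pairs of movie characters

9,035 characters from 617 movies

304,713 total utterances

This dataset is large and diverse, with great variation in language formality, time period, sentiment, and so on. Our hope is that this diversity makes our model robust to many forms of inputs and queries.

First, we'll look at some lines of our data file to see the original format.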

corpus_name = "movie-corpus"
corpus = os.path.join("data", corpus_name)

def printLines(file, n=10):
    with open(file, 'rb') as datafile:
        lines = datafile.readlines()
    for line in lines[:n]:
        print(line)

printLines(os.path.join(corpus, "utterances.jsonl"))

b'{"id": "L1045", "conversation_id": "L1044", "text": "They do not!", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 1, "toks": [{"tok": "They", "tag": "PRP", "dep": "nsubj", "up": 1, "dn": []}, {"tok": "do", "tag": "VBP", "dep": "ROOT", "dn": [0, 2, 3]}, {"tok": "not", "tag": "RB", "dep": "neg", "up": 1, "dn": []}, {"tok": "!", "tag": ".", "dep": "punct", "up": 1, "dn": []}]}]}, "reply-to": "L1044", "timestamp": null, "vectors": []}\n'

b'{"id": "L1044", "conversation_id": "L1044", "text": "They do to!", "speaker": "u2", "meta": {"movie_id": "m0", "parsed": [{"rt": 1, "toks": [{"tok": "They", "tag": "PRP", "dep": "nsubj", "up": 1, "dn": []}, {"tok": "do", "tag": "VBP", "dep": "ROOT", "dn": [0, 2, 3]}, {"tok": "to", "tag": "TO", "dep": "dobj", "up": 1, "dn": []}, {"tok": "!", "tag": ".", "dep": "punct", "up": 1, "dn": []}]}]}, "reply-to": null, "timestamp": null, "vectors": []}\n'

b'{"id": "L985", "conversation_id": "L984", "text": "I hope so.", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 1, "toks": [{"tok": "I", "tag": "PRP", "dep": "nsubj", "up": 1, "dn": []}, {"tok": "hope", "tag": "VBP", "dep": "ROOT", "dn": [0, 2, 3]}, {"tok": "so", "tag": "RB", "dep": "advmod", "up": 1, "dn": []}, {"tok": ".", "tag": ".", "dep": "punct", "up": 1, "dn": []}]}]}, "reply-to": "L984", "timestamp": null, "vectors": []}\n'

b'{"id": "L984", "conversation_id": "L984", "text": "She okay?", "speaker": "u2", "meta": {"movie_id": "m0", "parsed": [{"rt": 1, "toks": [{"tok": "She", "tag": "PRP", "dep": "nsubj", "up": 1, "dn": []}, {"tok": "okay", "tag": "RB", "dep": "ROOT", "dn": [0, 2]}, {"tok": "?", "tag": ".", "dep": "punct", "up": 1, "dn": []}]}]}, "reply-to": null, "timestamp": null, "vectors": []}\n'

b'{"id": "L925", "conversation_id": "L924", "text": "Let\'s go.", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 0, "toks": [{"tok": "Let", "tag": "VB", "dep": "ROOT", "dn": [2, 3]}, {"tok": "\'s", "tag": "PRP", "dep": "nsubj", "up": 2, "dn": []}, {"tok": "go", "tag": "VB", "dep": "ccomp", "up": 0, "dn": [1]}, {"tok": ".", "tag": ".", "dep": "punct", "up": 0, "dn": []}]}]}, "reply-to": "L924", "timestamp": null, "vectors": []}\n'

b'{"id": "L924", "conversation_id": "L924", "text": "Wow", "speaker": "u2", "meta": {"movie_id": "m0", "parsed": [{"rt": 0, "toks": [{"tok": "Wow", "tag": "UH", "dep": "ROOT", "dn": []}]}]}, "reply-to": null, "timestamp": null, "vectors": []}\n'

b'{"id": "L872", "conversation_id": "L870", "text": "Okay -- you\'re gonna need to learn how to lie.", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 4, "toks": [{"tok": "Okay", "tag": "UH", "dep": "intj", "up": 4, "dn": []}, {"tok": "--", "tag": ":", "dep": "punct", "up": 4, "dn": []}, {"tok": "you", "tag": "PRP", "dep": "nsubj", "up": 4, "dn": []}, {"tok": "\'re", "tag": "VBP", "dep": "aux", "up": 4, "dn": []}, {"tok": "gon", "tag": "VBG", "dep": "ROOT", "dn": [0, 1, 2, 3, 6, 12]}, {"tok": "na", "tag": "TO", "dep": "aux", "up": 6, "dn": []}, {"tok": "need", "tag": "VB", "dep": "xcomp", "up": 4, "dn": [5, 8]}, {"tok": "to", "tag": "TO", "dep": "aux", "up": 8, "dn": []}, {"tok": "learn", "tag": "VB", "dep": "xcomp", "up": 6, "dn": [7, 11]}, {"tok": "how", "tag": "WRB", "dep": "advmod", "up": 11, "dn": []}, {"tok": "to", "tag": "TO", "dep": "aux", "up": 11, "dn": []}, {"tok": "lie", "tag": "VB", "dep": "xcomp", "up": 8, "dn": [9, 10]}, {"tok": ".", "tag": ".", "dep": "punct", "up": 4, "dn": []}]}]}, "reply-to": "L871", "timestamp": null, "vectors": []}\n'

b'{"id": "L871", "conversation_id": "L870", "text": "No", "speaker": "u2", "meta": {"movie_id": "m0", "parsed": [{"rt": 0, "toks": [{"tok": "No", "tag": "UH", "dep": "ROOT", "dn": []}]}]}, "reply-to": "L870", "timestamp": null, "vectors": []}\n'

b'{"id": "L870", "conversation_id": "L870", "text": "I\'m kidding. You know how sometimes you just become this \\"persona\\"? And you don\'t know how to quit?", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 2, "toks": [{"tok": "I", "tag": "PRP", "dep": "nsubj", "up": 2, "dn": []}, {"tok": "\'m", "tag": "VBP", "dep": "aux", "up": 2, "dn": []}, {"tok": "kidding", "tag": "VBG", "dep": "ROOT", "dn": [0, 1, 3]}, {"tok": ".", "tag": ".", "dep": "punct", "up": 2, "dn": [4]}, {"tok": " ", "tag": "_SP", "dep": "", "up": 3, "dn": []}]}, {"rt": 1, "toks": [{"tok": "You", "tag": "PRP", "dep": "nsubj", "up": 1, "dn": []}, {"tok": "know", "tag": "VBP", "dep": "ROOT", "dn": [0, 6, 11]}, {"tok": "how", "tag": "WRB", "dep": "advmod", "up": 3, "dn": []}, {"tok": "sometimes", "tag": "RB", "dep": "advmod", "up": 6, "dn": [2]}, {"tok": "you", "tag": "PRP", "dep": "nsubj", "up": 6, "dn": []}, {"tok": "just", "tag": "RB", "dep": "advmod", "up": 6, "dn": []}, {"tok": "become", "tag": "VBP", "dep": "ccomp", "up": 1, "dn": [3, 4, 5, 9]}, {"tok": "this", "tag": "DT", "dep": "det", "up": 9, "dn": []}, {"tok": "\\"", "tag": "``", "dep": "punct", "up": 9, "dn": []}, {"tok": "persona", "tag": "NN", "dep": "attr", "up": 6, "dn": [7, 8, 10]}, {"tok": "\\"", "tag": "\'\'", "dep": "punct", "up": 9, "dn": []}, {"tok": "?", "tag": ".", "dep": "punct", "up": 1, "dn": [12]}, {"tok": " ", "tag": "_SP", "dep": "", "up": 11, "dn": []}]}, {"rt": 4, "toks": [{"tok": "And", "tag": "CC", "dep": "cc", "up": 4, "dn": []}, {"tok": "you", "tag": "PRP", "dep": "nsubj", "up": 4, "dn": []}, {"tok": "do", "tag": "VBP", "dep": "aux", "up": 4, "dn": []}, {"tok": "n\'t", "tag": "RB", "dep": "neg", "up": 4, "dn": []}, {"tok": "know", "tag": "VB", "dep": "ROOT", "dn": [0, 1, 2, 3, 7, 8]}, {"tok": "how", "tag": "WRB", "dep": "advmod", "up": 7, "dn": []}, {"tok": "to", "tag": "TO", "dep": "aux", "up": 7, "dn": []}, {"tok": "quit", "tag": "VB", "dep": "xcomp", "up": 4, "dn": [5, 6]}, {"tok": "?", "tag": ".", "dep": "punct", "up": 4, "dn": []}]}]}, "reply-to": null, "timestamp": null, "vectors": []}\n'

b'{"id": "L869", "conversation_id": "L866", "text": "Like my fear of wearing pastels?", "speaker": "u0", "meta": {"movie_id": "m0", "parsed": [{"rt": 0, "toks": [{"tok": "Like", "tag": "IN", "dep": "ROOT", "dn": [2, 6]}, {"tok": "my", "tag": "PRP$", "dep": "poss", "up": 2, "dn": []}, {"tok": "fear", "tag": "NN", "dep": "pobj", "up": 0, "dn": [1, 3]}, {"tok": "of", "tag": "IN", "dep": "prep", "up": 2, "dn": [4]}, {"tok": "wearing", "tag": "VBG", "dep": "pcomp", "up": 3, "dn": [5]}, {"tok": "pastels", "tag": "NNS", "dep": "dobj", "up": 4, "dn": []}, {"tok": "?", "tag": ".", "dep": "punct", "up": 0, "dn": []}]}]}, "reply-to": "L868", "timestamp": null, "vectors": []}\n'

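Create formatted data file

For convenience, we'll create a nicely formatted data file in which each line contains a tab-separated query sentence and response sentence pair.

The following functions facilitate the parsing of the raw utterances.jsonl data file.

loadLinesAndConversations splits each line of the file into a dictionary of lines with fields lineID, characterID, and text. It then groups them into conversations with fields conversationID, movieID, and lines.

extractSentencePairs extracts pairs of sentences from conversations.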

# Splits each line of the file to create lines and conversations
def loadLinesAndConversations(fileName):
    lines = {}
    conversations = {}
    with open(fileName, 'r', encoding='iso-8859-1') as f:
        for line in f:
            lineJson = json.loads(line)
            # Extract fields for line object
            lineObj = {}
            lineObj["lineID"] = lineJson["id"]
            lineObj["characterID"] = lineJson["speaker"]
            lineObj["text"] = lineJson["text"]
            lines[lineObj['lineID']] = lineObj

            # Extract fields for conversation object
            if lineJson["conversation_id"] not in conversations:
                convObj = {}
                convObj["conversationID"] = lineJson["conversation_id"]
                convObj["movieID"] = lineJson["meta"]["movie_id"]
                convObj["lines"] = [lineObj]
            else:
                convObj = conversations[lineJson["conversation_id"]]
                convObj["lines"].insert(0, lineObj)
            conversations[convObj["conversationID"]] = convObj

    return lines, conversations


# Extracts pairs of sentences from conversations
def extractSentencePairs(conversations):
    qa_pairs = []
    for conversation in conversations.values():
        # Iterate over all the lines of the conversation
        for i in range(len(conversation["lines"]) - 1):  # We ignore the last line (no answer for it)
            inputLine = conversation["lines"][i]["text"].strip()
            targetLine = conversation["lines"][i+1]["text"].strip()
            # Filter wrong samples (if one of the lists is empty)
            if inputLine and targetLine:
                qa_pairs.append([inputLine, targetLine])
    return qa_pairs
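Here is a minimal, self-contained sketch of the same grouping idea, run on three made-up records. The IDs, texts and snake_case function names are hypothetical, not part of the tutorial. In the sample printed earlier, each reply (e.g. L1045) appears before the line it answers (L1044). Prepending with `insert(0, ...)` therefore restores chronological order before adjacent lines are paired.

```python
import json

# Hypothetical records in the same shape as utterances.jsonl (newest line first)
raw = [
    '{"id": "L3", "conversation_id": "L1", "text": "Fine."}',
    '{"id": "L2", "conversation_id": "L1", "text": "How are you?"}',
    '{"id": "L1", "conversation_id": "L1", "text": "Hi."}',
]


def group_conversations(json_lines):
    """Group utterance texts by conversation, prepending so the oldest ends up first."""
    conversations = {}
    for line in json_lines:
        rec = json.loads(line)
        conversations.setdefault(rec["conversation_id"], []).insert(0, rec["text"])
    return conversations


def sentence_pairs(conversations):
    """Pair each line with the next one; the last line has no response and is skipped."""
    pairs = []
    for lines in conversations.values():
        for query, response in zip(lines, lines[1:]):
            if query.strip() and response.strip():  # drop pairs with an empty side
                pairs.append([query.strip(), response.strip()])
    return pairs


convs = group_conversations(raw)
print(sentence_pairs(convs))
# [['Hi.', 'How are you?'], ['How are you?', 'Fine.']]
```

`zip(lines, lines[1:])` plays the role of the index loop in `extractSentencePairs`. Both stop one line before the end of each conversation.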

Now we'll call these functions and create the file. We'll call it formatted_movie_lines.txt.

# Define path to new file
datafile = os.path.join(corpus, "formatted_movie_lines.txt")

delimiter = '\t'
# Unescape the delimiter
delimiter = str(codecs.decode(delimiter, "unicode_escape"))

# Initialize lines dict and conversations dict
lines = {}
conversations = {}
# Load lines and conversations
print("\nProcessing corpus into lines and conversations...")
lines, conversations = loadLinesAndConversations(os.path.join(corpus, "utterances.jsonl"))

# Write new csv file
print("\nWriting newly formatted file...")
with open(datafile, 'w', encoding='utf-8') as outputfile:
    writer = csv.writer(outputfile, delimiter=delimiter, lineterminator='\n')
    for pair in extractSentencePairs(conversations):
        writer.writerow(pair)

# Print a sample of lines
print("\nSample lines from file:")
printLines(datafile)

Processing corpus into lines and conversations...

Writing newly formatted file...

Sample lines from file:

b'They do to!\tThey do not!\n'

b'She okay?\tI hope so.\n'

b"Wow\tLet's go.\n"

b'"I\'m kidding. You know how sometimes you just become this ""persona""? And you don\'t know how to quit?"\tNo\n'

b"No\tOkay -- you're gonna need to learn how to lie.\n"

b"I figured you'd get to the good stuff eventually.\tWhat good stuff?\n"

b'What good stuff?\t"The ""real you""."\n'

b'"The ""real you""."\tLike my fear of wearing pastels?\n'

b'do you listen to this crap?\tWhat crap?\n'

b"What crap?\tMe. This endless ...blonde babble. I'm like, boring myself.\n"

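Load and trim data

Our next order of business is to create a vocabulary and load the query/response sentence pairs into memory.

Note that we are dealing with sequences of **words**, which have no implicit mapping to a discrete numerical space. We must therefore create one by mapping each unique word in our dataset to an index value.

For this we define a Voc class. It keeps:

- a mapping from words to indexes;
- a reverse mapping from indexes to words;
- a count of each word;
- a total word count.

The class provides methods for adding a word to the vocabulary (addWord), adding all words in a sentence (addSentence), and trimming infrequently seen words (trim). More on trimming later.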

# Default word tokens
PAD_token = 0  # Used for padding short sentences
SOS_token = 1  # Start-of-sentence token
EOS_token = 2  # End-of-sentence token

class Voc:
    def __init__(self, name):
        self.name = name
        self.trimmed = False
        self.word2index = {}
        self.word2count = {}
        self.index2word = {PAD_token: "PAD", SOS_token: "SOS", EOS_token: "EOS"}
        self.num_words = 3  # Count SOS, EOS, PAD

    def addSentence(self, sentence):
        for word in sentence.split(' '):
            self.addWord(word)

    def addWord(self, word):
        if word not in self.word2index:
            self.word2index[word] = self.num_words
            self.word2count[word] = 1
            self.index2word[self.num_words] = word
            self.num_words += 1
        else:
            self.word2count[word] += 1

    # Remove words below a certain count threshold
    def trim(self, min_count):
        if self.trimmed:
            return
        self.trimmed = True

        keep_words = []

        for k, v in self.word2count.items():
            if v >= min_count:
                keep_words.append(k)

        print('keep_words {} / {} = {:.4f}'.format(
            len(keep_words), len(self.word2index), len(keep_words) / len(self.word2index)
        ))

        # Reinitialize dictionaries
        self.word2index = {}
        self.word2count = {}
        self.index2word = {PAD_token: "PAD", SOS_token: "SOS", EOS_token: "EOS"}
        self.num_words = 3  # Count default tokens

        for word in keep_words:
            self.addWord(word)

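Now we can assemble our vocabulary and query/response sentence pairs. Before we are ready to use this data, we must perform some preprocessing.

First, we must convert the Unicode strings to ASCII using unicodeToAscii. Next, we convert all letters to lowercase and remove all non-letter characters except for basic punctuation (normalizeString). Finally, to aid training convergence, we filter out pairs in which either sentence has MAX_LENGTH or more words (filterPairs). This way every sequence fits within MAX_LENGTH tokens once the EOS token is appended.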

MAX_LENGTH = 10  # Maximum sentence length to consider

# Turn a Unicode string to plain ASCII, thanks to
# https://stackoverflow.com/a/518232/2809427
def unicodeToAscii(s):
    return ''.join(
        c for c in unicodedata.normalize('NFD', s)
        if unicodedata.category(c) != 'Mn'
    )

# Lowercase, trim, and remove non-letter characters
def normalizeString(s):
    s = unicodeToAscii(s.lower().strip())
    s = re.sub(r"([.!?])", r" \1", s)
    s = re.sub(r"[^a-zA-Z.!?]+", r" ", s)
    s = re.sub(r"\s+", r" ", s).strip()
    return s

# Read query/response pairs and return a voc object
def readVocs(datafile, corpus_name):
    print("Reading lines...")
    # Read the file and split into lines
    lines = open(datafile, encoding='utf-8').\
        read().strip().split('\n')
    # Split every line into pairs and normalize
    pairs = [[normalizeString(s) for s in l.split('\t')] for l in lines]
    voc = Voc(corpus_name)
    return voc, pairs

# Returns True if both sentences in a pair 'p' are under the MAX_LENGTH threshold
def filterPair(p):
    # Input sequences need to preserve the last word for EOS token
    return len(p[0].split(' ')) < MAX_LENGTH and len(p[1].split(' ')) < MAX_LENGTH

# Filter pairs using the ``filterPair`` condition
def filterPairs(pairs):
    return [pair for pair in pairs if filterPair(pair)]

# Using the functions defined above, return a populated voc object and pairs list
def loadPrepareData(corpus, corpus_name, datafile, save_dir):
    print("Start preparing training data ...")
    voc, pairs = readVocs(datafile, corpus_name)
    print("Read {!s} sentence pairs".format(len(pairs)))
    pairs = filterPairs(pairs)
    print("Trimmed to {!s} sentence pairs".format(len(pairs)))
    print("Counting words...")
    for pair in pairs:
        voc.addSentence(pair[0])
        voc.addSentence(pair[1])
    print("Counted words:", voc.num_words)
    return voc, pairs


# Load/Assemble voc and pairs
save_dir = os.path.join("data", "save")
voc, pairs = loadPrepareData(corpus, corpus_name, datafile, save_dir)
# Print some pairs to validate
print("\npairs:")
for pair in pairs[:10]:
    print(pair)

Start preparing training data ...

Reading lines...

Read 221282 sentence pairs

Trimmed to 64313 sentence pairs

Counting words...

Counted words: 18082

pairs:

['they do to !', 'they do not !']

['she okay ?', 'i hope so .']

['wow', 'let s go .']

['what good stuff ?', 'the real you .']

['the real you .', 'like my fear of wearing pastels ?']

['do you listen to this crap ?', 'what crap ?']

['well no . . .', 'then that s all you had to say .']

['then that s all you had to say .', 'but']

['but', 'you always been this selfish ?']

['have fun tonight ?', 'tons']
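The normalization and the length filter can be checked in isolation. This sketch restates `unicodeToAscii`, `normalizeString` and the `filterPair` condition as standalone functions and runs them on a made-up string. The snake_case names are ours and are hypothetical.

```python
import re
import unicodedata

MAX_LENGTH = 10  # same threshold as in the tutorial


def unicode_to_ascii(s):
    # Decompose accented characters (NFD) and drop the combining marks (category "Mn")
    return ''.join(c for c in unicodedata.normalize('NFD', s)
                   if unicodedata.category(c) != 'Mn')


def normalize_string(s):
    s = unicode_to_ascii(s.lower().strip())
    s = re.sub(r"([.!?])", r" \1", s)       # put a space before . ! ?
    s = re.sub(r"[^a-zA-Z.!?]+", r" ", s)   # every other run of characters becomes a space
    return re.sub(r"\s+", r" ", s).strip()  # collapse repeated whitespace


def keep_pair(pair):
    # Fewer than MAX_LENGTH words leaves room for the appended EOS token
    return all(len(s.split(' ')) < MAX_LENGTH for s in pair)


print(normalize_string("Let's go, Café!"))  # let s go cafe !
```

The apostrophe in "Let's" becomes a space. That is why the corpus contains tokens such as `let s` and `that s`.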

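Another tactic that helps training converge faster is trimming rarely used words out of our vocabulary. Decreasing the feature space also makes the function the model must learn to approximate less difficult. We do this in two steps:

1. Trim words used fewer than MIN_COUNT times using the voc.trim function.

2. Filter out pairs containing trimmed words.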

MIN_COUNT = 3  # Minimum word count threshold for trimming

def trimRareWords(voc, pairs, MIN_COUNT):
    # Trim words used under the MIN_COUNT from the voc
    voc.trim(MIN_COUNT)
    # Filter out pairs with trimmed words
    keep_pairs = []
    for pair in pairs:
        input_sentence = pair[0]
        output_sentence = pair[1]
        keep_input = True
        keep_output = True
        # Check input sentence
        for word in input_sentence.split(' '):
            if word not in voc.word2index:
                keep_input = False
                break
        # Check output sentence
        for word in output_sentence.split(' '):
            if word not in voc.word2index:
                keep_output = False
                break

        # Only keep pairs that do not contain trimmed word(s) in their input or output sentence
        if keep_input and keep_output:
            keep_pairs.append(pair)

    print("Trimmed from {} pairs to {}, {:.4f} of total".format(len(pairs), len(keep_pairs), len(keep_pairs) / len(pairs)))
    return keep_pairs


# Trim voc and pairs
pairs = trimRareWords(voc, pairs, MIN_COUNT)

keep_words 7833 / 18079 = 0.4333

Trimmed from 64313 pairs to 53131, 0.8261 of total
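The two-step trim can also be written with `collections.Counter`. This sketch uses a hypothetical function name and toy data. Like `loadPrepareData` (through `addSentence`), it counts words on both sides of every pair. It then keeps words seen at least `MIN_COUNT` times and drops any pair containing a discarded word. Unlike `Voc.trim`, it does not rebuild the word-to-index mapping.

```python
from collections import Counter

MIN_COUNT = 3


def trim_rare_words(pairs, min_count):
    """Return the kept vocabulary and the pairs whose words are all in it."""
    counts = Counter(word for pair in pairs for sentence in pair for word in sentence.split(' '))
    keep = {word for word, count in counts.items() if count >= min_count}
    kept_pairs = [pair for pair in pairs
                  if all(word in keep for sentence in pair for word in sentence.split(' '))]
    return keep, kept_pairs


toy_pairs = [["hi there", "hi"], ["hi there", "there"], ["hi yo", "there"]]
vocab, kept = trim_rare_words(toy_pairs, MIN_COUNT)
print(sorted(vocab), kept)
```

"hi" and "there" each occur four times, but "yo" occurs only once. The third pair is therefore dropped even though its other words survive.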

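Prepare Data for Models

We have put a lot of effort into massaging our data into a vocabulary object and a list of sentence pairs. Our models, however, ultimately expect numerical torch tensors as inputs. One way to prepare the processed data for the models can be found in the seq2seq translation tutorial. That tutorial uses a batch size of 1, so all we have to do is convert the words in our sentence pairs to their corresponding vocabulary indexes and feed them to the models.

However, if you want to speed up training or take advantage of GPU parallelization, you will need to train with mini-batches.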

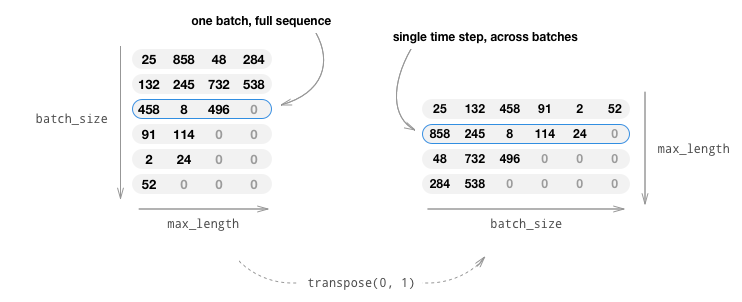

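Using mini-batches also means that we must be mindful of the variation in sentence length within a batch. To accommodate sentences of different sizes in the same batch, we make our batched input tensor of shape (max_length, batch_size). Sentences shorter than max_length are zero-padded after an EOS_token.

Suppose we simply convert our English sentences to tensors by converting words to their indexes (indexesFromSentence) and zero-padding. The tensor would then have shape (batch_size, max_length), and indexing the first dimension would return a full sequence across all time steps. However, we need to be able to index our batch along time, across all sequences in the batch. Therefore, we transpose the input batch shape to (max_length, batch_size), so that indexing the first dimension returns one time step across all sentences in the batch. We handle this transpose implicitly in the zeroPadding function.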
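To see the transpose that `zeroPadding` performs, here is a pure-Python sketch on a made-up batch of three index sequences. It uses `PAD_token = 0` and `EOS_token = 2`, as in this tutorial. `itertools.zip_longest` walks the sequences in lockstep, so each resulting tuple is one time step across the whole batch. This gives the (max_length, batch_size) layout. The last step builds the same kind of padding mask as `binaryMatrix`, using booleans here.

```python
import itertools

PAD_token, EOS_token = 0, 2

# Hypothetical index sequences for a batch of 3 sentences, each ending in EOS
batch = [[5, 6, 7, EOS_token], [8, 9, EOS_token], [4, EOS_token]]

# Each tuple produced by zip_longest(*batch) is one time step: shape (max_length, batch_size)
padded = list(itertools.zip_longest(*batch, fillvalue=PAD_token))

# Mask is True for real tokens and False for padding
mask = [[token != PAD_token for token in step] for step in padded]

for step, step_mask in zip(padded, mask):
    print(step, step_mask)
```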

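The inputVar function converts sentences to tensors, ultimately creating a correctly shaped zero-padded tensor. It also returns a lengths tensor holding the length of each sequence in the batch. This tensor is later passed to our encoder, which needs it to pack the padded batch.

The outputVar function works like inputVar, but instead of a lengths tensor it returns a binary mask tensor and the maximum target sentence length. The binary mask tensor has the same shape as the output target tensor. Every element corresponding to a PAD_token is 0 (False), and all others are 1 (True).

batch2TrainData simply takes a batch of pairs and returns the input and target tensors using the functions above.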

def indexesFromSentence(voc, sentence):
    return [voc.word2index[word] for word in sentence.split(' ')] + [EOS_token]


def zeroPadding(l, fillvalue=PAD_token):
    return list(itertools.zip_longest(*l, fillvalue=fillvalue))

def binaryMatrix(l, value=PAD_token):
    m = []
    for i, seq in enumerate(l):
        m.append([])
        for token in seq:
            if token == PAD_token:
                m[i].append(0)
            else:
                m[i].append(1)
    return m

# Returns padded input sequence tensor and lengths
def inputVar(l, voc):
    indexes_batch = [indexesFromSentence(voc, sentence) for sentence in l]
    lengths = torch.tensor([len(indexes) for indexes in indexes_batch])
    padList = zeroPadding(indexes_batch)
    padVar = torch.LongTensor(padList)
    return padVar, lengths

# Returns padded target sequence tensor, padding mask, and max target length
def outputVar(l, voc):
    indexes_batch = [indexesFromSentence(voc, sentence) for sentence in l]
    max_target_len = max([len(indexes) for indexes in indexes_batch])
    padList = zeroPadding(indexes_batch)
    mask = binaryMatrix(padList)
    mask = torch.BoolTensor(mask)
    padVar = torch.LongTensor(padList)
    return padVar, mask, max_target_len

# Returns all items for a given batch of pairs
def batch2TrainData(voc, pair_batch):
    pair_batch.sort(key=lambda x: len(x[0].split(" ")), reverse=True)
    input_batch, output_batch = [], []
    for pair in pair_batch:
        input_batch.append(pair[0])
        output_batch.append(pair[1])
    inp, lengths = inputVar(input_batch, voc)
    output, mask, max_target_len = outputVar(output_batch, voc)
    return inp, lengths, output, mask, max_target_len


# Example for validation
small_batch_size = 5
batches = batch2TrainData(voc, [random.choice(pairs) for _ in range(small_batch_size)])
input_variable, lengths, target_variable, mask, max_target_len = batches

print("input_variable:", input_variable)
print("lengths:", lengths)
print("target_variable:", target_variable)
print("mask:", mask)
print("max_target_len:", max_target_len)

input_variable: tensor([[ 287, 26, 26, 36, 1040],

[ 288, 1012, 411, 17, 14],

[ 167, 235, 606, 358, 5455],

[ 14, 1012, 14, 14, 6],

[1361, 14, 2, 2, 2],

[ 92, 2, 0, 0, 0],

[ 14, 0, 0, 0, 0],

[ 2, 0, 0, 0, 0]])

lengths: tensor([8, 6, 5, 5, 5])

target_variable: tensor([[ 186, 11, 79, 6229, 6884],

[ 124, 44, 411, 19, 6],

[ 172, 228, 2716, 362, 2],

[ 141, 28, 105, 5, 0],

[ 14, 929, 2717, 22, 0],

[ 2, 14, 14, 1001, 0],

[ 0, 2, 2, 10, 0],

[ 0, 0, 0, 2, 0]])

mask: tensor([[ True, True, True, True, True],

[ True, True, True, True, True],

[ True, True, True, True, True],

[ True, True, True, True, False],

[ True, True, True, True, False],

[ True, True, True, True, False],

[False, True, True, True, False],

[False, False, False, True, False]])

max_target_len: 8

定义模型#

Seq2Seq 模型#

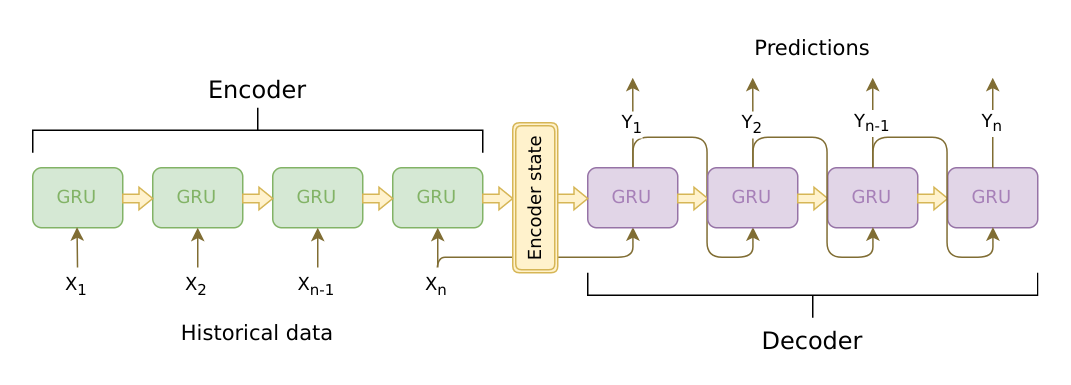

我们聊天机器人的核心是序列到序列(seq2seq)模型。seq2seq 模型的目标是接收一个可变长度的序列作为输入,并使用固定大小的模型返回一个可变长度的序列作为输出。

Sutskever 等人 发现,通过将两个独立的循环神经网络结合使用,我们可以完成这项任务。一个 RNN 充当**编码器**,它将可变长度的输入序列编码为固定长度的上下文向量。理论上,这个上下文向量(RNN 的最后一个隐藏层)将包含输入到机器人的查询句子的语义信息。第二个 RNN 是一个**解码器**,它接收一个输入单词和上下文向量,并返回对序列中下一个单词的猜测以及将在下一个迭代中使用的隐藏状态。

图片来源: https://jeddy92.github.io/JEddy92.github.io/ts_seq2seq_intro/

编码器#

编码器 RNN 一次处理输入句子中的一个 token(例如,单词),在每个时间步输出一个“输出”向量和一个“隐藏状态”向量。隐藏状态向量然后传递到下一个时间步,而输出向量被记录下来。编码器将序列中每个点的上下文转换为高维空间中的一组点,解码器将使用这些点来生成给定任务的有意义的输出。

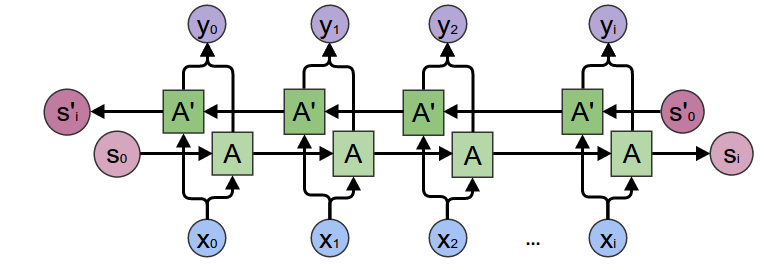

我们编码器的核心是多层门控循环单元(GRU),由 Cho 等人 于 2014 年发明。我们将使用 GRU 的双向变体,这意味着实际上有两个独立的 RNN:一个以正常顺序处理输入序列,另一个以反向顺序处理输入序列。每个网络的输出在每个时间步相加。使用双向 GRU 将使我们能够同时编码过去和未来的上下文。

双向 RNN

图片来源: https://colah.github.io/posts/2015-09-NN-Types-FP/

请注意,embedding 层用于将我们的单词索引编码到任意大小的特征空间中。对于我们的模型,此层会将每个单词映射到大小为 *hidden_size* 的特征空间。训练后,这些值应编码相似含义单词之间的语义相似性。

最后,如果将填充后的序列批次传递给 RNN 模块,我们必须在 RNN 传递周围使用 nn.utils.rnn.pack_padded_sequence 和 nn.utils.rnn.pad_packed_sequence 来打包和解包填充。
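To see what packing saves, take the lengths from the example batch printed earlier ([8, 6, 5, 5, 5]). A packed sequence stores only the real (non-pad) time steps. As we understand the `PackedSequence` container, its `batch_sizes` field records how many sequences are still active at each time step. This pure-Python sketch (hypothetical helper name) computes those counts without PyTorch. It illustrates the bookkeeping and is not PyTorch's implementation.

```python
def packed_batch_sizes(lengths):
    """Count how many sequences are still active (not yet padding) at each time step.

    `lengths` must be sorted in decreasing order, which is the order batch2TrainData
    produces and the order pack_padded_sequence expects by default.
    """
    if list(lengths) != sorted(lengths, reverse=True):
        raise ValueError("lengths must be sorted in decreasing order")
    return [sum(1 for n in lengths if n > t) for t in range(max(lengths))]


lengths = [8, 6, 5, 5, 5]  # lengths of the example batch printed earlier
sizes = packed_batch_sizes(lengths)
print(sizes)       # [5, 5, 5, 5, 5, 2, 1, 1]
print(sum(sizes))  # 29 real tokens, versus 8 * 5 = 40 padded slots
```

This requirement is why `batch2TrainData` sorts each batch by input length, longest first.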

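Computation Graph:

1. Convert word indexes to embeddings.

2. Pack padded batch of sequences for RNN module.

3. Forward pass through GRU.

4. Unpack padding.

5. Sum bidirectional GRU outputs.

6. Return output and final hidden state.

Inputs:

input_seq: batch of input sentences; shape=(max_length, batch_size)

input_lengths: list of sentence lengths corresponding to each sentence in the batch; shape=(batch_size)

hidden: hidden state; shape=(n_layers x num_directions, batch_size, hidden_size)

Outputs:

outputs: output features from the last hidden layer of the GRU (sum of bidirectional outputs); shape=(max_length, batch_size, hidden_size)

hidden: updated hidden state from GRU; shape=(n_layers x num_directions, batch_size, hidden_size)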
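The summation step (5) is easiest to see on one time step of one sequence. With `bidirectional=True`, `nn.GRU` concatenates the forward and backward features along the last dimension, forward first. A step's output therefore has `2 * hidden_size` values. Slicing at `hidden_size` and adding the halves is what the sum line in `EncoderRNN.forward` does. This sketch uses plain lists and made-up numbers.

```python
hidden_size = 3

# Hypothetical output of one time step for one sequence: 2 * hidden_size values,
# forward-direction features first, backward-direction features second
step_output = [1, 2, 3, 10, 20, 30]

forward_part = step_output[:hidden_size]   # like outputs[:, :, :hidden_size]
backward_part = step_output[hidden_size:]  # like outputs[:, :, hidden_size:]
summed = [f + b for f, b in zip(forward_part, backward_part)]
print(summed)  # [11, 22, 33]
```

Summing rather than concatenating keeps the encoder outputs at `hidden_size` features. This matches the size that the attention layer and the decoder's GRU output work with.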

class EncoderRNN(nn.Module):
    def __init__(self, hidden_size, embedding, n_layers=1, dropout=0):
        super(EncoderRNN, self).__init__()
        self.n_layers = n_layers
        self.hidden_size = hidden_size
        self.embedding = embedding

        # Initialize GRU; the input_size and hidden_size parameters are both set to 'hidden_size'
        #   because our input size is a word embedding with number of features == hidden_size
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers,
                          dropout=(0 if n_layers == 1 else dropout), bidirectional=True)

    def forward(self, input_seq, input_lengths, hidden=None):
        # Convert word indexes to embeddings
        embedded = self.embedding(input_seq)
        # Pack padded batch of sequences for RNN module
        packed = nn.utils.rnn.pack_padded_sequence(embedded, input_lengths)
        # Forward pass through GRU
        outputs, hidden = self.gru(packed, hidden)
        # Unpack padding
        outputs, _ = nn.utils.rnn.pad_packed_sequence(outputs)
        # Sum bidirectional GRU outputs
        outputs = outputs[:, :, :self.hidden_size] + outputs[:, :, self.hidden_size:]
        # Return output and final hidden state
        return outputs, hidden

解码器#

解码器 RNN 以逐个 token 的方式生成响应句子。它使用编码器的上下文向量和内部隐藏状态来生成序列中的下一个单词。它继续生成单词,直到输出EOS_token,表示句子结束。标准的 seq2seq 解码器的一个常见问题是,如果我们仅依赖上下文向量来编码整个输入序列的含义,很可能会丢失信息。特别是当处理长输入序列时,这会极大地限制我们解码器的能力。

为了解决这个问题,Bahdanau 等人 创建了一个“注意力机制”,该机制允许解码器关注输入序列的某些部分,而不是在每一步都使用整个固定上下文。

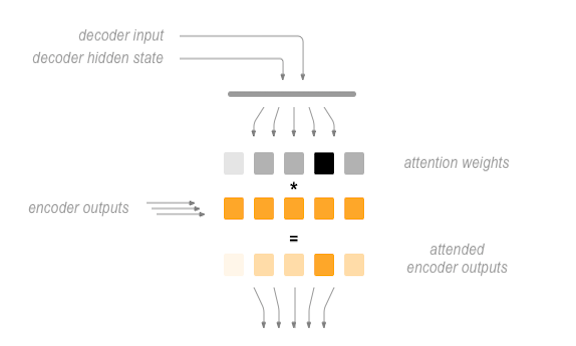

总的来说,注意力是通过解码器的当前隐藏状态和编码器的输出来计算的。输出的注意力权重与输入序列的形状相同,允许我们将它们乘以编码器的输岀,从而得到一个加权和,表示需要关注的编码器输出部分。 Sean Robertson 的图很好地描述了这一点。

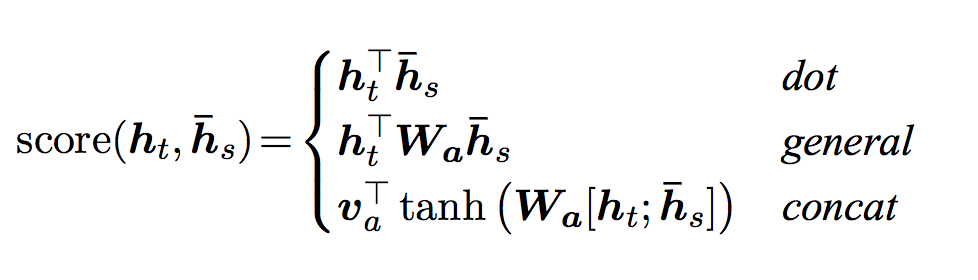

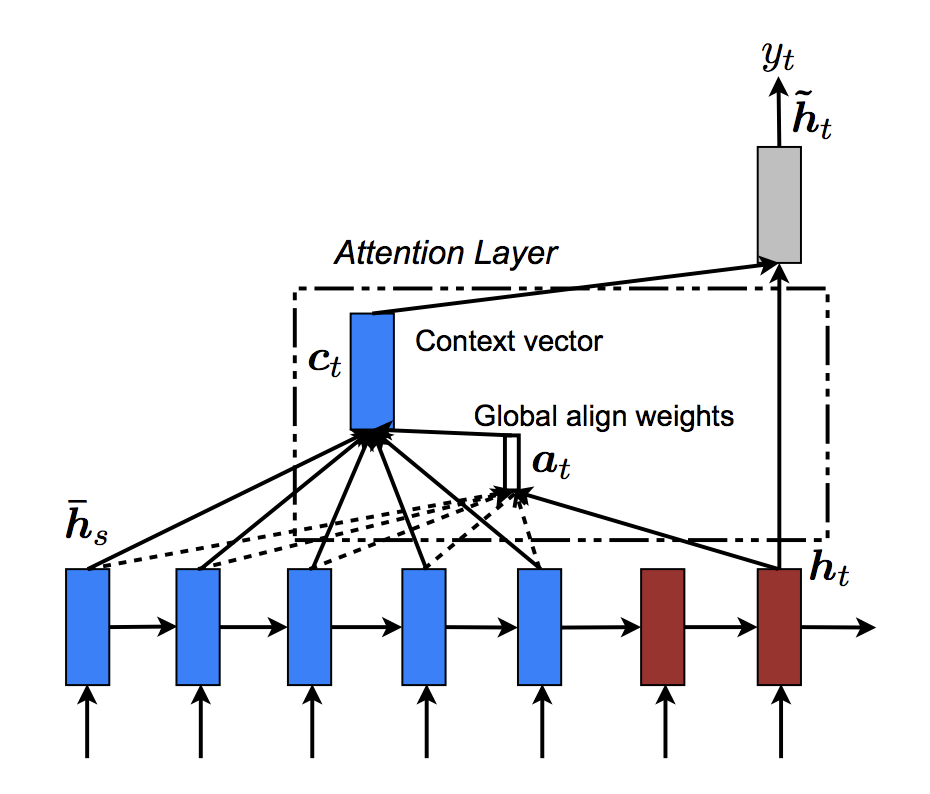

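Luong et al. improved upon Bahdanau et al.'s groundwork by creating "Global attention". Like Bahdanau et al.'s attention, "Global attention" considers all of the encoder's hidden states. Luong et al. also describe a "Local attention" variant that considers only a window of encoder states around the current position.

One difference from Bahdanau et al. concerns the decoder state used. With "Global attention", we calculate attention weights, or energies, using only the decoder's hidden state from the current time step. Bahdanau et al.'s attention calculation requires the decoder's state from the previous time step.

Luong et al. also provide several methods, called "score functions", for calculating the attention energies between the encoder output and the decoder output: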

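\[
\operatorname{score}(h_t, \bar{h}_s) =
\begin{cases}
h_t^{\top} \bar{h}_s & \text{dot} \\
h_t^{\top} W_a \bar{h}_s & \text{general} \\
v_a^{\top} \tanh\left(W_a [h_t ; \bar{h}_s]\right) & \text{concat}
\end{cases}
\]

where \(h_t\) = current target decoder state and \(\bar{h}_s\) = all encoder states.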
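The three score functions can be checked by hand on tiny vectors. This pure-Python sketch implements them with lists; the 2-dimensional states and weight values are made up. It normalizes the dot scores with a softmax over the encoder positions and forms the weighted-sum context vector. The `nn.Linear` layers in the `Attn` module also add a bias term, which this sketch omits.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(W, v):
    """Matrix (given as a list of rows) times vector."""
    return [dot(row, v) for row in W]


def score_dot(h_t, h_s):
    return dot(h_t, h_s)  # h_t^T h_s


def score_general(h_t, h_s, W_a):
    return dot(h_t, matvec(W_a, h_s))  # h_t^T W_a h_s


def score_concat(h_t, h_s, W_a, v_a):
    # h_t + h_s is list concatenation, i.e. [h_t; h_s]; W_a maps 2*d values to d values
    return dot(v_a, [math.tanh(x) for x in matvec(W_a, h_t + h_s)])


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 2-dimensional decoder state and three encoder states
h_t = [1.0, 0.0]
encoder_states = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

weights = softmax([score_dot(h_t, h_s) for h_s in encoder_states])
# Context vector: attention-weighted sum of the encoder states
context = [sum(w * h_s[i] for w, h_s in zip(weights, encoder_states))
           for i in range(len(h_t))]
print([round(w, 3) for w in weights], context)
```

The encoder state most aligned with `h_t` receives the largest weight. The context vector is therefore pulled toward it.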

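Overall, the Global attention mechanism can be summarized by the following figure. Note that we will implement the "Attention Layer" as a separate nn.Module called Attn. The output of this module is a softmax-normalized weights tensor of shape (batch_size, 1, max_length).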

# Luong attention layer
class Attn(nn.Module):
    def __init__(self, method, hidden_size):
        super(Attn, self).__init__()
        self.method = method
        if self.method not in ['dot', 'general', 'concat']:
            raise ValueError(self.method, "is not an appropriate attention method.")
        self.hidden_size = hidden_size
        if self.method == 'general':
            self.attn = nn.Linear(self.hidden_size, hidden_size)
        elif self.method == 'concat':
            self.attn = nn.Linear(self.hidden_size * 2, hidden_size)
            # torch.FloatTensor(n) would leave v uninitialized, so give it explicit initial values
            self.v = nn.Parameter(torch.rand(hidden_size))

    def dot_score(self, hidden, encoder_output):
        return torch.sum(hidden * encoder_output, dim=2)

    def general_score(self, hidden, encoder_output):
        energy = self.attn(encoder_output)
        return torch.sum(hidden * energy, dim=2)

    def concat_score(self, hidden, encoder_output):
        energy = self.attn(torch.cat((hidden.expand(encoder_output.size(0), -1, -1), encoder_output), 2)).tanh()
        return torch.sum(self.v * energy, dim=2)

    def forward(self, hidden, encoder_outputs):
        # Calculate the attention weights (energies) based on the given method
        if self.method == 'general':
            attn_energies = self.general_score(hidden, encoder_outputs)
        elif self.method == 'concat':
            attn_energies = self.concat_score(hidden, encoder_outputs)
        elif self.method == 'dot':
            attn_energies = self.dot_score(hidden, encoder_outputs)

        # Transpose max_length and batch_size dimensions
        attn_energies = attn_energies.t()

        # Return the softmax normalized probability scores (with added dimension)
        return F.softmax(attn_energies, dim=1).unsqueeze(1)

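Now that we have defined our attention submodule, we can implement the actual decoder model. For the decoder, we manually feed our batch one time step at a time. This means that our embedded word tensor and GRU output will both have shape (1, batch_size, hidden_size).

Computation Graph:

1. Get embedding of current input word.

2. Forward through unidirectional GRU.

3. Calculate attention weights from the current GRU output from (2).

4. Multiply attention weights by the encoder outputs to get a new "weighted sum" context vector.

5. Concatenate weighted context vector and GRU output using Luong eq. 5.

6. Predict next word using Luong eq. 6: a linear projection followed by a softmax over the vocabulary.

7. Return output and final hidden state.

Inputs:

input_step: one time step (one word) of input sequence batch; shape=(1, batch_size)

last_hidden: final hidden layer of GRU; shape=(n_layers x num_directions, batch_size, hidden_size)

encoder_outputs: encoder model's output; shape=(max_length, batch_size, hidden_size)

Outputs:

output: softmax-normalized tensor giving the probability of each word being the correct next word in the decoded sequence; shape=(batch_size, voc.num_words)

hidden: final hidden state of GRU; shape=(n_layers x num_directions, batch_size, hidden_size)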

class LuongAttnDecoderRNN(nn.Module):
    def __init__(self, attn_model, embedding, hidden_size, output_size, n_layers=1, dropout=0.1):
        super(LuongAttnDecoderRNN, self).__init__()

        # Keep for reference
        self.attn_model = attn_model
        self.hidden_size = hidden_size
        self.output_size = output_size
        self.n_layers = n_layers
        self.dropout = dropout

        # Define layers
        self.embedding = embedding
        self.embedding_dropout = nn.Dropout(dropout)
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers, dropout=(0 if n_layers == 1 else dropout))
        self.concat = nn.Linear(hidden_size * 2, hidden_size)
        self.out = nn.Linear(hidden_size, output_size)

        self.attn = Attn(attn_model, hidden_size)

    def forward(self, input_step, last_hidden, encoder_outputs):
        # Note: we run this one step (word) at a time
        # Get embedding of current input word
        embedded = self.embedding(input_step)
        embedded = self.embedding_dropout(embedded)
        # Forward through unidirectional GRU
        rnn_output, hidden = self.gru(embedded, last_hidden)
        # Calculate attention weights from the current GRU output
        attn_weights = self.attn(rnn_output, encoder_outputs)
        # Multiply attention weights to encoder outputs to get new "weighted sum" context vector
        context = attn_weights.bmm(encoder_outputs.transpose(0, 1))
        # Concatenate weighted context vector and GRU output using Luong eq. 5
        rnn_output = rnn_output.squeeze(0)
        context = context.squeeze(1)
        concat_input = torch.cat((rnn_output, context), 1)
        concat_output = torch.tanh(self.concat(concat_input))
        # Predict next word using Luong eq. 6
        output = self.out(concat_output)
        output = F.softmax(output, dim=1)
        # Return output and final hidden state
        return output, hidden

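Define Training Procedure

Masked loss

Since we are dealing with batches of padded sequences, we cannot simply consider all elements of the tensor when calculating the loss. We define maskNLLLoss to calculate our loss from three inputs: the decoder's output tensor, the target tensor, and a binary mask tensor describing the padding of the target tensor. This loss function calculates the average negative log likelihood of the elements that correspond to a *1* in the mask tensor.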
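The masked loss for one decoder time step can be restated without tensors. In this pure-Python sketch, with a hypothetical function name and toy probabilities, picking `p[t]` mirrors the `torch.gather` call. Skipping masked-out positions mirrors `masked_select`, and dividing by the number of kept positions mirrors `.mean()`. The returned count plays the role of `nTotal`.

```python
import math


def mask_nll_loss(probs, targets, mask):
    """Average negative log-likelihood over the unmasked positions of one time step.

    probs:   per-sequence probability distributions over the vocabulary (batch x vocab)
    targets: gold word index for each sequence in the batch
    mask:    True where the target is a real token, False where it is padding
    """
    losses = [-math.log(p[t]) for p, t, m in zip(probs, targets, mask) if m]
    return sum(losses) / len(losses), len(losses)


probs = [[0.1, 0.7, 0.2],
         [0.5, 0.25, 0.25],
         [0.9, 0.05, 0.05]]
targets = [1, 0, 0]         # the third sequence is already padding here (PAD_token = 0)
mask = [True, True, False]
loss, n_total = mask_nll_loss(probs, targets, mask)
print(loss, n_total)
```

The padded third sequence would have contributed a small loss of -log(0.9), which would mislead training. The mask keeps it out of the average.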

def maskNLLLoss(inp, target, mask):
    nTotal = mask.sum()
    crossEntropy = -torch.log(torch.gather(inp, 1, target.view(-1, 1)).squeeze(1))
    loss = crossEntropy.masked_select(mask).mean()
    loss = loss.to(device)
    return loss, nTotal.item()

单次训练迭代#

train 函数包含单次训练迭代(单个输入批次)的算法。

我们将使用一些巧妙的技巧来帮助模型收敛。

第一个技巧是使用**教师强制**。这意味着在由

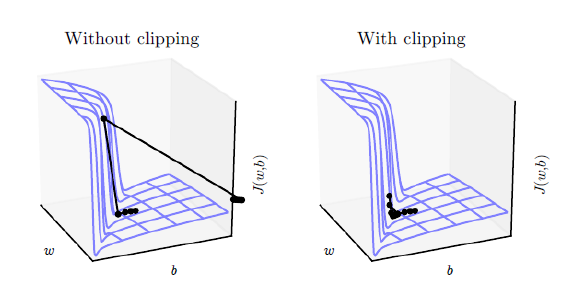

teacher_forcing_ratio设置的某个概率下,我们将当前目标单词用作解码器的下一个输入,而不是使用解码器当前的猜测。此技术充当解码器的“辅助轮”,有助于更有效地进行训练。但是,教师强制可能会导致模型在推理过程中不稳定,因为解码器在训练期间可能没有足够的机会真正生成自己的输出序列。因此,我们必须注意我们如何设置teacher_forcing_ratio,并且不要被快速收敛所迷惑。我们实现的第二个技巧是**梯度裁剪**。这是对抗“梯度爆炸”问题的常用技术。本质上,通过将梯度裁剪或阈值化到最大值,我们可以防止梯度呈指数增长,从而导致溢出(NaN)或在成本函数中越过陡峭的悬崖。

图片来源: Goodfellow 等人Deep Learning。2016。 https://www.deeplearningbook.org/
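Norm-based clipping is easiest to see on a flat list of gradient values. This sketch (hypothetical helper name) computes one L2 norm over all gradients together. If that norm exceeds `max_norm`, it scales every gradient by the same factor. To our understanding, this follows the formula used by `nn.utils.clip_grad_norm_`, which scales by `max_norm / (total_norm + 1e-6)` clamped to at most 1. The small epsilon here is taken from that understanding and should be treated as an assumption.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale all gradients together so that their combined L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:  # only ever shrink gradients, never enlarge them
        grads = [g * clip_coef for g in grads]
    return grads, total_norm


clipped, norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(norm, clipped)  # the norm is 5.0; clipped is approximately [0.6, 0.8]
```

Because every gradient is scaled by the same factor, the direction of the update is preserved and only its length shrinks.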

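Sequence of Operations:

1. Forward pass the entire input batch through the encoder.

2. Initialize the decoder inputs as SOS_token, and the hidden state as the encoder's final hidden state.

3. Forward the input batch sequence through the decoder one time step at a time.

4. If teacher forcing: set the next decoder input to the current target; else: set the next decoder input to the current decoder output.

5. Calculate and accumulate loss.

6. Perform backpropagation.

7. Clip gradients.

8. Update encoder and decoder model parameters.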
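The teacher-forcing branch in step 4 only changes which token is fed into the next decoder step. This sketch, with a hypothetical helper and token indices, shows that choice for one sequence. Like `train`, it draws a single random number per call, so a whole batch is either fully teacher-forced or not at all. In the real loop each prediction depends on the inputs fed before it, so the precomputed `predictions` list is a simplification.

```python
import random


def decoder_inputs(targets, predictions, teacher_forcing_ratio, sos=1, rng=random):
    """Return the token fed to the decoder at each step and whether teacher forcing was used.

    With teacher forcing, the gold token from step t is the input at step t+1;
    otherwise the model's own prediction from step t is fed back in.
    """
    use_teacher_forcing = rng.random() < teacher_forcing_ratio
    source = targets if use_teacher_forcing else predictions
    return [sos] + source[:-1], use_teacher_forcing


targets = [10, 11, 12, 2]      # hypothetical gold indices ending in EOS (2)
predictions = [10, 15, 12, 2]  # hypothetical argmax outputs of the decoder
inputs, forced = decoder_inputs(targets, predictions, teacher_forcing_ratio=1.0)
print(inputs, forced)  # [1, 10, 11, 12] True
```

With `teacher_forcing_ratio=0.0` the same call feeds back the prediction 15 at the third step. This is how early mistakes propagate when the decoder runs without teacher forcing.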

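Note

PyTorch's RNN modules (RNN, LSTM, GRU) can be used like any other non-recurrent layer by simply passing them the entire input sequence (or batch of sequences). We use the GRU layer this way in the encoder. Under the hood, an iterative process loops over each time step, calculating hidden states. Alternatively, you can run these modules one time step at a time. In that case, we manually loop over the sequences during training, as we must do for the decoder model. As long as you keep the correct conceptual model of these modules, implementing sequential models can be very straightforward.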

```python
def train(input_variable, lengths, target_variable, mask, max_target_len, encoder, decoder, embedding,
          encoder_optimizer, decoder_optimizer, batch_size, clip, max_length=MAX_LENGTH):

    # Zero gradients
    encoder_optimizer.zero_grad()
    decoder_optimizer.zero_grad()

    # Set device options
    input_variable = input_variable.to(device)
    target_variable = target_variable.to(device)
    mask = mask.to(device)
    # Lengths for RNN packing should always be on the CPU
    lengths = lengths.to("cpu")

    # Initialize variables
    loss = 0
    print_losses = []
    n_totals = 0

    # Forward pass through encoder
    encoder_outputs, encoder_hidden = encoder(input_variable, lengths)

    # Create initial decoder input (start with SOS tokens for each sentence)
    decoder_input = torch.LongTensor([[SOS_token for _ in range(batch_size)]])
    decoder_input = decoder_input.to(device)

    # Set initial decoder hidden state to the encoder's final hidden state
    decoder_hidden = encoder_hidden[:decoder.n_layers]

    # Determine if we are using teacher forcing this iteration
    use_teacher_forcing = random.random() < teacher_forcing_ratio

    # Forward batch of sequences through decoder one time step at a time
    if use_teacher_forcing:
        for t in range(max_target_len):
            decoder_output, decoder_hidden = decoder(
                decoder_input, decoder_hidden, encoder_outputs
            )
            # Teacher forcing: next input is current target
            decoder_input = target_variable[t].view(1, -1)
            # Calculate and accumulate loss
            mask_loss, nTotal = maskNLLLoss(decoder_output, target_variable[t], mask[t])
            loss += mask_loss
            print_losses.append(mask_loss.item() * nTotal)
            n_totals += nTotal
    else:
        for t in range(max_target_len):
            decoder_output, decoder_hidden = decoder(
                decoder_input, decoder_hidden, encoder_outputs
            )
            # No teacher forcing: next input is decoder's own current output
            _, topi = decoder_output.topk(1)
            decoder_input = torch.LongTensor([[topi[i][0] for i in range(batch_size)]])
            decoder_input = decoder_input.to(device)
            # Calculate and accumulate loss
            mask_loss, nTotal = maskNLLLoss(decoder_output, target_variable[t], mask[t])
            loss += mask_loss
            print_losses.append(mask_loss.item() * nTotal)
            n_totals += nTotal

    # Perform backpropagation
    loss.backward()

    # Clip gradients: gradients are modified in place
    _ = nn.utils.clip_grad_norm_(encoder.parameters(), clip)
    _ = nn.utils.clip_grad_norm_(decoder.parameters(), clip)

    # Adjust model weights
    encoder_optimizer.step()
    decoder_optimizer.step()

    return sum(print_losses) / n_totals
```
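The value train returns is a token-weighted average rather than a plain mean over time steps: each step's masked loss is multiplied by nTotal (the number of non-padding target tokens at that step) before summing, and the total is divided by n_totals. The pure-Python sketch below reproduces only that bookkeeping with made-up per-step numbers; the helpers token_weighted_loss and naive_step_mean are hypothetical and not part of the tutorial.

```python
def token_weighted_loss(step_losses):
    """step_losses: list of (mean_loss_at_step, n_real_tokens_at_step) pairs.

    Mirrors the bookkeeping in train(): accumulate loss * nTotal per step,
    then divide by the total number of real (non-padding) tokens.
    """
    print_losses = []
    n_totals = 0
    for mask_loss, n_total in step_losses:
        print_losses.append(mask_loss * n_total)
        n_totals += n_total
    return sum(print_losses) / n_totals


def naive_step_mean(step_losses):
    """Unweighted mean over time steps, shown only for comparison."""
    return sum(loss for loss, _ in step_losses) / len(step_losses)


# Hypothetical batch: at step 0 all 4 sequences still have a real token,
# at step 1 only 1 sequence is not yet padding.
steps = [(2.0, 4), (1.0, 1)]
print(token_weighted_loss(steps))  # 1.8
print(naive_step_mean(steps))      # 1.5
```

Because later time steps usually contain fewer real tokens, a naive per-step mean would give the few long sequences in a batch as much weight as all the short ones; the weighted form counts every real token exactly once.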

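Training iterations

It is finally time to tie the full training procedure together with the data. The trainIters function is responsible for running n_iteration iterations of training, given the passed models, optimizers, data, and so on. It samples all n_iteration training batches up front (each built from batch_size randomly chosen pairs), then calls train once per iteration, printing the average loss every print_every iterations and saving a checkpoint every save_every iterations. The function is fairly self-explanatory, since the heavy lifting is already done in the train function.

One thing to note is that when we save our model, we write a single checkpoint file (named with a .tar extension) containing the encoder and decoder state_dicts (parameters), the optimizers' state_dicts, the loss, the iteration number, the vocabulary, and the embedding's state_dict. Saving the model this way gives us the most flexibility with the checkpoint: after loading it, we can either use the model parameters to run inference or continue training right where we left off.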
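The checkpoint location used by trainIters follows a fixed pattern: save_dir/model_name/corpus_name/{encoder_n_layers}-{decoder_n_layers}_{hidden_size}/{iteration}_checkpoint.tar, and resuming starts at checkpoint['iteration'] + 1. The sketch below rebuilds both rules in plain Python. The save_dir and corpus_name values are placeholders, the helpers checkpoint_path and remaining_iterations are hypothetical, and the checkpoint is a plain dict standing in for what torch.load would return.

```python
import os


def checkpoint_path(save_dir, model_name, corpus_name,
                    encoder_n_layers, decoder_n_layers, hidden_size, iteration):
    """Build the same path that trainIters uses when saving a checkpoint."""
    directory = os.path.join(save_dir, model_name, corpus_name,
                             '{}-{}_{}'.format(encoder_n_layers, decoder_n_layers, hidden_size))
    return os.path.join(directory, '{}_{}.tar'.format(iteration, 'checkpoint'))


def remaining_iterations(checkpoint, n_iteration):
    """Iterations trainIters would still run, resuming after `checkpoint` if given."""
    start_iteration = checkpoint['iteration'] + 1 if checkpoint else 1
    return range(start_iteration, n_iteration + 1)


# Placeholder values; the real ones come from the tutorial's configuration.
path = checkpoint_path('data/save', 'cb_model', 'movie-corpus', 2, 2, 500, 4000)
print(path)  # data/save/cb_model/movie-corpus/2-2_500/4000_checkpoint.tar on POSIX systems

resumed = remaining_iterations({'iteration': 500}, n_iteration=4000)
print(resumed.start, resumed.stop - 1)  # 501 4000
```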

```python
def trainIters(model_name, voc, pairs, encoder, decoder, encoder_optimizer, decoder_optimizer, embedding, encoder_n_layers, decoder_n_layers, save_dir, n_iteration, batch_size, print_every, save_every, clip, corpus_name, loadFilename):

    # Load batches for each iteration
    training_batches = [batch2TrainData(voc, [random.choice(pairs) for _ in range(batch_size)])
                        for _ in range(n_iteration)]

    # Initializations
    print('Initializing ...')
    start_iteration = 1
    print_loss = 0
    if loadFilename:
        start_iteration = checkpoint['iteration'] + 1

    # Training loop
    print("Training...")
    for iteration in range(start_iteration, n_iteration + 1):
        training_batch = training_batches[iteration - 1]
        # Extract fields from batch
        input_variable, lengths, target_variable, mask, max_target_len = training_batch

        # Run a training iteration with batch
        loss = train(input_variable, lengths, target_variable, mask, max_target_len, encoder,
                     decoder, embedding, encoder_optimizer, decoder_optimizer, batch_size, clip)
        print_loss += loss

        # Print progress
        if iteration % print_every == 0:
            print_loss_avg = print_loss / print_every
            print("Iteration: {}; Percent complete: {:.1f}%; Average loss: {:.4f}".format(iteration, iteration / n_iteration * 100, print_loss_avg))
            print_loss = 0

        # Save checkpoint
        if iteration % save_every == 0:
            directory = os.path.join(save_dir, model_name, corpus_name, '{}-{}_{}'.format(encoder_n_layers, decoder_n_layers, hidden_size))
            if not os.path.exists(directory):
                os.makedirs(directory)
            torch.save({
                'iteration': iteration,
                'en': encoder.state_dict(),
                'de': decoder.state_dict(),
                'en_opt': encoder_optimizer.state_dict(),
                'de_opt': decoder_optimizer.state_dict(),
                'loss': loss,
                'voc_dict': voc.__dict__,
                'embedding': embedding.state_dict()
            }, os.path.join(directory, '{}_{}.tar'.format(iteration, 'checkpoint')))
```

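Define Evaluation

After training a model, we want to be able to talk to the bot ourselves. First, we must define how we want the model to decode the encoded input.

Greedy decoding

Greedy decoding is the decoding method we use during training when we are **not** using teacher forcing. In other words, at each time step we simply choose the word from decoder_output with the highest softmax value. This decoding method is optimal at the level of a single time step, but it does not guarantee the most probable sentence overall, because an early choice is never revised later.

To facilitate the greedy decoding operation, we define a GreedySearchDecoder class. When run, an object of this class takes an input sequence (input_seq) of shape (input_seq length, 1), a tensor holding the input length (input_length), and a max_length that bounds the length of the response sentence. The input sentence is evaluated using the following computational graph: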
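A tiny invented example shows the gap between per-step and whole-sequence optimality. In the sketch below the probability tables are made up, and the helpers greedy and exhaustive are hypothetical; exhaustive enumeration is only feasible here because the vocabulary and the sequence length are tiny.

```python
# Invented model: first-step word probabilities, then next-word
# probabilities conditioned on the first word.
first_step = {'A': 0.6, 'B': 0.4}
second_step = {
    'A': {'x': 0.5, 'y': 0.5},
    'B': {'x': 0.9, 'y': 0.1},
}


def sequence_prob(w1, w2):
    return first_step[w1] * second_step[w1][w2]


def greedy():
    # Pick the single most probable word at each step, never revisiting it.
    w1 = max(first_step, key=first_step.get)
    w2 = max(second_step[w1], key=second_step[w1].get)
    return (w1, w2), sequence_prob(w1, w2)


def exhaustive():
    # Score every two-word sequence and keep the most probable one.
    candidates = [(w1, w2) for w1 in first_step for w2 in second_step[w1]]
    best = max(candidates, key=lambda seq: sequence_prob(*seq))
    return best, sequence_prob(*best)


print(greedy())      # (('A', 'x'), 0.3)
print(exhaustive())  # best sequence is ('B', 'x'), probability about 0.36
```

Beam search, which keeps several partial candidates per step, is a common way to narrow this gap; this tutorial sticks with greedy decoding.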

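Computation graph:

1. Forward the input through the encoder model.
2. Prepare the encoder's final hidden layer to be the first hidden input to the decoder.
3. Initialize the decoder's first input as SOS_token.
4. Initialize tensors to append decoded words to.
5. Iteratively decode one word token at a time:
   a. Forward pass through the decoder.
   b. Obtain the most likely word token and its softmax score.
   c. Record the token and score.
   d. Prepare the current token to be the next decoder input.
6. Return the collections of word tokens and scores.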
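The steps above can be traced with a framework-free sketch. Steps 1 and 2 are skipped because there is no encoder: a hypothetical toy_decoder_step stands in for the decoder, ignoring hidden state and encoder outputs and mapping the previous token to a probability list over a four-word vocabulary. The token ids (PAD=0, SOS=1, EOS=2) are assumed to match the tutorial's earlier definitions. Like GreedySearchDecoder, the loop always runs max_length steps instead of stopping at EOS, and EOS and PAD are filtered out afterwards, as evaluateInput does.

```python
PAD_TOKEN, SOS_TOKEN, EOS_TOKEN = 0, 1, 2  # assumed ids
INDEX2WORD = {0: 'PAD', 1: 'SOS', 2: 'EOS', 3: 'hello'}

# Hypothetical next-token distributions, keyed by the previous token.
TOY_TRANSITIONS = {
    SOS_TOKEN: [0.0, 0.0, 0.2, 0.8],
    3: [0.0, 0.0, 0.7, 0.3],
    EOS_TOKEN: [0.9, 0.0, 0.1, 0.0],
    PAD_TOKEN: [0.9, 0.0, 0.1, 0.0],
}


def toy_decoder_step(prev_token):
    """Stand-in for one decoder forward pass (no hidden state)."""
    return TOY_TRANSITIONS[prev_token]


def greedy_decode(max_length):
    decoder_input = SOS_TOKEN          # step 3: start from SOS
    all_tokens, all_scores = [], []    # step 4: empty collections
    for _ in range(max_length):        # step 5: one token at a time
        probs = toy_decoder_step(decoder_input)                # 5a: forward pass
        best = max(range(len(probs)), key=lambda i: probs[i])  # 5b: most likely token
        all_tokens.append(best)                                # 5c: record token
        all_scores.append(probs[best])                         #     and its score
        decoder_input = best                                   # 5d: feed it back in
    return all_tokens, all_scores      # step 6


tokens, scores = greedy_decode(max_length=3)
print(tokens, scores)  # [3, 2, 0] [0.8, 0.7, 0.9]
words = [INDEX2WORD[t] for t in tokens if INDEX2WORD[t] not in ('EOS', 'PAD')]
print(words)  # ['hello']
```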

```python
class GreedySearchDecoder(nn.Module):
    def __init__(self, encoder, decoder):
        super(GreedySearchDecoder, self).__init__()
        self.encoder = encoder
        self.decoder = decoder

    def forward(self, input_seq, input_length, max_length):
        # Forward input through encoder model
        encoder_outputs, encoder_hidden = self.encoder(input_seq, input_length)
        # Prepare encoder's final hidden layer to be first hidden input to the decoder
        decoder_hidden = encoder_hidden[:self.decoder.n_layers]
        # Initialize decoder input with SOS_token
        decoder_input = torch.ones(1, 1, device=device, dtype=torch.long) * SOS_token
        # Initialize tensors to append decoded words to
        all_tokens = torch.zeros([0], device=device, dtype=torch.long)
        all_scores = torch.zeros([0], device=device)
        # Iteratively decode one word token at a time
        for _ in range(max_length):
            # Forward pass through decoder
            decoder_output, decoder_hidden = self.decoder(decoder_input, decoder_hidden, encoder_outputs)
            # Obtain most likely word token and its softmax score
            decoder_scores, decoder_input = torch.max(decoder_output, dim=1)
            # Record token and score
            all_tokens = torch.cat((all_tokens, decoder_input), dim=0)
            all_scores = torch.cat((all_scores, decoder_scores), dim=0)
            # Prepare current token to be next decoder input (add a dimension)
            decoder_input = torch.unsqueeze(decoder_input, 0)
        # Return collections of word tokens and scores
        return all_tokens, all_scores
```

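Evaluate my text

Now that we have our decoding method defined, we can write functions for evaluating a string input sentence. The evaluate function manages the low-level process of handling the input sentence. We first format the sentence as an input batch of word indexes with *batch_size==1*. We do this by converting the words of the sentence to their corresponding indexes and transposing the dimensions to prepare the tensor for our models. We also create a lengths tensor that contains the length of our input sentence; it holds a single value because we are only evaluating one sentence at a time (batch_size==1), and it stays on the CPU. Next, we obtain the decoded response sentence tensor using our GreedySearchDecoder object (searcher). Finally, we convert the response's indexes to words and return the list of decoded words.

evaluateInput acts as the user interface for our chatbot. When called, an input text field appears in which we can enter our query sentence. After we type an input sentence and press Enter, the text is normalized in the same way as our training data and is ultimately fed to the evaluate function to obtain a decoded output sentence. We loop this process, so we can keep chatting with our bot until we enter either "q" or "quit".

Finally, if a sentence is entered that contains a word not in the vocabulary, the vocabulary lookup raises a KeyError. We handle this gracefully by printing an error message and prompting the user to enter another sentence.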
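The batching done at the start of evaluate can be mimicked without tensors. In this sketch, word2index is a hypothetical stand-in for voc.word2index, and indexes_from_sentence assumes, as the tutorial's indexesFromSentence helper does, that an EOS index is appended to every sentence. Transposing the 1 x seq_len batch gives the (seq_len, 1) layout the encoder expects, and an out-of-vocabulary word surfaces as the KeyError that evaluateInput catches.

```python
EOS_TOKEN = 2                           # assumed id
word2index = {'hello': 3, 'there': 4}   # hypothetical vocabulary


def indexes_from_sentence(word2index, sentence):
    # Raises KeyError for any word missing from the vocabulary.
    return [word2index[word] for word in sentence.split(' ')] + [EOS_TOKEN]


def format_single_sentence(word2index, sentence):
    indexes_batch = [indexes_from_sentence(word2index, sentence)]  # shape (1, seq_len)
    lengths = [len(indexes) for indexes in indexes_batch]          # one entry: batch_size == 1
    input_batch = [list(row) for row in zip(*indexes_batch)]       # transpose -> (seq_len, 1)
    return input_batch, lengths


print(format_single_sentence(word2index, 'hello there'))  # ([[3], [4], [2]], [3])

try:
    format_single_sentence(word2index, 'hello stranger')
except KeyError as err:
    print('Error: Encountered unknown word.', err)
```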

```python
def evaluate(encoder, decoder, searcher, voc, sentence, max_length=MAX_LENGTH):
    ### Format input sentence as a batch
    # words -> indexes
    indexes_batch = [indexesFromSentence(voc, sentence)]
    # Create lengths tensor
    lengths = torch.tensor([len(indexes) for indexes in indexes_batch])
    # Transpose dimensions of batch to match models' expectations
    input_batch = torch.LongTensor(indexes_batch).transpose(0, 1)
    # Use appropriate device
    input_batch = input_batch.to(device)
    lengths = lengths.to("cpu")
    # Decode sentence with searcher
    tokens, scores = searcher(input_batch, lengths, max_length)
    # indexes -> words
    decoded_words = [voc.index2word[token.item()] for token in tokens]
    return decoded_words


def evaluateInput(encoder, decoder, searcher, voc):
    input_sentence = ''
    while True:
        try:
            # Get input sentence
            input_sentence = input('> ')
            # Check if it is quit case
            if input_sentence == 'q' or input_sentence == 'quit': break
            # Normalize sentence
            input_sentence = normalizeString(input_sentence)
            # Evaluate sentence
            output_words = evaluate(encoder, decoder, searcher, voc, input_sentence)
            # Format and print response sentence
            output_words[:] = [x for x in output_words if not (x == 'EOS' or x == 'PAD')]
            print('Bot:', ' '.join(output_words))

        except KeyError:
            print("Error: Encountered unknown word.")
```

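Run Model

Finally, it is time to run our model!

Regardless of whether we want to train or test the chatbot model, we must initialize the individual encoder and decoder models. In the following block, we set our desired configurations, choose to start from scratch or set a checkpoint to load from, and build and initialize the models. Feel free to experiment with different model configurations to optimize performance.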
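The attn_model setting selects one of the three scoring functions from Luong et al.: dot scores a decoder state h_t against an encoder output h_s as h_t · h_s, general as h_t · (W_a h_s), and concat as v_a · tanh(W_a [h_t; h_s]). The plain-Python sketch below evaluates each score on tiny hand-picked vectors. The weights W_a and v_a are made up (in the model they are learned), bias terms are omitted, and these standalone functions only loosely mirror the tutorial's Attn module.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(W, x):
    # Multiply matrix W (list of rows) by vector x.
    return [dot(row, x) for row in W]


def dot_score(h_t, h_s):
    return dot(h_t, h_s)


def general_score(h_t, h_s, W_a):
    # W_a is d x d: transform the encoder output before the dot product.
    return dot(h_t, matvec(W_a, h_s))


def concat_score(h_t, h_s, W_a, v_a):
    # W_a is d x 2d: applied to the concatenation [h_t; h_s], then tanh, then v_a.
    hidden = [math.tanh(z) for z in matvec(W_a, h_t + h_s)]
    return dot(v_a, hidden)


h_t = [1.0, 2.0]   # decoder hidden state (made up)
h_s = [3.0, 4.0]   # one encoder output (made up)
identity = [[1.0, 0.0], [0.0, 1.0]]

print(dot_score(h_t, h_s))                # 11.0
print(general_score(h_t, h_s, identity))  # 11.0: 'general' with W_a = I reduces to 'dot'
W_cat = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
print(concat_score(h_t, h_s, W_cat, [1.0, 1.0]))  # tanh(1), about 0.7616
```

In the model, such scores are computed for every encoder output and passed through a softmax to obtain the attention weights.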

```python
# Configure models
model_name = 'cb_model'
attn_model = 'dot'
# attn_model = 'general'
# attn_model = 'concat'
hidden_size = 500
encoder_n_layers = 2
decoder_n_layers = 2
dropout = 0.1
batch_size = 64

# Set checkpoint to load from; set to None if starting from scratch
loadFilename = None
checkpoint_iter = 4000
```

Sample code to load from a checkpoint:

```python
loadFilename = os.path.join(save_dir, model_name, corpus_name,
                            '{}-{}_{}'.format(encoder_n_layers, decoder_n_layers, hidden_size),
                            '{}_checkpoint.tar'.format(checkpoint_iter))
```

```python
# Load model if a `loadFilename` is provided
if loadFilename:
    # If loading on same machine the model was trained on
    checkpoint = torch.load(loadFilename)
    # If loading a model trained on GPU to CPU
    #checkpoint = torch.load(loadFilename, map_location=torch.device('cpu'))
    encoder_sd = checkpoint['en']
    decoder_sd = checkpoint['de']
    encoder_optimizer_sd = checkpoint['en_opt']
    decoder_optimizer_sd = checkpoint['de_opt']
    embedding_sd = checkpoint['embedding']
    voc.__dict__ = checkpoint['voc_dict']


print('Building encoder and decoder ...')
# Initialize word embeddings
embedding = nn.Embedding(voc.num_words, hidden_size)
if loadFilename:
    embedding.load_state_dict(embedding_sd)
# Initialize encoder & decoder models
encoder = EncoderRNN(hidden_size, embedding, encoder_n_layers, dropout)
decoder = LuongAttnDecoderRNN(attn_model, embedding, hidden_size, voc.num_words, decoder_n_layers, dropout)
if loadFilename:
    encoder.load_state_dict(encoder_sd)
    decoder.load_state_dict(decoder_sd)
# Use appropriate device
encoder = encoder.to(device)
decoder = decoder.to(device)
print('Models built and ready to go!')
```

Building encoder and decoder ...

Models built and ready to go!

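Run Training

Run the following block if you want to train the model.

First we set the training parameters, then we initialize our optimizers, and finally we call the trainIters function to run our training iterations.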
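Two of the settings in the next block interact with code shown earlier. decoder_learning_ratio scales the decoder's learning rate relative to the encoder's, and teacher_forcing_ratio is the probability that train uses teacher forcing for a whole batch, decided by a single random.random() draw per call. The standard-library sketch below shows the resulting learning rates and how often teacher forcing would be chosen for a few ratios; it uses a seeded random.Random instance (an assumption for reproducibility, whereas train calls the module-level random.random()), and teacher_forcing_count is a hypothetical helper.

```python
import random

learning_rate = 0.0001
decoder_learning_ratio = 5.0
encoder_lr = learning_rate
decoder_lr = learning_rate * decoder_learning_ratio
print(encoder_lr, decoder_lr)  # decoder_lr is about 5e-4


def teacher_forcing_count(teacher_forcing_ratio, n_batches, seed=0):
    """Count how many of n_batches would use teacher forcing (same test as train())."""
    rng = random.Random(seed)
    return sum(rng.random() < teacher_forcing_ratio for _ in range(n_batches))


for ratio in (1.0, 0.5, 0.0):
    print(ratio, teacher_forcing_count(ratio, n_batches=1000))
```

Because random.random() returns values in [0.0, 1.0), a ratio of 1.0, as configured below, means the branch of train without teacher forcing never runs.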

```python
# Configure training/optimization
clip = 50.0
teacher_forcing_ratio = 1.0
learning_rate = 0.0001
decoder_learning_ratio = 5.0
n_iteration = 4000
print_every = 1
save_every = 500

# Ensure dropout layers are in train mode
encoder.train()
decoder.train()

# Initialize optimizers
print('Building optimizers ...')
encoder_optimizer = optim.Adam(encoder.parameters(), lr=learning_rate)
decoder_optimizer = optim.Adam(decoder.parameters(), lr=learning_rate * decoder_learning_ratio)
if loadFilename:
    encoder_optimizer.load_state_dict(encoder_optimizer_sd)
    decoder_optimizer.load_state_dict(decoder_optimizer_sd)

# Move any tensors in the optimizer state (e.g. loaded from a checkpoint)
# onto the same device as the model, such as the current accelerator
for state in encoder_optimizer.state.values():
    for k, v in state.items():
        if isinstance(v, torch.Tensor):
            state[k] = v.to(device)

for state in decoder_optimizer.state.values():
    for k, v in state.items():
        if isinstance(v, torch.Tensor):
            state[k] = v.to(device)

# Run training iterations
print("Starting Training!")
trainIters(model_name, voc, pairs, encoder, decoder, encoder_optimizer, decoder_optimizer,
           embedding, encoder_n_layers, decoder_n_layers, save_dir, n_iteration, batch_size,
           print_every, save_every, clip, corpus_name, loadFilename)
```

Building optimizers ...

Starting Training!

Initializing ...

Training...

Iteration: 1; Percent complete: 0.0%; Average loss: 8.9636

Iteration: 2; Percent complete: 0.1%; Average loss: 8.8313

Iteration: 3; Percent complete: 0.1%; Average loss: 8.6581

Iteration: 4; Percent complete: 0.1%; Average loss: 8.2725

Iteration: 5; Percent complete: 0.1%; Average loss: 7.9226

Iteration: 6; Percent complete: 0.1%; Average loss: 7.3283

Iteration: 7; Percent complete: 0.2%; Average loss: 6.9254

Iteration: 8; Percent complete: 0.2%; Average loss: 6.6599

Iteration: 9; Percent complete: 0.2%; Average loss: 6.5629

Iteration: 10; Percent complete: 0.2%; Average loss: 6.5117

Iteration: 11; Percent complete: 0.3%; Average loss: 6.2220

Iteration: 12; Percent complete: 0.3%; Average loss: 5.9812

Iteration: 13; Percent complete: 0.3%; Average loss: 5.9450

Iteration: 14; Percent complete: 0.4%; Average loss: 5.7474

Iteration: 15; Percent complete: 0.4%; Average loss: 5.6762

Iteration: 16; Percent complete: 0.4%; Average loss: 5.3747

Iteration: 17; Percent complete: 0.4%; Average loss: 5.1390

Iteration: 18; Percent complete: 0.4%; Average loss: 5.2316

Iteration: 19; Percent complete: 0.5%; Average loss: 4.9768

Iteration: 20; Percent complete: 0.5%; Average loss: 4.9931

Iteration: 21; Percent complete: 0.5%; Average loss: 4.9274

Iteration: 22; Percent complete: 0.5%; Average loss: 5.0186

Iteration: 23; Percent complete: 0.6%; Average loss: 4.9807

Iteration: 24; Percent complete: 0.6%; Average loss: 4.9375

Iteration: 25; Percent complete: 0.6%; Average loss: 5.0517

Iteration: 26; Percent complete: 0.7%; Average loss: 5.0525

Iteration: 27; Percent complete: 0.7%; Average loss: 5.0630

Iteration: 28; Percent complete: 0.7%; Average loss: 4.6687

Iteration: 29; Percent complete: 0.7%; Average loss: 4.6478

Iteration: 30; Percent complete: 0.8%; Average loss: 4.7522

Iteration: 31; Percent complete: 0.8%; Average loss: 4.9685

Iteration: 32; Percent complete: 0.8%; Average loss: 4.8710

Iteration: 33; Percent complete: 0.8%; Average loss: 4.7697

Iteration: 34; Percent complete: 0.9%; Average loss: 4.7266

Iteration: 35; Percent complete: 0.9%; Average loss: 4.8286

Iteration: 36; Percent complete: 0.9%; Average loss: 4.9682

Iteration: 37; Percent complete: 0.9%; Average loss: 4.9386

Iteration: 38; Percent complete: 0.9%; Average loss: 4.7891

Iteration: 39; Percent complete: 1.0%; Average loss: 4.7858

Iteration: 40; Percent complete: 1.0%; Average loss: 4.7050

Iteration: 41; Percent complete: 1.0%; Average loss: 4.7227

Iteration: 42; Percent complete: 1.1%; Average loss: 4.8539

Iteration: 43; Percent complete: 1.1%; Average loss: 4.8597

Iteration: 44; Percent complete: 1.1%; Average loss: 4.5262

Iteration: 45; Percent complete: 1.1%; Average loss: 4.6508

Iteration: 46; Percent complete: 1.1%; Average loss: 4.8285

Iteration: 47; Percent complete: 1.2%; Average loss: 4.5819

Iteration: 48; Percent complete: 1.2%; Average loss: 4.6844

Iteration: 49; Percent complete: 1.2%; Average loss: 4.6983

Iteration: 50; Percent complete: 1.2%; Average loss: 4.7229

Iteration: 51; Percent complete: 1.3%; Average loss: 4.4266

Iteration: 52; Percent complete: 1.3%; Average loss: 4.7121

Iteration: 53; Percent complete: 1.3%; Average loss: 4.5898

Iteration: 54; Percent complete: 1.4%; Average loss: 4.6904

Iteration: 55; Percent complete: 1.4%; Average loss: 4.8149

Iteration: 56; Percent complete: 1.4%; Average loss: 4.5183

Iteration: 57; Percent complete: 1.4%; Average loss: 4.5077

Iteration: 58; Percent complete: 1.5%; Average loss: 4.4994

Iteration: 59; Percent complete: 1.5%; Average loss: 4.6849

Iteration: 60; Percent complete: 1.5%; Average loss: 4.7306

Iteration: 61; Percent complete: 1.5%; Average loss: 4.5112

Iteration: 62; Percent complete: 1.6%; Average loss: 4.8184

Iteration: 63; Percent complete: 1.6%; Average loss: 4.6478

Iteration: 64; Percent complete: 1.6%; Average loss: 4.8283

Iteration: 65; Percent complete: 1.6%; Average loss: 4.5789

Iteration: 66; Percent complete: 1.7%; Average loss: 4.2820

Iteration: 67; Percent complete: 1.7%; Average loss: 4.7813

Iteration: 68; Percent complete: 1.7%; Average loss: 4.7474

Iteration: 69; Percent complete: 1.7%; Average loss: 4.3980

Iteration: 70; Percent complete: 1.8%; Average loss: 4.4391

Iteration: 71; Percent complete: 1.8%; Average loss: 4.4420

Iteration: 72; Percent complete: 1.8%; Average loss: 4.4624

Iteration: 73; Percent complete: 1.8%; Average loss: 4.5743

Iteration: 74; Percent complete: 1.8%; Average loss: 4.5554

Iteration: 75; Percent complete: 1.9%; Average loss: 4.5365

Iteration: 76; Percent complete: 1.9%; Average loss: 4.5938

Iteration: 77; Percent complete: 1.9%; Average loss: 4.3348

Iteration: 78; Percent complete: 1.9%; Average loss: 4.6910

Iteration: 79; Percent complete: 2.0%; Average loss: 4.4926

Iteration: 80; Percent complete: 2.0%; Average loss: 4.4112

Iteration: 81; Percent complete: 2.0%; Average loss: 4.4939

Iteration: 82; Percent complete: 2.1%; Average loss: 4.5741

Iteration: 83; Percent complete: 2.1%; Average loss: 4.5178

Iteration: 84; Percent complete: 2.1%; Average loss: 4.3083

Iteration: 85; Percent complete: 2.1%; Average loss: 4.4833

Iteration: 86; Percent complete: 2.1%; Average loss: 4.5240

Iteration: 87; Percent complete: 2.2%; Average loss: 4.5519

Iteration: 88; Percent complete: 2.2%; Average loss: 4.5280

Iteration: 89; Percent complete: 2.2%; Average loss: 4.4399

Iteration: 90; Percent complete: 2.2%; Average loss: 4.4387

Iteration: 91; Percent complete: 2.3%; Average loss: 4.4504

Iteration: 92; Percent complete: 2.3%; Average loss: 4.4118

Iteration: 93; Percent complete: 2.3%; Average loss: 4.2436

Iteration: 94; Percent complete: 2.4%; Average loss: 4.5039

Iteration: 95; Percent complete: 2.4%; Average loss: 4.7371

Iteration: 96; Percent complete: 2.4%; Average loss: 4.4701

Iteration: 97; Percent complete: 2.4%; Average loss: 4.6615

Iteration: 98; Percent complete: 2.5%; Average loss: 4.4491

Iteration: 99; Percent complete: 2.5%; Average loss: 4.4849

Iteration: 100; Percent complete: 2.5%; Average loss: 4.2803

Iteration: 101; Percent complete: 2.5%; Average loss: 4.5781

Iteration: 102; Percent complete: 2.5%; Average loss: 4.4069

Iteration: 103; Percent complete: 2.6%; Average loss: 4.0891

Iteration: 104; Percent complete: 2.6%; Average loss: 4.4922

Iteration: 105; Percent complete: 2.6%; Average loss: 4.1932

Iteration: 106; Percent complete: 2.6%; Average loss: 4.4610

Iteration: 107; Percent complete: 2.7%; Average loss: 4.3623

Iteration: 108; Percent complete: 2.7%; Average loss: 4.6201

Iteration: 109; Percent complete: 2.7%; Average loss: 4.3136

Iteration: 110; Percent complete: 2.8%; Average loss: 4.4397

Iteration: 111; Percent complete: 2.8%; Average loss: 4.5106

Iteration: 112; Percent complete: 2.8%; Average loss: 4.4162

Iteration: 113; Percent complete: 2.8%; Average loss: 4.4058

Iteration: 114; Percent complete: 2.9%; Average loss: 4.3092

Iteration: 115; Percent complete: 2.9%; Average loss: 4.4110

Iteration: 116; Percent complete: 2.9%; Average loss: 4.3312

Iteration: 117; Percent complete: 2.9%; Average loss: 4.5593

Iteration: 118; Percent complete: 2.9%; Average loss: 4.2946

Iteration: 119; Percent complete: 3.0%; Average loss: 4.2876

Iteration: 120; Percent complete: 3.0%; Average loss: 4.5015

Iteration: 121; Percent complete: 3.0%; Average loss: 4.2795

Iteration: 122; Percent complete: 3.0%; Average loss: 4.4189

Iteration: 123; Percent complete: 3.1%; Average loss: 4.4515

Iteration: 124; Percent complete: 3.1%; Average loss: 4.4198

Iteration: 125; Percent complete: 3.1%; Average loss: 4.5719

Iteration: 126; Percent complete: 3.1%; Average loss: 4.3101

Iteration: 127; Percent complete: 3.2%; Average loss: 4.3026

Iteration: 128; Percent complete: 3.2%; Average loss: 4.2357

Iteration: 129; Percent complete: 3.2%; Average loss: 4.2856

Iteration: 130; Percent complete: 3.2%; Average loss: 4.3964

Iteration: 131; Percent complete: 3.3%; Average loss: 4.2642

Iteration: 132; Percent complete: 3.3%; Average loss: 3.8943

Iteration: 133; Percent complete: 3.3%; Average loss: 3.9957

Iteration: 134; Percent complete: 3.4%; Average loss: 4.1629

Iteration: 135; Percent complete: 3.4%; Average loss: 4.5063

Iteration: 136; Percent complete: 3.4%; Average loss: 4.2987

Iteration: 137; Percent complete: 3.4%; Average loss: 4.5523

Iteration: 138; Percent complete: 3.5%; Average loss: 4.4045

Iteration: 139; Percent complete: 3.5%; Average loss: 4.3533

Iteration: 140; Percent complete: 3.5%; Average loss: 4.5834

Iteration: 141; Percent complete: 3.5%; Average loss: 4.1530

Iteration: 142; Percent complete: 3.5%; Average loss: 4.1735

Iteration: 143; Percent complete: 3.6%; Average loss: 4.2615

Iteration: 144; Percent complete: 3.6%; Average loss: 4.4184

Iteration: 145; Percent complete: 3.6%; Average loss: 4.4300

Iteration: 146; Percent complete: 3.6%; Average loss: 4.3787

Iteration: 147; Percent complete: 3.7%; Average loss: 4.2749

Iteration: 148; Percent complete: 3.7%; Average loss: 3.9847

Iteration: 149; Percent complete: 3.7%; Average loss: 4.4000

Iteration: 150; Percent complete: 3.8%; Average loss: 4.3535

Iteration: 151; Percent complete: 3.8%; Average loss: 4.2118

Iteration: 152; Percent complete: 3.8%; Average loss: 4.3036

Iteration: 153; Percent complete: 3.8%; Average loss: 4.1405

Iteration: 154; Percent complete: 3.9%; Average loss: 4.1459

Iteration: 155; Percent complete: 3.9%; Average loss: 4.0592

Iteration: 156; Percent complete: 3.9%; Average loss: 4.1635

Iteration: 157; Percent complete: 3.9%; Average loss: 4.2796

Iteration: 158; Percent complete: 4.0%; Average loss: 4.2391

Iteration: 159; Percent complete: 4.0%; Average loss: 4.3601

Iteration: 160; Percent complete: 4.0%; Average loss: 3.9695

Iteration: 161; Percent complete: 4.0%; Average loss: 4.3025

Iteration: 162; Percent complete: 4.0%; Average loss: 4.0594

Iteration: 163; Percent complete: 4.1%; Average loss: 4.3165

Iteration: 164; Percent complete: 4.1%; Average loss: 4.1809

Iteration: 165; Percent complete: 4.1%; Average loss: 4.0224

Iteration: 166; Percent complete: 4.2%; Average loss: 4.2015

Iteration: 167; Percent complete: 4.2%; Average loss: 4.3993

Iteration: 168; Percent complete: 4.2%; Average loss: 4.3080

Iteration: 169; Percent complete: 4.2%; Average loss: 4.1988

Iteration: 170; Percent complete: 4.2%; Average loss: 4.1355

Iteration: 171; Percent complete: 4.3%; Average loss: 4.1842

Iteration: 172; Percent complete: 4.3%; Average loss: 4.4067

Iteration: 173; Percent complete: 4.3%; Average loss: 4.2898

Iteration: 174; Percent complete: 4.3%; Average loss: 4.3039

Iteration: 175; Percent complete: 4.4%; Average loss: 4.1532

Iteration: 176; Percent complete: 4.4%; Average loss: 3.9877

Iteration: 177; Percent complete: 4.4%; Average loss: 4.0167

Iteration: 178; Percent complete: 4.5%; Average loss: 3.9475

Iteration: 179; Percent complete: 4.5%; Average loss: 4.1835

Iteration: 180; Percent complete: 4.5%; Average loss: 4.4099

Iteration: 181; Percent complete: 4.5%; Average loss: 4.4395

Iteration: 182; Percent complete: 4.5%; Average loss: 4.0188

Iteration: 183; Percent complete: 4.6%; Average loss: 4.2495

Iteration: 184; Percent complete: 4.6%; Average loss: 4.1611

Iteration: 185; Percent complete: 4.6%; Average loss: 4.3865

Iteration: 186; Percent complete: 4.7%; Average loss: 4.0871

Iteration: 187; Percent complete: 4.7%; Average loss: 4.2351

Iteration: 188; Percent complete: 4.7%; Average loss: 3.9684

Iteration: 189; Percent complete: 4.7%; Average loss: 4.4671

Iteration: 190; Percent complete: 4.8%; Average loss: 4.2609

Iteration: 191; Percent complete: 4.8%; Average loss: 4.1297

Iteration: 192; Percent complete: 4.8%; Average loss: 4.1708

Iteration: 193; Percent complete: 4.8%; Average loss: 4.0272

Iteration: 194; Percent complete: 4.9%; Average loss: 4.1511

Iteration: 195; Percent complete: 4.9%; Average loss: 4.0897

Iteration: 196; Percent complete: 4.9%; Average loss: 3.9471

Iteration: 197; Percent complete: 4.9%; Average loss: 4.2495

Iteration: 198; Percent complete: 5.0%; Average loss: 4.1860

Iteration: 199; Percent complete: 5.0%; Average loss: 3.9979

Iteration: 200; Percent complete: 5.0%; Average loss: 3.8809

Iteration: 201; Percent complete: 5.0%; Average loss: 4.2186

Iteration: 202; Percent complete: 5.1%; Average loss: 4.3366

Iteration: 203; Percent complete: 5.1%; Average loss: 4.0707

Iteration: 204; Percent complete: 5.1%; Average loss: 4.1218

Iteration: 205; Percent complete: 5.1%; Average loss: 3.8812

Iteration: 206; Percent complete: 5.1%; Average loss: 4.0558

Iteration: 207; Percent complete: 5.2%; Average loss: 4.0501

Iteration: 208; Percent complete: 5.2%; Average loss: 4.0757

Iteration: 209; Percent complete: 5.2%; Average loss: 3.9434

Iteration: 210; Percent complete: 5.2%; Average loss: 3.7664

Iteration: 211; Percent complete: 5.3%; Average loss: 3.9357

Iteration: 212; Percent complete: 5.3%; Average loss: 4.3763

Iteration: 213; Percent complete: 5.3%; Average loss: 4.2333

Iteration: 214; Percent complete: 5.3%; Average loss: 4.0965

Iteration: 215; Percent complete: 5.4%; Average loss: 3.8343

Iteration: 216; Percent complete: 5.4%; Average loss: 3.7086

Iteration: 217; Percent complete: 5.4%; Average loss: 4.3028

Iteration: 218; Percent complete: 5.5%; Average loss: 4.1869

Iteration: 219; Percent complete: 5.5%; Average loss: 4.0864

Iteration: 220; Percent complete: 5.5%; Average loss: 3.9577

Iteration: 221; Percent complete: 5.5%; Average loss: 3.9617

Iteration: 222; Percent complete: 5.5%; Average loss: 3.9971

Iteration: 223; Percent complete: 5.6%; Average loss: 3.7839

Iteration: 224; Percent complete: 5.6%; Average loss: 3.9297

Iteration: 225; Percent complete: 5.6%; Average loss: 4.2698

Iteration: 226; Percent complete: 5.7%; Average loss: 4.1036

Iteration: 227; Percent complete: 5.7%; Average loss: 4.1896

Iteration: 228; Percent complete: 5.7%; Average loss: 3.9086

Iteration: 229; Percent complete: 5.7%; Average loss: 3.7855

Iteration: 230; Percent complete: 5.8%; Average loss: 3.6887

Iteration: 231; Percent complete: 5.8%; Average loss: 4.0187

Iteration: 232; Percent complete: 5.8%; Average loss: 3.9063

Iteration: 233; Percent complete: 5.8%; Average loss: 4.0903

Iteration: 234; Percent complete: 5.9%; Average loss: 4.0305

Iteration: 235; Percent complete: 5.9%; Average loss: 4.1664

Iteration: 236; Percent complete: 5.9%; Average loss: 4.1239

Iteration: 237; Percent complete: 5.9%; Average loss: 3.8724

Iteration: 238; Percent complete: 5.9%; Average loss: 3.9636

Iteration: 239; Percent complete: 6.0%; Average loss: 4.2023

Iteration: 240; Percent complete: 6.0%; Average loss: 4.1441

Iteration: 241; Percent complete: 6.0%; Average loss: 4.1056

Iteration: 242; Percent complete: 6.0%; Average loss: 3.8382

Iteration: 243; Percent complete: 6.1%; Average loss: 3.8419

Iteration: 244; Percent complete: 6.1%; Average loss: 4.2301

Iteration: 245; Percent complete: 6.1%; Average loss: 4.0734

Iteration: 246; Percent complete: 6.2%; Average loss: 3.9312

Iteration: 247; Percent complete: 6.2%; Average loss: 3.8807

Iteration: 248; Percent complete: 6.2%; Average loss: 4.1320

Iteration: 249; Percent complete: 6.2%; Average loss: 3.9059

Iteration: 250; Percent complete: 6.2%; Average loss: 3.6572

Iteration: 251; Percent complete: 6.3%; Average loss: 4.1304

Iteration: 252; Percent complete: 6.3%; Average loss: 3.8158

Iteration: 253; Percent complete: 6.3%; Average loss: 3.8639

Iteration: 254; Percent complete: 6.3%; Average loss: 4.0487

Iteration: 255; Percent complete: 6.4%; Average loss: 3.9538

Iteration: 256; Percent complete: 6.4%; Average loss: 3.8879

Iteration: 257; Percent complete: 6.4%; Average loss: 3.8577

Iteration: 258; Percent complete: 6.5%; Average loss: 3.8389

Iteration: 259; Percent complete: 6.5%; Average loss: 3.9338

Iteration: 260; Percent complete: 6.5%; Average loss: 3.7612

Iteration: 261; Percent complete: 6.5%; Average loss: 3.9677

Iteration: 262; Percent complete: 6.6%; Average loss: 3.9856

Iteration: 263; Percent complete: 6.6%; Average loss: 3.9244

Iteration: 264; Percent complete: 6.6%; Average loss: 3.7804

Iteration: 265; Percent complete: 6.6%; Average loss: 3.8185

Iteration: 266; Percent complete: 6.7%; Average loss: 4.0429

Iteration: 267; Percent complete: 6.7%; Average loss: 4.1593

Iteration: 268; Percent complete: 6.7%; Average loss: 3.9855

Iteration: 269; Percent complete: 6.7%; Average loss: 4.0174

Iteration: 270; Percent complete: 6.8%; Average loss: 4.1623

Iteration: 271; Percent complete: 6.8%; Average loss: 4.0245

Iteration: 272; Percent complete: 6.8%; Average loss: 4.0824

Iteration: 273; Percent complete: 6.8%; Average loss: 3.9129

Iteration: 274; Percent complete: 6.9%; Average loss: 3.9432

Iteration: 275; Percent complete: 6.9%; Average loss: 3.8394

Iteration: 276; Percent complete: 6.9%; Average loss: 4.2996

Iteration: 277; Percent complete: 6.9%; Average loss: 3.9722

Iteration: 278; Percent complete: 7.0%; Average loss: 3.8118

Iteration: 279; Percent complete: 7.0%; Average loss: 3.8013

Iteration: 280; Percent complete: 7.0%; Average loss: 4.0696

Iteration: 281; Percent complete: 7.0%; Average loss: 3.6854

Iteration: 282; Percent complete: 7.0%; Average loss: 3.8799

Iteration: 283; Percent complete: 7.1%; Average loss: 3.8450

Iteration: 284; Percent complete: 7.1%; Average loss: 3.6209

Iteration: 285; Percent complete: 7.1%; Average loss: 3.7942

Iteration: 286; Percent complete: 7.1%; Average loss: 3.9372

Iteration: 287; Percent complete: 7.2%; Average loss: 4.0170

Iteration: 288; Percent complete: 7.2%; Average loss: 4.0383

Iteration: 289; Percent complete: 7.2%; Average loss: 4.0446

Iteration: 290; Percent complete: 7.2%; Average loss: 4.2003

Iteration: 291; Percent complete: 7.3%; Average loss: 4.2163

Iteration: 292; Percent complete: 7.3%; Average loss: 4.0547

Iteration: 293; Percent complete: 7.3%; Average loss: 3.7187

Iteration: 294; Percent complete: 7.3%; Average loss: 3.8356

Iteration: 295; Percent complete: 7.4%; Average loss: 3.7154

Iteration: 296; Percent complete: 7.4%; Average loss: 4.0915

Iteration: 297; Percent complete: 7.4%; Average loss: 4.0231

Iteration: 298; Percent complete: 7.4%; Average loss: 3.8031

Iteration: 299; Percent complete: 7.5%; Average loss: 3.9805

Iteration: 300; Percent complete: 7.5%; Average loss: 4.2603

Iteration: 301; Percent complete: 7.5%; Average loss: 3.9566

Iteration: 302; Percent complete: 7.5%; Average loss: 3.9662

Iteration: 303; Percent complete: 7.6%; Average loss: 3.8608

Iteration: 304; Percent complete: 7.6%; Average loss: 3.8439

Iteration: 305; Percent complete: 7.6%; Average loss: 4.1489

Iteration: 306; Percent complete: 7.6%; Average loss: 3.7840

Iteration: 307; Percent complete: 7.7%; Average loss: 3.8458

Iteration: 308; Percent complete: 7.7%; Average loss: 3.9981

Iteration: 309; Percent complete: 7.7%; Average loss: 4.0850

Iteration: 310; Percent complete: 7.8%; Average loss: 3.9528

Iteration: 311; Percent complete: 7.8%; Average loss: 4.1173

Iteration: 312; Percent complete: 7.8%; Average loss: 3.9922

Iteration: 313; Percent complete: 7.8%; Average loss: 3.8504

Iteration: 314; Percent complete: 7.8%; Average loss: 3.8157

Iteration: 315; Percent complete: 7.9%; Average loss: 3.9365

Iteration: 316; Percent complete: 7.9%; Average loss: 4.1556

Iteration: 317; Percent complete: 7.9%; Average loss: 4.0244

Iteration: 318; Percent complete: 8.0%; Average loss: 3.8088

Iteration: 319; Percent complete: 8.0%; Average loss: 3.8671

Iteration: 320; Percent complete: 8.0%; Average loss: 3.6664

Iteration: 321; Percent complete: 8.0%; Average loss: 4.1603

Iteration: 322; Percent complete: 8.1%; Average loss: 3.7484

Iteration: 323; Percent complete: 8.1%; Average loss: 3.6973

Iteration: 324; Percent complete: 8.1%; Average loss: 3.7960

Iteration: 325; Percent complete: 8.1%; Average loss: 4.1484

Iteration: 326; Percent complete: 8.2%; Average loss: 3.8513

Iteration: 327; Percent complete: 8.2%; Average loss: 3.9528

Iteration: 328; Percent complete: 8.2%; Average loss: 4.0718

Iteration: 329; Percent complete: 8.2%; Average loss: 3.7847

Iteration: 330; Percent complete: 8.2%; Average loss: 3.6424

Iteration: 331; Percent complete: 8.3%; Average loss: 3.9672

Iteration: 332; Percent complete: 8.3%; Average loss: 4.2551

Iteration: 333; Percent complete: 8.3%; Average loss: 3.8377

Iteration: 334; Percent complete: 8.3%; Average loss: 3.7914

Iteration: 335; Percent complete: 8.4%; Average loss: 3.7914

Iteration: 336; Percent complete: 8.4%; Average loss: 4.1176

Iteration: 337; Percent complete: 8.4%; Average loss: 3.6615

Iteration: 338; Percent complete: 8.5%; Average loss: 3.7038

Iteration: 339; Percent complete: 8.5%; Average loss: 3.7637

Iteration: 340; Percent complete: 8.5%; Average loss: 4.0111

Iteration: 341; Percent complete: 8.5%; Average loss: 3.6843

Iteration: 342; Percent complete: 8.6%; Average loss: 4.0023

Iteration: 343; Percent complete: 8.6%; Average loss: 3.6993

Iteration: 344; Percent complete: 8.6%; Average loss: 4.0587

Iteration: 345; Percent complete: 8.6%; Average loss: 4.0350

Iteration: 346; Percent complete: 8.6%; Average loss: 3.5817

Iteration: 347; Percent complete: 8.7%; Average loss: 3.9702

Iteration: 348; Percent complete: 8.7%; Average loss: 3.7566

Iteration: 349; Percent complete: 8.7%; Average loss: 3.6596

Iteration: 350; Percent complete: 8.8%; Average loss: 3.8225

Iteration: 351; Percent complete: 8.8%; Average loss: 3.8892

Iteration: 352; Percent complete: 8.8%; Average loss: 4.1239

Iteration: 353; Percent complete: 8.8%; Average loss: 3.7801

Iteration: 354; Percent complete: 8.8%; Average loss: 4.1708

Iteration: 355; Percent complete: 8.9%; Average loss: 3.7441

Iteration: 356; Percent complete: 8.9%; Average loss: 3.8402

Iteration: 357; Percent complete: 8.9%; Average loss: 3.6044

Iteration: 358; Percent complete: 8.9%; Average loss: 3.7062

Iteration: 359; Percent complete: 9.0%; Average loss: 3.7888

Iteration: 360; Percent complete: 9.0%; Average loss: 3.9617

Iteration: 361; Percent complete: 9.0%; Average loss: 3.7303

Iteration: 362; Percent complete: 9.0%; Average loss: 3.9753

Iteration: 363; Percent complete: 9.1%; Average loss: 3.7461

Iteration: 364; Percent complete: 9.1%; Average loss: 3.8818

Iteration: 365; Percent complete: 9.1%; Average loss: 3.6979

Iteration: 366; Percent complete: 9.2%; Average loss: 3.9812

Iteration: 367; Percent complete: 9.2%; Average loss: 3.7647

Iteration: 368; Percent complete: 9.2%; Average loss: 3.7670

Iteration: 369; Percent complete: 9.2%; Average loss: 3.7979

Iteration: 370; Percent complete: 9.2%; Average loss: 3.7923

Iteration: 371; Percent complete: 9.3%; Average loss: 4.3305

Iteration: 372; Percent complete: 9.3%; Average loss: 3.7388

Iteration: 373; Percent complete: 9.3%; Average loss: 4.0242

Iteration: 374; Percent complete: 9.3%; Average loss: 3.8018

Iteration: 375; Percent complete: 9.4%; Average loss: 3.9413

Iteration: 376; Percent complete: 9.4%; Average loss: 3.7064

Iteration: 377; Percent complete: 9.4%; Average loss: 3.9064

Iteration: 378; Percent complete: 9.4%; Average loss: 3.6743

Iteration: 379; Percent complete: 9.5%; Average loss: 3.6788

Iteration: 380; Percent complete: 9.5%; Average loss: 3.7493

Iteration: 381; Percent complete: 9.5%; Average loss: 3.4731

Iteration: 382; Percent complete: 9.6%; Average loss: 3.9488

Iteration: 383; Percent complete: 9.6%; Average loss: 3.8631

Iteration: 384; Percent complete: 9.6%; Average loss: 4.1793

Iteration: 385; Percent complete: 9.6%; Average loss: 3.4189

Iteration: 386; Percent complete: 9.7%; Average loss: 3.8420

Iteration: 387; Percent complete: 9.7%; Average loss: 4.0068

Iteration: 388; Percent complete: 9.7%; Average loss: 3.9350

Iteration: 389; Percent complete: 9.7%; Average loss: 3.6937

Iteration: 390; Percent complete: 9.8%; Average loss: 3.7071

Iteration: 391; Percent complete: 9.8%; Average loss: 3.9744

Iteration: 392; Percent complete: 9.8%; Average loss: 3.9900

Iteration: 393; Percent complete: 9.8%; Average loss: 3.6713

Iteration: 394; Percent complete: 9.8%; Average loss: 3.9216

Iteration: 395; Percent complete: 9.9%; Average loss: 3.7827

Iteration: 396; Percent complete: 9.9%; Average loss: 3.8361

Iteration: 397; Percent complete: 9.9%; Average loss: 3.8758

Iteration: 398; Percent complete: 10.0%; Average loss: 3.8970

Iteration: 399; Percent complete: 10.0%; Average loss: 3.8781

Iteration: 400; Percent complete: 10.0%; Average loss: 4.1731

Iteration: 401; Percent complete: 10.0%; Average loss: 3.8854

Iteration: 402; Percent complete: 10.1%; Average loss: 3.5846

Iteration: 403; Percent complete: 10.1%; Average loss: 3.7838

Iteration: 404; Percent complete: 10.1%; Average loss: 3.7122

Iteration: 405; Percent complete: 10.1%; Average loss: 3.6550

Iteration: 406; Percent complete: 10.2%; Average loss: 3.8002

Iteration: 407; Percent complete: 10.2%; Average loss: 3.8032

Iteration: 408; Percent complete: 10.2%; Average loss: 3.9027

Iteration: 409; Percent complete: 10.2%; Average loss: 3.8555

Iteration: 410; Percent complete: 10.2%; Average loss: 4.0888

Iteration: 411; Percent complete: 10.3%; Average loss: 3.8975

Iteration: 412; Percent complete: 10.3%; Average loss: 3.7005

Iteration: 413; Percent complete: 10.3%; Average loss: 3.7737

Iteration: 414; Percent complete: 10.3%; Average loss: 3.8863

Iteration: 415; Percent complete: 10.4%; Average loss: 4.1185

Iteration: 416; Percent complete: 10.4%; Average loss: 3.7875

Iteration: 417; Percent complete: 10.4%; Average loss: 3.7938

Iteration: 418; Percent complete: 10.4%; Average loss: 3.6301

Iteration: 419; Percent complete: 10.5%; Average loss: 4.0663

Iteration: 420; Percent complete: 10.5%; Average loss: 3.7130

Iteration: 421; Percent complete: 10.5%; Average loss: 3.9950

Iteration: 422; Percent complete: 10.5%; Average loss: 3.9411

Iteration: 423; Percent complete: 10.6%; Average loss: 3.6338

Iteration: 424; Percent complete: 10.6%; Average loss: 3.8034

Iteration: 425; Percent complete: 10.6%; Average loss: 3.7852

Iteration: 426; Percent complete: 10.7%; Average loss: 3.4949

Iteration: 427; Percent complete: 10.7%; Average loss: 3.9568

Iteration: 428; Percent complete: 10.7%; Average loss: 4.0003

Iteration: 429; Percent complete: 10.7%; Average loss: 3.7094

Iteration: 430; Percent complete: 10.8%; Average loss: 3.7977

Iteration: 431; Percent complete: 10.8%; Average loss: 3.7829

Iteration: 432; Percent complete: 10.8%; Average loss: 3.8627

Iteration: 433; Percent complete: 10.8%; Average loss: 3.7359

Iteration: 434; Percent complete: 10.8%; Average loss: 3.7843

Iteration: 435; Percent complete: 10.9%; Average loss: 3.9011

Iteration: 436; Percent complete: 10.9%; Average loss: 3.6779

Iteration: 437; Percent complete: 10.9%; Average loss: 3.7558

Iteration: 438; Percent complete: 10.9%; Average loss: 3.9018

Iteration: 439; Percent complete: 11.0%; Average loss: 3.5099

Iteration: 440; Percent complete: 11.0%; Average loss: 4.0219

Iteration: 441; Percent complete: 11.0%; Average loss: 3.7284

Iteration: 442; Percent complete: 11.1%; Average loss: 3.8568

Iteration: 443; Percent complete: 11.1%; Average loss: 3.8547

Iteration: 444; Percent complete: 11.1%; Average loss: 3.7587

Iteration: 445; Percent complete: 11.1%; Average loss: 4.0258

Iteration: 446; Percent complete: 11.2%; Average loss: 3.9936

Iteration: 447; Percent complete: 11.2%; Average loss: 3.6567

Iteration: 448; Percent complete: 11.2%; Average loss: 3.9529

Iteration: 449; Percent complete: 11.2%; Average loss: 3.5445

Iteration: 450; Percent complete: 11.2%; Average loss: 4.0308

Iteration: 451; Percent complete: 11.3%; Average loss: 3.6604

Iteration: 452; Percent complete: 11.3%; Average loss: 4.0699

Iteration: 453; Percent complete: 11.3%; Average loss: 3.7300

Iteration: 454; Percent complete: 11.3%; Average loss: 3.5589

Iteration: 455; Percent complete: 11.4%; Average loss: 3.6280

Iteration: 456; Percent complete: 11.4%; Average loss: 3.7808

Iteration: 457; Percent complete: 11.4%; Average loss: 3.6342

Iteration: 458; Percent complete: 11.5%; Average loss: 3.7980

Iteration: 459; Percent complete: 11.5%; Average loss: 3.8012

Iteration: 460; Percent complete: 11.5%; Average loss: 3.6375

Iteration: 461; Percent complete: 11.5%; Average loss: 3.7269

Iteration: 462; Percent complete: 11.6%; Average loss: 3.7273

Iteration: 463; Percent complete: 11.6%; Average loss: 3.5827

Iteration: 464; Percent complete: 11.6%; Average loss: 3.7723

Iteration: 465; Percent complete: 11.6%; Average loss: 3.6171

Iteration: 466; Percent complete: 11.7%; Average loss: 3.7302

Iteration: 467; Percent complete: 11.7%; Average loss: 3.7993

Iteration: 468; Percent complete: 11.7%; Average loss: 3.4514

Iteration: 469; Percent complete: 11.7%; Average loss: 3.7413

Iteration: 470; Percent complete: 11.8%; Average loss: 3.7956

Iteration: 471; Percent complete: 11.8%; Average loss: 4.0601

Iteration: 472; Percent complete: 11.8%; Average loss: 3.6446

Iteration: 473; Percent complete: 11.8%; Average loss: 3.8909

Iteration: 474; Percent complete: 11.8%; Average loss: 4.0277

Iteration: 475; Percent complete: 11.9%; Average loss: 3.7424

Iteration: 476; Percent complete: 11.9%; Average loss: 3.8220

Iteration: 477; Percent complete: 11.9%; Average loss: 3.7258

Iteration: 478; Percent complete: 11.9%; Average loss: 3.6878

Iteration: 479; Percent complete: 12.0%; Average loss: 3.6235

Iteration: 480; Percent complete: 12.0%; Average loss: 3.7311

Iteration: 481; Percent complete: 12.0%; Average loss: 3.7833

Iteration: 482; Percent complete: 12.0%; Average loss: 3.6307

Iteration: 483; Percent complete: 12.1%; Average loss: 3.7141

Iteration: 484; Percent complete: 12.1%; Average loss: 3.7625

Iteration: 485; Percent complete: 12.1%; Average loss: 3.7906

Iteration: 486; Percent complete: 12.2%; Average loss: 3.6643

Iteration: 487; Percent complete: 12.2%; Average loss: 3.8613

Iteration: 488; Percent complete: 12.2%; Average loss: 3.9939

Iteration: 489; Percent complete: 12.2%; Average loss: 3.7486

Iteration: 490; Percent complete: 12.2%; Average loss: 3.7170

Iteration: 491; Percent complete: 12.3%; Average loss: 3.5677

Iteration: 492; Percent complete: 12.3%; Average loss: 3.6018

Iteration: 493; Percent complete: 12.3%; Average loss: 3.6524

Iteration: 494; Percent complete: 12.3%; Average loss: 3.7476

Iteration: 495; Percent complete: 12.4%; Average loss: 3.5455

Iteration: 496; Percent complete: 12.4%; Average loss: 3.3100

Iteration: 497; Percent complete: 12.4%; Average loss: 3.7155

Iteration: 498; Percent complete: 12.4%; Average loss: 3.3803

Iteration: 499; Percent complete: 12.5%; Average loss: 3.6765

Iteration: 500; Percent complete: 12.5%; Average loss: 3.7840

Iteration: 501; Percent complete: 12.5%; Average loss: 3.5825

Iteration: 502; Percent complete: 12.6%; Average loss: 3.7416

Iteration: 503; Percent complete: 12.6%; Average loss: 3.4979

Iteration: 504; Percent complete: 12.6%; Average loss: 3.7383

Iteration: 505; Percent complete: 12.6%; Average loss: 3.8051

Iteration: 506; Percent complete: 12.7%; Average loss: 3.5802

Iteration: 507; Percent complete: 12.7%; Average loss: 3.7461

Iteration: 508; Percent complete: 12.7%; Average loss: 3.5987

Iteration: 509; Percent complete: 12.7%; Average loss: 3.6375