
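Audio Data Augmentation

Author: Moto Hira

torchaudio provides a variety of ways to augment audio data.

In this tutorial, we look into a way to apply effects, filters, RIR (room impulse response), and codecs.

At the end, we process clean speech so that it sounds like noisy speech over a telephone.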

```python
import torch
import torchaudio
import torchaudio.functional as F

print(torch.__version__)
print(torchaudio.__version__)

import matplotlib.pyplot as plt
```

```
2.10.0.dev20251013+cu126
2.8.0a0+1d65bbe
```

Preparation

First, we import the modules and download the audio assets used in this tutorial.

```python
from IPython.display import Audio
from torchaudio.utils import _download_asset

SAMPLE_WAV = _download_asset("tutorial-assets/steam-train-whistle-daniel_simon.wav")
SAMPLE_RIR = _download_asset("tutorial-assets/Lab41-SRI-VOiCES-rm1-impulse-mc01-stu-clo-8000hz.wav")
SAMPLE_SPEECH = _download_asset("tutorial-assets/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042-8000hz.wav")
SAMPLE_NOISE = _download_asset("tutorial-assets/Lab41-SRI-VOiCES-rm1-babb-mc01-stu-clo-8000hz.wav")
```


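Loading the data

```python
# channels_first=False returns the waveform with shape (frames, channels).
waveform1, sample_rate = torchaudio.load(SAMPLE_WAV, channels_first=False)
print(waveform1.shape, sample_rate)
```

```
torch.Size([109368, 2]) 44100
```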

Let's plot and listen to the audio. First, we define helper functions for plotting waveforms and spectrograms.

```python
def plot_waveform(waveform, sample_rate, title="Waveform", xlim=None):
    # Expects a tensor of shape (channels, frames).
    waveform = waveform.numpy()

    num_channels, num_frames = waveform.shape
    time_axis = torch.arange(0, num_frames) / sample_rate

    figure, axes = plt.subplots(num_channels, 1)
    if num_channels == 1:
        axes = [axes]
    for c in range(num_channels):
        axes[c].plot(time_axis, waveform[c], linewidth=1)
        axes[c].grid(True)
        if num_channels > 1:
            axes[c].set_ylabel(f"Channel {c+1}")
        if xlim:
            axes[c].set_xlim(xlim)
    figure.suptitle(title)


def plot_specgram(waveform, sample_rate, title="Spectrogram", xlim=None):
    # Expects a tensor of shape (channels, frames).
    waveform = waveform.numpy()

    num_channels, _ = waveform.shape

    figure, axes = plt.subplots(num_channels, 1)
    if num_channels == 1:
        axes = [axes]
    for c in range(num_channels):
        axes[c].specgram(waveform[c], Fs=sample_rate)
        if num_channels > 1:
            axes[c].set_ylabel(f"Channel {c+1}")
        if xlim:
            axes[c].set_xlim(xlim)
    figure.suptitle(title)
```

```python
# The helpers expect (channels, frames), so transpose the (frames, channels) tensor.
plot_waveform(waveform1.T, sample_rate, title="Original", xlim=(-0.1, 3.2))
plot_specgram(waveform1.T, sample_rate, title="Original", xlim=(0, 3.04))
Audio(waveform1.T, rate=sample_rate)
```

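Simulating room reverberation

Convolution reverb is a technique used to make clean audio sound as though it had been recorded in a different environment.

For example, using a room impulse response (RIR), we can make clean speech sound as though it had been spoken in a conference room.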
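Mathematically, convolution reverb is a discrete convolution of the dry signal with the RIR. Writing $x$ for the dry signal ($N$ samples) and $h$ for the RIR ($M$ samples), notation introduced here only for illustration, the "full" output is:

$$ y[n] = (x * h)[n] = \sum_{k=0}^{M-1} h[k]\, x[n-k], \qquad n = 0, 1, \dots, N + M - 2 $$

where $x[i]$ is taken to be $0$ outside $0 \le i \le N-1$. Each output sample is a weighted sum of the current and past input samples, with the RIR supplying the weights, and the output is $M - 1$ samples longer than the input.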

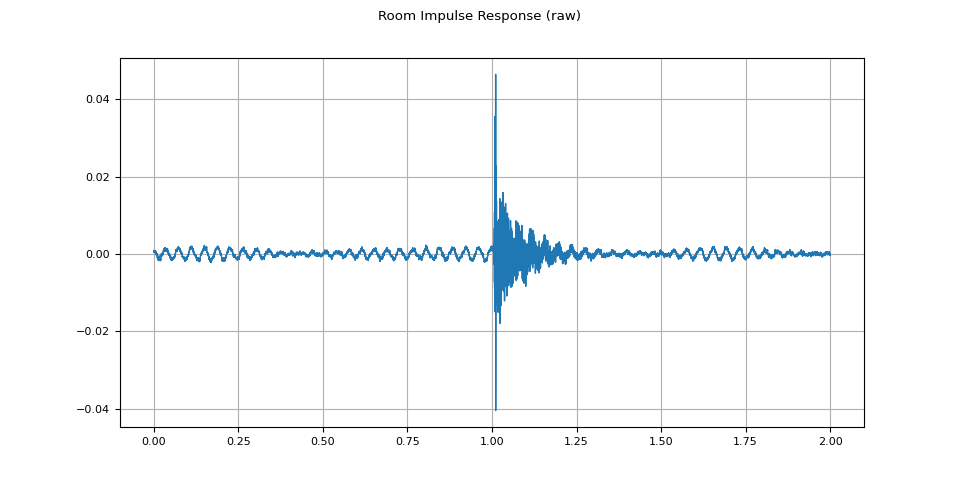

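For this process, we need RIR data. The following data come from the VOiCES dataset, but you can record your own: just turn on your microphone and clap your hands.

```python
rir_raw, sample_rate = torchaudio.load(SAMPLE_RIR)
```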

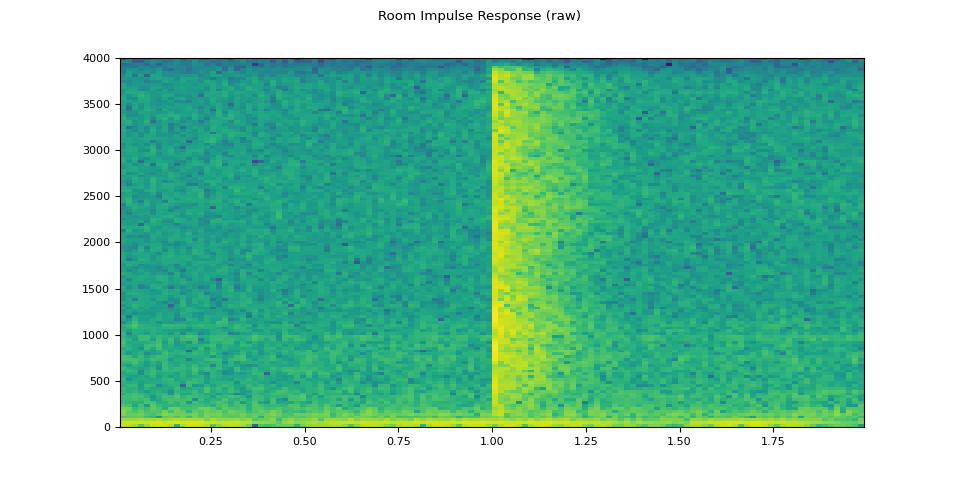

```python
plot_waveform(rir_raw, sample_rate, title="Room Impulse Response (raw)")
plot_specgram(rir_raw, sample_rate, title="Room Impulse Response (raw)")
Audio(rir_raw, rate=sample_rate)
```

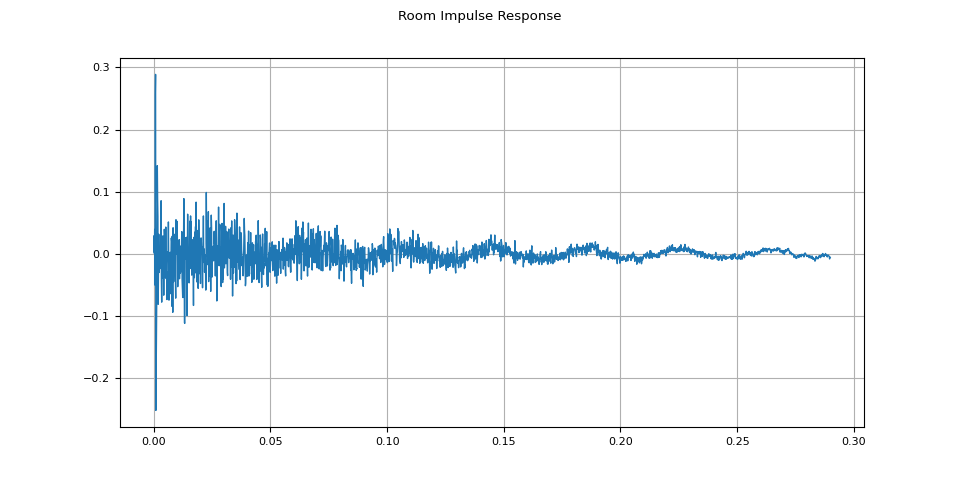

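First, we need to clean up the RIR. We extract the main impulse (the segment between 1.01 s and 1.3 s) and normalize it by its L2 norm, so that the resulting filter has unit energy.

```python
# Keep only the main impulse, from 1.01 s to 1.3 s.
rir = rir_raw[:, int(sample_rate * 1.01) : int(sample_rate * 1.3)]
# Normalize to unit L2 norm.
rir = rir / torch.linalg.vector_norm(rir, ord=2)

plot_waveform(rir, sample_rate, title="Room Impulse Response")
```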
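To see what the two cleanup steps do in isolation, here is a minimal sketch on a synthetic stand-in for `rir_raw`: one second of silence followed by an exponentially decaying noise burst. The 8 kHz rate and the 50 ms decay constant are assumptions chosen for illustration, not properties of the VOiCES recording. The slice keeps the 1.01 s to 1.3 s window, and dividing by the L2 norm leaves a filter whose squared samples sum to 1, so the RIR's gain no longer depends on how loud the original recording was.

```python
import torch

sample_rate = 8000  # assumed: matches the 8 kHz VOiCES assets
torch.manual_seed(0)

# Hypothetical raw RIR: 1 s of silence, then a 0.5 s exponentially decaying burst.
t = torch.arange(sample_rate // 2) / sample_rate
burst = torch.randn(t.numel()) * torch.exp(-t / 0.05)
rir_raw = torch.cat([torch.zeros(sample_rate), burst]).unsqueeze(0)  # (1, frames)

# Same cleanup as the tutorial: keep the 1.01 s to 1.3 s window ...
rir = rir_raw[:, int(sample_rate * 1.01) : int(sample_rate * 1.3)]
# ... and scale it to unit L2 norm (unit energy).
rir = rir / torch.linalg.vector_norm(rir, ord=2)

print(rir.shape)         # torch.Size([1, 2320])
print(rir.pow(2).sum())  # close to 1.0
```

At 8 kHz the window spans samples 8080 to 10400, i.e. 2320 samples (0.29 s).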

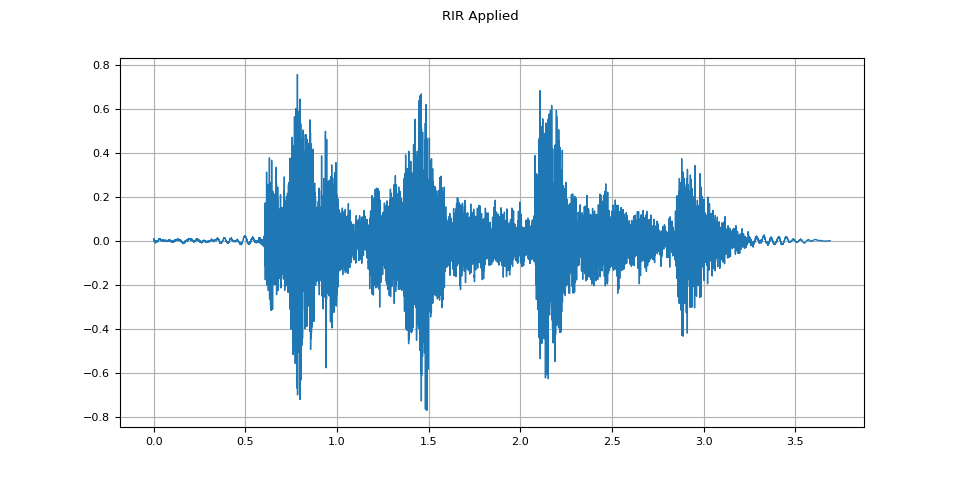

Then, using torchaudio.functional.fftconvolve(), we convolve the speech signal with the RIR.

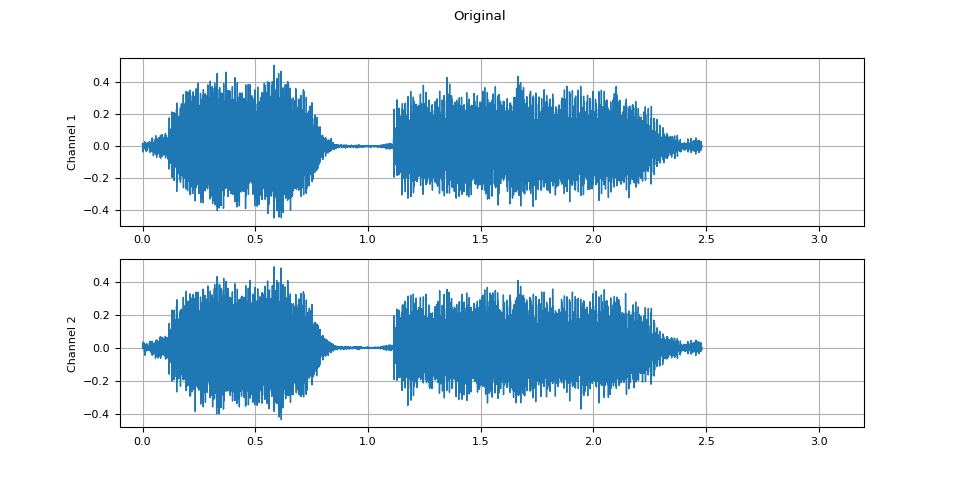

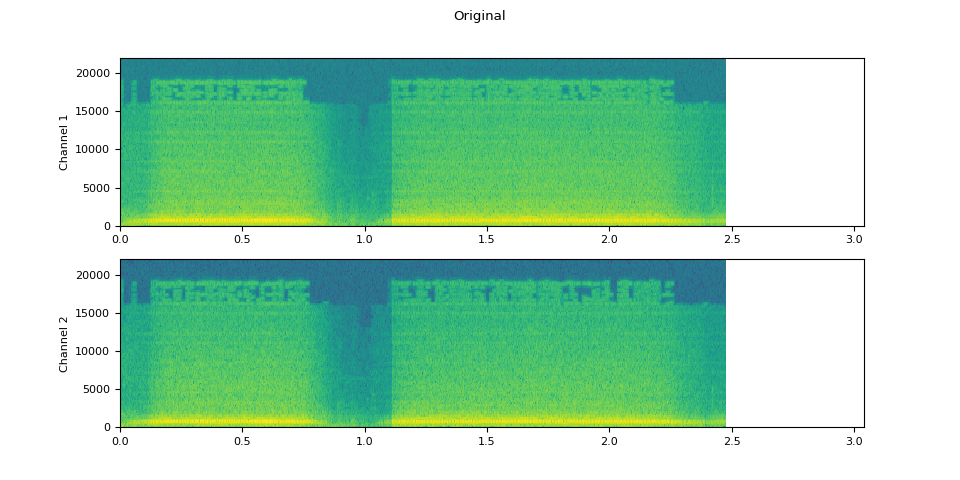

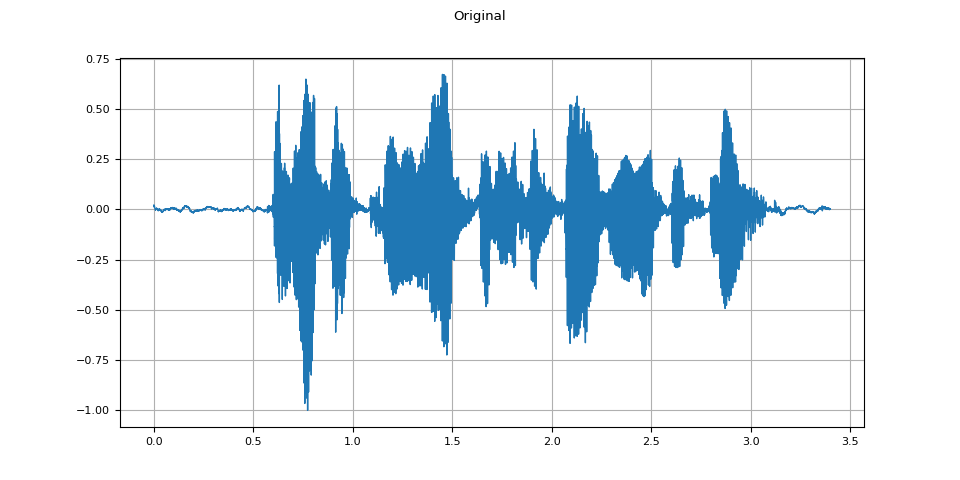

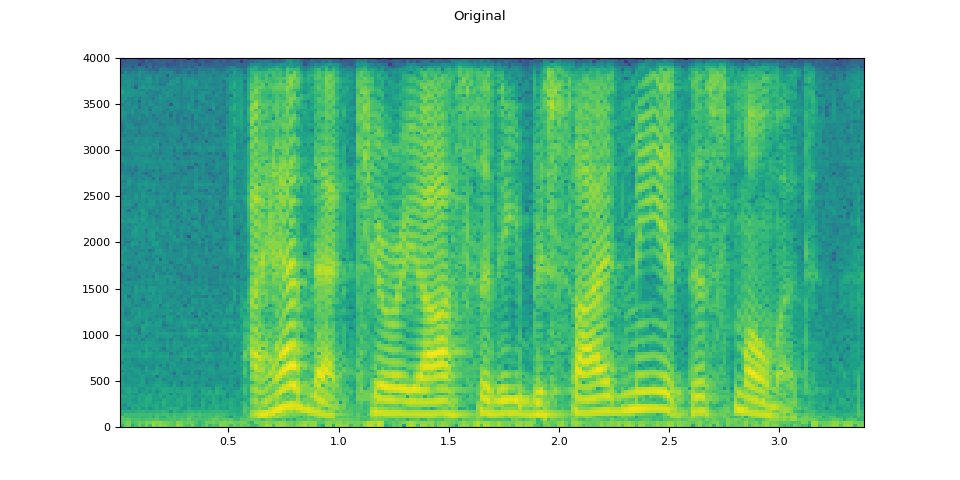

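Original

```python
# Load the clean speech sample (8 kHz, the same rate as the RIR).
speech, _ = torchaudio.load(SAMPLE_SPEECH)

plot_waveform(speech, sample_rate, title="Original")
plot_specgram(speech, sample_rate, title="Original")
Audio(speech, rate=sample_rate)
```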

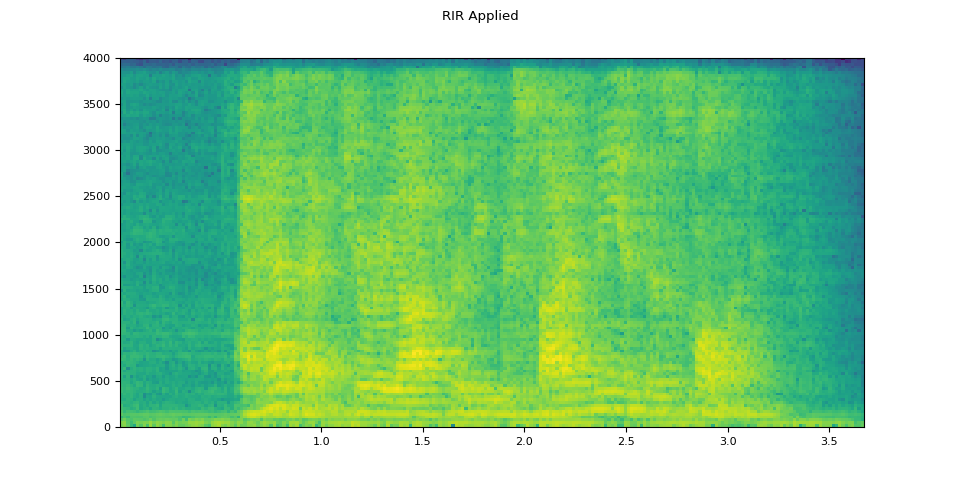

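RIR applied

```python
# Convolve the speech with the normalized RIR.
augmented = F.fftconvolve(speech, rir)

plot_waveform(augmented, sample_rate, title="RIR Applied")
plot_specgram(augmented, sample_rate, title="RIR Applied")
Audio(augmented, rate=sample_rate)
```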

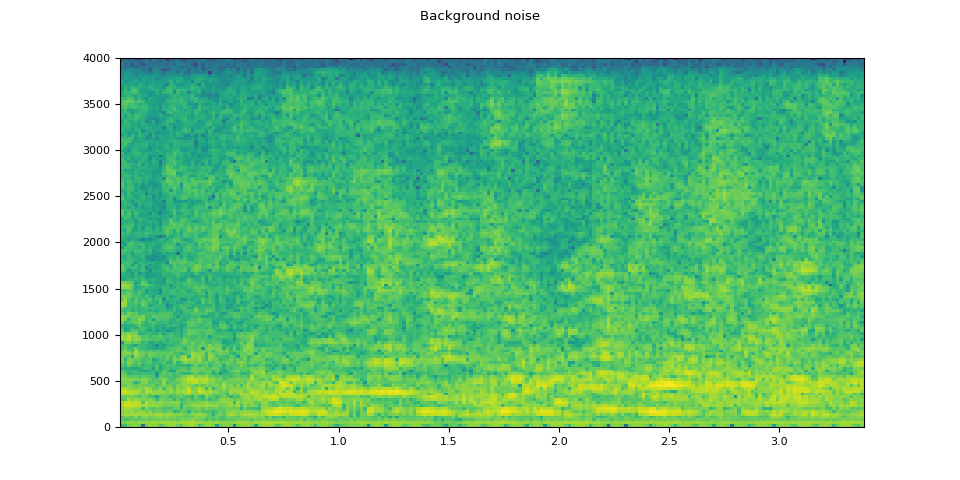

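Adding background noise

To introduce background noise to audio data, we can add a noise Tensor to the Tensor representing the audio data, scaled according to a desired signal-to-noise ratio (SNR) [wikipedia]. The SNR determines the intensity of the audio data relative to that of the noise in the output.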

$$ \mathrm{SNR} = \frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}} $$

$$ \mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10} \mathrm{SNR} $$
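Inverting the second formula gives the linear power ratio for each target SNR used below, which is a convenient way to read the dB values:

$$ \mathrm{SNR} = 10^{\mathrm{SNR}_{\mathrm{dB}}/10}: \qquad 20\ \mathrm{dB} \mapsto 100, \qquad 10\ \mathrm{dB} \mapsto 10, \qquad 3\ \mathrm{dB} \mapsto 10^{0.3} \approx 2 $$

So at 3 dB the speech carries only about twice the power of the noise.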

To add noise to audio data at the desired SNRs, we use torchaudio.functional.add_noise().

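```python
speech, _ = torchaudio.load(SAMPLE_SPEECH)
noise, _ = torchaudio.load(SAMPLE_NOISE)
# Trim the noise to the same number of frames as the speech.
noise = noise[:, : speech.shape[1]]

# One target SNR (in dB) per output; the result has shape (3, frames).
snr_dbs = torch.tensor([20, 10, 3])
noisy_speeches = F.add_noise(speech, noise, snr_dbs)
```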

Background noise

```python
plot_waveform(noise, sample_rate, title="Background noise")
plot_specgram(noise, sample_rate, title="Background noise")
Audio(noise, rate=sample_rate)
```

SNR 20 dB

```python
# Slice with 0:1 rather than 0 to keep the channel dimension the plot helpers expect.
snr_db, noisy_speech = snr_dbs[0], noisy_speeches[0:1]
plot_waveform(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
plot_specgram(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
Audio(noisy_speech, rate=sample_rate)
```

SNR 10 dB

```python
snr_db, noisy_speech = snr_dbs[1], noisy_speeches[1:2]
plot_waveform(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
plot_specgram(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
Audio(noisy_speech, rate=sample_rate)
```

SNR 3 dB

```python
snr_db, noisy_speech = snr_dbs[2], noisy_speeches[2:3]
plot_waveform(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
plot_specgram(noisy_speech, sample_rate, title=f"SNR: {snr_db} [dB]")
Audio(noisy_speech, rate=sample_rate)
```

Total running time of the script: (0 minutes 7.726 seconds)

![SNR: 20 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_012.png)

![SNR: 20 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_013.png)

![SNR: 10 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_014.png)

![SNR: 10 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_015.png)

![SNR: 3 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_016.png)

![SNR: 3 [dB]](../_images/sphx_glr_audio_data_augmentation_tutorial_017.png)