Pendulum: Writing your environment and transforms with TorchRL#

Created on: Nov 09, 2023 | Last updated: Jan 27, 2025 | Last verified: Nov 05, 2024

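Creating an environment (a simulator or an interface to a physical control system) is an integral part of reinforcement learning and control engineering.

TorchRL provides a set of tools to do this in multiple contexts. This tutorial demonstrates how to use PyTorch and TorchRL to code a pendulum simulator from the ground up. It is freely inspired by the Pendulum-v1 implementation from the OpenAI-Gym/Farama-Gymnasium control library.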

Figure: Simple Pendulum

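Key learnings:

How to design an environment in TorchRL: writing specs (input, observation and reward), and implementing behavior (seeding, reset and step);

How to transform your environment inputs and outputs, and write your own transforms;

How to use TensorDict to carry arbitrary data structures through the codebase.

In the process, we will touch on three crucial components of TorchRL: environments, transforms and models (policy and value function).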

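To give a sense of what can be achieved with TorchRL's environments, we will design a stateless environment. While stateful environments keep track of the latest physical state encountered and rely on it to simulate the state-to-state transition, stateless environments expect the current state to be provided to them at each step, along with the action undertaken. TorchRL supports both types of environments, but stateless environments are more generic and hence cover a broader range of features of the environment API in TorchRL.

Modeling stateless environments gives users full control over the inputs and outputs of the simulator: one can reset an experiment at any stage or actively modify the dynamics from the outside. However, it assumes that we have some control over the task, which may not always be the case: solving a problem where we cannot control the current state is more challenging but has a much wider set of applications.

Another advantage of stateless environments is that they can enable batched execution of transition simulations. If the backend and the implementation allow it, an algebraic operation can be executed seamlessly on scalars, vectors or tensors. This tutorial gives such examples.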
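To make the stateful/stateless distinction concrete, here is a minimal pure-Python sketch. It does not use TorchRL: `stateless_step` and `StatefulPendulum` are hypothetical names introduced for illustration, and the constants 15.0 and 3.0 assume g=10, L=1 and m=1 in the pendulum dynamics used later in this tutorial.

```python
import math

DT = 0.05  # integration step (assumed; same value as the tutorial's default "dt")


def stateless_step(state, action):
    """Pure function: the caller provides the current state and the action."""
    th, thdot = state
    # 15.0 = 3g/(2L) and 3.0 = 3/(m L^2) under the assumed g=10, L=1, m=1
    new_thdot = thdot + (15.0 * math.sin(th) + 3.0 * action) * DT
    new_th = th + new_thdot * DT
    return (new_th, new_thdot)


class StatefulPendulum:
    """Keeps the latest state internally; callers only provide the action."""

    def __init__(self, th, thdot):
        self.state = (th, thdot)

    def step(self, action):
        self.state = stateless_step(self.state, action)
        return self.state


start = (0.1, 0.0)
env = StatefulPendulum(*start)
stateful_result = env.step(1.0)
stateless_result = stateless_step(start, 1.0)
# The stateless version can be replayed from any state at any time.
replayed = stateless_step(start, 1.0)
```

Both versions share the same dynamics; what differs is who owns the state. The stateless function can be re-evaluated from `start` as often as needed, whereas the stateful object has already moved on after its first step.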

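This tutorial is structured as follows:

We first get acquainted with the environment properties: its shape (batch_size), its methods (mainly step(), reset() and set_seed()) and finally its specs.

After having coded our simulator, we demonstrate how it can be used during training with transforms.

We explore new avenues that follow from TorchRL's API, including the possibility of transforming inputs, the vectorized execution of the simulation and the possibility of backpropagating through the simulation graph.

Finally, we train a simple policy to solve the system we implemented.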

from collections import defaultdict

from typing import Optional

import numpy as np

import torch

import tqdm

from tensordict import TensorDict, TensorDictBase

from tensordict.nn import TensorDictModule

from torch import nn

from torchrl.data import BoundedTensorSpec, CompositeSpec, UnboundedContinuousTensorSpec

from torchrl.envs import (

CatTensors,

EnvBase,

Transform,

TransformedEnv,

UnsqueezeTransform,

)

from torchrl.envs.transforms.transforms import _apply_to_composite

from torchrl.envs.utils import check_env_specs, step_mdp

DEFAULT_X = np.pi

DEFAULT_Y = 1.0

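There are four things you must take care of when designing a new environment class:

EnvBase._reset(), which codes for the resetting of the simulator at a (potentially random) initial state;

EnvBase._step(), which codes for the state transition dynamics;

EnvBase._set_seed(), which implements the seeding mechanism;

the environment specs.

Let us first describe the problem at hand: we would like to model a simple pendulum over which we can control the torque applied on its fixed point. Our goal is to place the pendulum in the upward position (angular position at 0 by convention) and have it stand still in that position. To design our dynamic system, we need to define two equations: the motion equation following an action (the torque applied) and the reward equation that constitutes our objective function.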

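For the motion equation, we update the angular velocity following:

\[\dot{\theta}_{t+1} = \dot{\theta}_t + \left(\frac{3g}{2L} \sin(\theta_t) + \frac{3}{mL^2} u\right) dt\]

where \(\dot{\theta}\) is the angular velocity in rad/sec, \(g\) is the gravitational force, \(L\) is the pendulum length, \(m\) is its mass, \(\theta\) is its angular position and \(u\) is the torque. The angular position is then updated according to:

\[\theta_{t+1} = \theta_t + \dot{\theta}_{t+1} dt\]

We define our reward as:

\[r = -(\theta^2 + 0.1 \dot{\theta}^2 + 0.001 u^2)\]

which is maximized when the angle is close to 0 (pendulum in upward position), the angular velocity is close to 0 (no motion) and the torque is 0 too.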
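As a worked instance of the update rule and reward described here, the following pure-Python sketch performs a single Euler step with the coefficients that the tutorial's `_step()` uses. The function name `pendulum_step` and the default values (g=10, m=1, L=1, dt=0.05) are assumptions taken from the tutorial's default parameters; angle normalization and the torque and speed clamping are deliberately left out to keep the arithmetic visible.

```python
import math


def pendulum_step(th, thdot, u, g=10.0, m=1.0, length=1.0, dt=0.05):
    """One explicit Euler step of the pendulum, returning (new_th, new_thdot, reward)."""
    # The cost is computed from the state and action *before* the update.
    cost = th**2 + 0.1 * thdot**2 + 0.001 * u**2
    new_thdot = thdot + (3 * g / (2 * length) * math.sin(th) + 3.0 / (m * length**2) * u) * dt
    new_th = th + new_thdot * dt
    return new_th, new_thdot, -cost


# Pendulum horizontal (theta = pi/2), at rest, no torque applied.
new_th, new_thdot, reward = pendulum_step(th=math.pi / 2, thdot=0.0, u=0.0)
# new_thdot = (3 * 10 / 2) * sin(pi/2) * 0.05 = 0.75
# new_th    = pi/2 + 0.75 * 0.05
# reward    = -(pi/2)**2
```

With the pendulum upright and at rest (`th=0`, `thdot=0`, `u=0`) the same function returns an unchanged state and a reward of 0, which is the maximum the reward can take.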

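Coding the effect of an action: _step()#

The step method is the first thing to consider, as it encodes the simulation that is of interest to us. In TorchRL, the EnvBase class has an EnvBase.step() method that receives a tensordict.TensorDict instance with an "action" entry indicating what action is to be taken.

To facilitate reading from and writing to that tensordict, and to make sure that the keys are consistent with what the library expects, the simulation part has been delegated to a private abstract method _step(), which reads input data from a tensordict and writes a new tensordict with the output data.

The _step() method should do the following:

Read the input keys (such as "action") and execute the simulation based on these;

Retrieve observations, done state and reward;

Write the set of observation values along with the reward and done state at the corresponding entries in a new TensorDict.

Next, the step() method merges the output of _step() into the input tensordict to enforce input/output consistency.

Typically, for stateful environments, this looks like this: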

>>> policy(env.reset())

>>> print(tensordict)

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False)

>>> env.step(tensordict)

>>> print(tensordict)

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False)

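Notice that the root tensordict has not changed; the only modification is the appearance of a new "next" entry that contains the new information.

In the Pendulum example, our _step() method reads the relevant entries from the input tensordict and computes the position and velocity of the pendulum after the force encoded by the "action" key has been applied to it. We compute the new angular position of the pendulum, "new_th", as the previous position "th" plus the new velocity "new_thdot" multiplied by the time interval dt.

Since our goal is to turn the pendulum up and keep it still in that position, our cost (negative reward) function is lower for positions close to the target and for low speeds. Indeed, we want to discourage positions that are far from being "upward" and/or speeds that are far from 0.

In our example, EnvBase._step() is encoded as a static method since our environment is stateless. In stateful settings, the self argument is needed as the state needs to be read from the environment.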

def _step(tensordict):
    th, thdot = tensordict["th"], tensordict["thdot"]  # th := theta

    g_force = tensordict["params", "g"]
    mass = tensordict["params", "m"]
    length = tensordict["params", "l"]
    dt = tensordict["params", "dt"]
    u = tensordict["action"].squeeze(-1)
    u = u.clamp(-tensordict["params", "max_torque"], tensordict["params", "max_torque"])
    costs = angle_normalize(th) ** 2 + 0.1 * thdot**2 + 0.001 * (u**2)

    new_thdot = (
        thdot
        + (3 * g_force / (2 * length) * th.sin() + 3.0 / (mass * length**2) * u) * dt
    )
    new_thdot = new_thdot.clamp(
        -tensordict["params", "max_speed"], tensordict["params", "max_speed"]
    )
    new_th = th + new_thdot * dt
    reward = -costs.view(*tensordict.shape, 1)
    done = torch.zeros_like(reward, dtype=torch.bool)
    out = TensorDict(
        {
            "th": new_th,
            "thdot": new_thdot,
            "params": tensordict["params"],
            "reward": reward,
            "done": done,
        },
        tensordict.shape,
    )
    return out


def angle_normalize(x):
    return ((x + torch.pi) % (2 * torch.pi)) - torch.pi
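The `angle_normalize` helper wraps any angle into the interval [-pi, pi), so that the cost penalizes the shortest angular distance to the upward position. The sketch below re-implements the same formula with the standard `math` module (an assumption made to keep the example free of dependencies) and evaluates it on a few angles.

```python
import math


def angle_normalize(x):
    """Wrap an angle in radians into [-pi, pi), mirroring the tutorial's formula."""
    return ((x + math.pi) % (2 * math.pi)) - math.pi


three_quarter_turn = angle_normalize(3 * math.pi / 2)      # wraps to about -pi/2
negative_turn = angle_normalize(-3 * math.pi / 2)          # wraps to about +pi/2
full_turn_plus = angle_normalize(2 * math.pi + 0.25)       # wraps to about 0.25
unchanged = angle_normalize(0.0)                           # already in range
```

Python's `%` operator returns a non-negative result for a positive divisor, which is what makes the formula work for negative inputs as well.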

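Resetting the simulator: _reset()#

The second method we need to care about is the _reset() method. Like _step(), it should write the observation entries and possibly a done state in the tensordict it outputs (if the done state is omitted, it will be filled as False by the parent method reset()). In some contexts, the _reset method is required to receive a command from the function that called it (for example, in multi-agent settings we may want to indicate which agents need to be reset). This is why the _reset() method also expects a tensordict as input, although it may perfectly well be empty or None.

The parent EnvBase.reset() does some simple checks like EnvBase.step() does, such as making sure that a "done" state is returned in the output tensordict and that the shapes match what is expected from the specs.

For us, the only important thing to consider is whether EnvBase._reset() contains all the expected observations. Once more, since we are working with a stateless environment, we pass the configuration of the pendulum in a nested tensordict named "params".

In this example, we do not pass a done state as this is not mandatory for _reset() and our environment is non-terminating, so we always expect it to be False.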

def _reset(self, tensordict):
    if tensordict is None or tensordict.is_empty():
        # if no ``tensordict`` is passed, we generate a single set of hyperparameters
        # Otherwise, we assume that the input ``tensordict`` contains all the relevant
        # parameters to get started.
        tensordict = self.gen_params(batch_size=self.batch_size)

    high_th = torch.tensor(DEFAULT_X, device=self.device)
    high_thdot = torch.tensor(DEFAULT_Y, device=self.device)
    low_th = -high_th
    low_thdot = -high_thdot

    # for non batch-locked environments, the input ``tensordict`` shape dictates the number
    # of simulators run simultaneously. In other contexts, the initial
    # random state's shape will depend upon the environment batch-size instead.
    th = (
        torch.rand(tensordict.shape, generator=self.rng, device=self.device)
        * (high_th - low_th)
        + low_th
    )
    thdot = (
        torch.rand(tensordict.shape, generator=self.rng, device=self.device)
        * (high_thdot - low_thdot)
        + low_thdot
    )
    out = TensorDict(
        {
            "th": th,
            "thdot": thdot,
            "params": tensordict["params"],
        },
        batch_size=tensordict.shape,
    )
    return out

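Environment metadata: env.*_spec#

The specs define the input and output domain of the environment. It is important that the specs accurately define the tensors that will be received at runtime, as they are often used to carry information about environments in multiprocessing and distributed settings. They can also be used to instantiate lazily defined neural networks and test scripts without actually querying the environment (which can be costly with real-world physical systems, for instance).

There are four specs that we must code in our environment:

EnvBase.observation_spec: this is a CompositeSpec instance where each key is an observation (a CompositeSpec can be viewed as a dictionary of specs);

EnvBase.action_spec: it can be any type of spec, but it is required that it corresponds to the "action" entry in the input tensordict;

EnvBase.reward_spec: provides information about the reward space;

EnvBase.done_spec: provides information about the space of the done flag.

TorchRL specs are organized in two general containers: input_spec, which contains the specs of the information that the step function reads (divided between action_spec, containing the action, and state_spec, containing all the rest), and output_spec, which encodes the specs that the step outputs (observation_spec, reward_spec and done_spec). In general, you should not interact directly with output_spec and input_spec but only with their content: observation_spec, reward_spec, done_spec, action_spec and state_spec. The reason is that the specs are organized in a non-trivial way within output_spec and input_spec, and neither of these should be directly modified.

In other words, observation_spec and related properties are convenient shortcuts to the content of the output and input spec containers.

TorchRL offers multiple TensorSpec subclasses to encode the environment's input and output characteristics.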
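To give an intuition for what a spec does, the toy class below describes a bounded scalar domain, can test whether a value belongs to it, and can draw a random sample from it. This is a hypothetical pure-Python stand-in, not TorchRL's implementation: `ToyBoundedSpec` and its methods are names invented for this sketch, and the bounds of 2.0 assume the default maximum torque used later in the tutorial.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyBoundedSpec:
    """Hypothetical stand-in for a bounded spec; NOT a TorchRL class."""

    low: float
    high: float

    def is_in(self, value):
        # True when the value lies inside the closed interval [low, high].
        return self.low <= value <= self.high

    def rand(self, rng):
        # Draw a value from the domain using an explicit random generator.
        return rng.uniform(self.low, self.high)


# Torque limits assumed from the tutorial's default "max_torque" of 2.0.
action_spec = ToyBoundedSpec(low=-2.0, high=2.0)
rng = random.Random(0)
sample = action_spec.rand(rng)
```

The same two capabilities (describing a domain and sampling from it) are what allow tools to generate random actions or validate outputs without querying the environment itself.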

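Spec shape#

The leading dimensions of the environment specs must match the environment batch size. This is done to enforce that every component of an environment (including its transforms) has an accurate representation of the expected input and output shapes. This is something that should be accurately coded in stateful settings.

For non batch-locked environments, such as the one in our example (see below), this is irrelevant as the environment batch size will most likely be empty.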

def _make_spec(self, td_params):
    # Under the hood, this will populate self.output_spec["observation"]
    self.observation_spec = CompositeSpec(
        th=BoundedTensorSpec(
            low=-torch.pi,
            high=torch.pi,
            shape=(),
            dtype=torch.float32,
        ),
        thdot=BoundedTensorSpec(
            low=-td_params["params", "max_speed"],
            high=td_params["params", "max_speed"],
            shape=(),
            dtype=torch.float32,
        ),
        # we need to add the ``params`` to the observation specs, as we want
        # to pass it at each step during a rollout
        params=make_composite_from_td(td_params["params"]),
        shape=(),
    )
    # since the environment is stateless, we expect the previous output as input.
    # For this, ``EnvBase`` expects some state_spec to be available
    self.state_spec = self.observation_spec.clone()
    # action_spec will be automatically wrapped in input_spec when
    # ``self.action_spec = spec`` is called
    self.action_spec = BoundedTensorSpec(
        low=-td_params["params", "max_torque"],
        high=td_params["params", "max_torque"],
        shape=(1,),
        dtype=torch.float32,
    )
    self.reward_spec = UnboundedContinuousTensorSpec(shape=(*td_params.shape, 1))


def make_composite_from_td(td):
    # custom function to convert a ``tensordict`` into a similar spec structure
    # of unbounded values.
    composite = CompositeSpec(
        {
            key: make_composite_from_td(tensor)
            if isinstance(tensor, TensorDictBase)
            else UnboundedContinuousTensorSpec(
                dtype=tensor.dtype, device=tensor.device, shape=tensor.shape
            )
            for key, tensor in td.items()
        },
        shape=td.shape,
    )
    return composite

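Reproducible experiments: seeding#

Seeding an environment is a common operation when initializing an experiment. The only goal of EnvBase._set_seed() is to set the seed of the contained simulator. If possible, this operation should not call reset() or interact with the environment execution. The parent EnvBase.set_seed() method incorporates a mechanism that allows seeding multiple environments with different pseudo-random and reproducible seeds.

def _set_seed(self, seed: Optional[int]):
    rng = torch.manual_seed(seed)
    self.rng = rng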
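The effect of seeding can be checked in isolation. The sketch below uses a dedicated `torch.Generator` rather than the global generator returned by `torch.manual_seed` (a variation chosen here so that the example does not touch global state), and samples initial angles the same way `_reset()` does. The helper name `sample_initial_angle` is hypothetical.

```python
import torch


def sample_initial_angle(rng, shape=()):
    """Draw angles uniformly in [-pi, pi) using an explicit generator."""
    return torch.rand(shape, generator=rng) * (2 * torch.pi) - torch.pi


rng = torch.Generator().manual_seed(0)
first = sample_initial_angle(rng, shape=(4,))

# Re-seeding the same generator replays exactly the same sequence.
rng.manual_seed(0)
second = sample_initial_angle(rng, shape=(4,))
```

Because every random draw goes through the generator object, re-seeding it is enough to reproduce the initial states of an experiment.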

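Wrapping things together: the EnvBase class#

We can finally put the pieces together and design our environment class. The specs initialization needs to be performed during the environment construction, so we must take care of calling the _make_spec() method within PendulumEnv.__init__().

We add a static method PendulumEnv.gen_params() which deterministically generates a set of hyperparameters to be used during execution: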

def gen_params(g=10.0, batch_size=None) -> TensorDictBase:
    """Returns a ``tensordict`` containing the physical parameters such as gravitational force and torque or speed limits."""
    if batch_size is None:
        batch_size = []
    td = TensorDict(
        {
            "params": TensorDict(
                {
                    "max_speed": 8,
                    "max_torque": 2.0,
                    "dt": 0.05,
                    "g": g,
                    "m": 1.0,
                    "l": 1.0,
                },
                [],
            )
        },
        [],
    )
    if batch_size:
        td = td.expand(batch_size).contiguous()
    return td

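We define the environment as non-batch_locked by setting the homonymous attribute to False. This means that we will **not** enforce the input tensordict to have a batch size that matches the one of the environment.

The following code just puts together the pieces we have coded above.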

class PendulumEnv(EnvBase):
    metadata = {
        "render_modes": ["human", "rgb_array"],
        "render_fps": 30,
    }
    batch_locked = False

    def __init__(self, td_params=None, seed=None, device="cpu"):
        if td_params is None:
            td_params = self.gen_params()

        super().__init__(device=device, batch_size=[])
        self._make_spec(td_params)
        if seed is None:
            seed = torch.empty((), dtype=torch.int64).random_().item()
        self.set_seed(seed)

    # Helpers: _make_spec and gen_params
    gen_params = staticmethod(gen_params)
    _make_spec = _make_spec

    # Mandatory methods: _step, _reset and _set_seed
    _reset = _reset
    _step = staticmethod(_step)
    _set_seed = _set_seed

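Testing our environment#

TorchRL provides a simple function check_env_specs() to check that a (transformed) environment has an input/output structure that matches the one dictated by its specs. Let us try it out: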

env = PendulumEnv()

check_env_specs(env)

/usr/local/lib/python3.10/dist-packages/torchrl/data/tensor_specs.py:6911: DeprecationWarning:

The BoundedTensorSpec has been deprecated and will be removed in v0.8. Please use Bounded instead.

/usr/local/lib/python3.10/dist-packages/torchrl/data/tensor_specs.py:6911: DeprecationWarning:

The UnboundedContinuousTensorSpec has been deprecated and will be removed in v0.8. Please use Unbounded instead.

/usr/local/lib/python3.10/dist-packages/torchrl/data/tensor_specs.py:6911: DeprecationWarning:

The CompositeSpec has been deprecated and will be removed in v0.8. Please use Composite instead.

2025-10-15 19:14:56,019 [torchrl][INFO] check_env_specs succeeded! [END]

We can have a look at our specs to get a visual representation of the environment signature:

print("observation_spec:", env.observation_spec)

print("state_spec:", env.state_spec)

print("reward_spec:", env.reward_spec)

observation_spec: CompositeSpec(

th: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

thdot: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

params: CompositeSpec(

max_speed: UnboundedDiscrete(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True)),

device=cpu,

dtype=torch.int64,

domain=discrete),

max_torque: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

dt: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

g: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

m: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

l: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=cpu,

shape=torch.Size([]),

data_cls=None),

device=cpu,

shape=torch.Size([]),

data_cls=None)

state_spec: CompositeSpec(

th: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

thdot: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

params: CompositeSpec(

max_speed: UnboundedDiscrete(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True)),

device=cpu,

dtype=torch.int64,

domain=discrete),

max_torque: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

dt: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

g: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

m: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

l: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=cpu,

shape=torch.Size([]),

data_cls=None),

device=cpu,

shape=torch.Size([]),

data_cls=None)

reward_spec: UnboundedContinuous(

shape=torch.Size([1]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous)

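We can also execute a couple of commands to check that the output structure matches what is expected.

td = env.reset()

print("reset tensordict", td)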

reset tensordict TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

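We can run env.rand_step() to generate an action randomly from the action_spec domain. A tensordict containing the hyperparameters and the current state **must** be passed since our environment is stateless. In stateful contexts, env.rand_step() works perfectly too.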

td = env.rand_step(td)

print("random step tensordict", td)

random step tensordict TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

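Transforming an environment#

Writing environment transforms for stateless simulators is slightly more complicated than for stateful ones: transforming an output entry that needs to be read at the following iteration requires applying the inverse transform before calling step() at the next step. This is an ideal scenario to showcase all the features of TorchRL's transforms!

For instance, in the following transformed environment we unsqueeze the entries ["th", "thdot"] to be able to stack them along the last dimension. We also pass them as in_keys_inv to squeeze them back to their original shape once they are passed as input in the next iteration.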

env = TransformedEnv(

env,

# ``Unsqueeze`` the observations that we will concatenate

UnsqueezeTransform(

dim=-1,

in_keys=["th", "thdot"],

in_keys_inv=["th", "thdot"],

),

)

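Writing custom transforms#

TorchRL's transforms may not cover all the operations one wants to execute after an environment has been executed. Writing a transform does not require much effort. As for the environment design, there are two steps in writing a transform:

Getting the dynamics right (forward and inverse);

Adapting the environment specs.

A transform can be used in two settings: on its own, it can be used as a Module. It can also be used appended to a TransformedEnv. The structure of the class allows customizing the behavior in the different contexts.

A Transform skeleton can be summarized as follows: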

class Transform(nn.Module):
    def forward(self, tensordict):
        ...

    def _apply_transform(self, tensordict):
        ...

    def _step(self, tensordict):
        ...

    def _call(self, tensordict):
        ...

    def inv(self, tensordict):
        ...

    def _inv_apply_transform(self, tensordict):
        ...

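There are three entry points (forward(), _step() and inv()) which all receive tensordict.TensorDict instances. The first two will eventually go through the keys indicated by in_keys and call _apply_transform() on each of them. The results will be written in the entries pointed to by Transform.out_keys if provided (if not, the in_keys will be updated with the transformed values). If inverse transforms need to be executed, a similar data flow is executed but with the Transform.inv() and Transform._inv_apply_transform() methods, across the in_keys_inv and out_keys_inv lists of keys. The following figure summarizes this flow for environments and replay buffers.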

Figure: Transform API
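The in_keys/out_keys data flow described here can be mimicked on plain dictionaries. The class below is a hypothetical illustration, not TorchRL's Transform: `ToyKeyTransform` is an invented name, and it only reproduces the rule that each input key is passed through a function and written to the matching output key (or back in place when no output keys are given).

```python
import math


class ToyKeyTransform:
    """Hypothetical illustration only; this is NOT TorchRL's Transform class."""

    def __init__(self, fn, in_keys, out_keys=None):
        self.fn = fn
        self.in_keys = list(in_keys)
        # Without out_keys, results overwrite the in_keys entries.
        self.out_keys = list(out_keys) if out_keys is not None else list(in_keys)

    def forward(self, data):
        out = dict(data)  # shallow copy, the input dict is left untouched
        for in_key, out_key in zip(self.in_keys, self.out_keys):
            out[out_key] = self.fn(data[in_key])
        return out


to_sin = ToyKeyTransform(math.sin, in_keys=["th"], out_keys=["sin"])
in_place = ToyKeyTransform(math.cos, in_keys=["th"])

sample = {"th": 0.0, "thdot": 1.5}
with_sin = to_sin.forward(sample)       # adds a new "sin" entry
overwritten = in_place.forward(sample)  # replaces the "th" entry
```

Entries that are not listed in the input keys, such as "thdot" here, pass through unchanged.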

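In some cases, a transform will not work on a subset of keys in a unitary manner, but will execute some operation on the parent environment or work with the entire input tensordict. In those cases, the _call() and forward() methods should be re-written, and the _apply_transform() method can be skipped.

Let us code new transforms that compute the sine and cosine values of the position angle, as these values are more useful to us for learning a policy than the raw angle value: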

class SinTransform(Transform):
    def _apply_transform(self, obs: torch.Tensor) -> torch.Tensor:
        return obs.sin()

    # The transform must also modify the data at reset time
    def _reset(
        self, tensordict: TensorDictBase, tensordict_reset: TensorDictBase
    ) -> TensorDictBase:
        return self._call(tensordict_reset)

    # _apply_to_composite will execute the observation spec transform across all
    # in_keys/out_keys pairs and write the result in the observation_spec which
    # is of type ``Composite``
    @_apply_to_composite
    def transform_observation_spec(self, observation_spec):
        return BoundedTensorSpec(
            low=-1,
            high=1,
            shape=observation_spec.shape,
            dtype=observation_spec.dtype,
            device=observation_spec.device,
        )


class CosTransform(Transform):
    def _apply_transform(self, obs: torch.Tensor) -> torch.Tensor:
        return obs.cos()

    # The transform must also modify the data at reset time
    def _reset(
        self, tensordict: TensorDictBase, tensordict_reset: TensorDictBase
    ) -> TensorDictBase:
        return self._call(tensordict_reset)

    # _apply_to_composite will execute the observation spec transform across all
    # in_keys/out_keys pairs and write the result in the observation_spec which
    # is of type ``Composite``
    @_apply_to_composite
    def transform_observation_spec(self, observation_spec):
        return BoundedTensorSpec(
            low=-1,
            high=1,
            shape=observation_spec.shape,
            dtype=observation_spec.dtype,
            device=observation_spec.device,
        )


t_sin = SinTransform(in_keys=["th"], out_keys=["sin"])
t_cos = CosTransform(in_keys=["th"], out_keys=["cos"])
env.append_transform(t_sin)
env.append_transform(t_cos)

TransformedEnv(

env=PendulumEnv(),

transform=Compose(

UnsqueezeTransform(dim=-1, in_keys=['th', 'thdot'], out_keys=['th', 'thdot'], in_keys_inv=['th', 'thdot'], out_keys_inv=['th', 'thdot']),

SinTransform(keys=['th']),

CosTransform(keys=['th'])))

Concatenates the observations onto an "observation" entry. del_keys=False ensures that we keep these values for the next iteration.

cat_transform = CatTensors(

in_keys=["sin", "cos", "thdot"], dim=-1, out_key="observation", del_keys=False

)

env.append_transform(cat_transform)

TransformedEnv(

env=PendulumEnv(),

transform=Compose(

UnsqueezeTransform(dim=-1, in_keys=['th', 'thdot'], out_keys=['th', 'thdot'], in_keys_inv=['th', 'thdot'], out_keys_inv=['th', 'thdot']),

SinTransform(keys=['th']),

CosTransform(keys=['th']),

CatTensors(in_keys=['cos', 'sin', 'thdot'], out_key=observation)))
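The tensor operations behind this chain of transforms (unsqueeze, sine and cosine, concatenation, and the inverse squeeze) can be reproduced with plain PyTorch. This is a sketch of the underlying arithmetic only, under the assumption of a batch of two pendulums; the column order follows the list passed to `torch.cat` here and is not meant to state the order that CatTensors uses internally.

```python
import torch

th = torch.tensor([0.0, torch.pi / 2])  # batch of two angles
thdot = torch.tensor([0.5, -1.0])       # matching angular velocities

# Unsqueeze: add a trailing dimension so entries can be concatenated along it.
th_col = th.unsqueeze(-1)        # shape [2, 1]
thdot_col = thdot.unsqueeze(-1)  # shape [2, 1]

# Sine/cosine features followed by concatenation along the last dimension.
observation = torch.cat([th_col.sin(), th_col.cos(), thdot_col], dim=-1)  # shape [2, 3]

# Inverse of the unsqueeze: recover the shape the simulator expects as input.
restored_th = th_col.squeeze(-1)  # shape [2]
```

The last line is the reason the unsqueezed keys are also registered as inverse keys: the stateless simulator reads "th" and "thdot" back at the next step and expects them without the extra dimension.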

Once more, let us check that our environment specs match what is received:

check_env_specs(env)

2025-10-15 19:14:56,054 [torchrl][INFO] check_env_specs succeeded! [END]

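Executing a rollout#

Executing a rollout is a succession of simple steps:

reset the environment

while some condition is not met:

    compute an action given a policy

    execute a step given this action

    collect the data

    make an MDP step

gather the data and return

These operations have been conveniently wrapped in the rollout() method, of which we provide a simplified version below.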

def simple_rollout(steps=100):
    # preallocate:
    data = TensorDict({}, [steps])
    # reset
    _data = env.reset()
    for i in range(steps):
        _data["action"] = env.action_spec.rand()
        _data = env.step(_data)
        data[i] = _data
        _data = step_mdp(_data, keep_other=True)
    return data


print("data from rollout:", simple_rollout(100))

data from rollout: TensorDict(

fields={

action: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([100, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([100, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False)
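The role of the MDP step in this loop is easier to see on plain dictionaries. In the toy sketch below, `toy_step` writes its results under a "next" key and `toy_step_mdp` promotes that content to the root for the following iteration. Both functions are hypothetical dict analogues written for this illustration; they are not TorchRL's implementations, and dropping the reward when promoting is a simplifying assumption of the sketch.

```python
def toy_step(data):
    """Toy stateless step: reads 'count' and 'action', writes results under 'next'."""
    out = dict(data)
    out["next"] = {"count": data["count"] + data["action"], "reward": -1.0}
    return out


def toy_step_mdp(data):
    """Hypothetical dict analogue of an MDP step: promote 'next' entries to the root."""
    promoted = dict(data["next"])
    promoted.pop("reward", None)  # the reward belongs to the transition just recorded
    return promoted


def toy_rollout(steps):
    trajectory = []
    current = {"count": 0}  # plays the role of the reset output
    for _ in range(steps):
        current["action"] = 1             # stand-in for a policy
        current = toy_step(current)       # root entries plus a "next" entry
        trajectory.append(current)        # collect the transition
        current = toy_step_mdp(current)   # "next" becomes the new root
    return trajectory


trajectory = toy_rollout(3)
```

Each recorded transition keeps both the state it started from (at the root) and the state it led to (under "next"), which matches the layout of the printed rollout.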

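Batching computations#

The last unexplored aspect of our tutorial is the ability to batch computations in TorchRL. Because our environment does not make any assumptions regarding the input data shape, we can seamlessly execute it over batches of data. Even better: for non-batch-locked environments such as our Pendulum, we can change the batch size on the fly without recreating the environment. To do this, we just generate parameters with the desired shape.

batch_size = 10  # number of environments to be executed in batch

td = env.reset(env.gen_params(batch_size=[batch_size]))

print("reset (batch size of 10)", td)

td = env.rand_step(td)

print("rand step (batch size of 10)", td)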

reset (batch size of 10) TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False)

rand step (batch size of 10) TensorDict(

fields={

action: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False)

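Executing a rollout with a batch of data requires us to reset the environment outside of the rollout function, since the batch_size has to be defined dynamically and rollout() does not support this.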

rollout = env.rollout(
    3,
    auto_reset=False,  # we're executing the reset out of the ``rollout`` call
    tensordict=env.reset(env.gen_params(batch_size=[batch_size])),
)
print("rollout of len 3 (batch size of 10):", rollout)

rollout of len 3 (batch size of 10): TensorDict(

fields={

action: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([10, 3, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False)

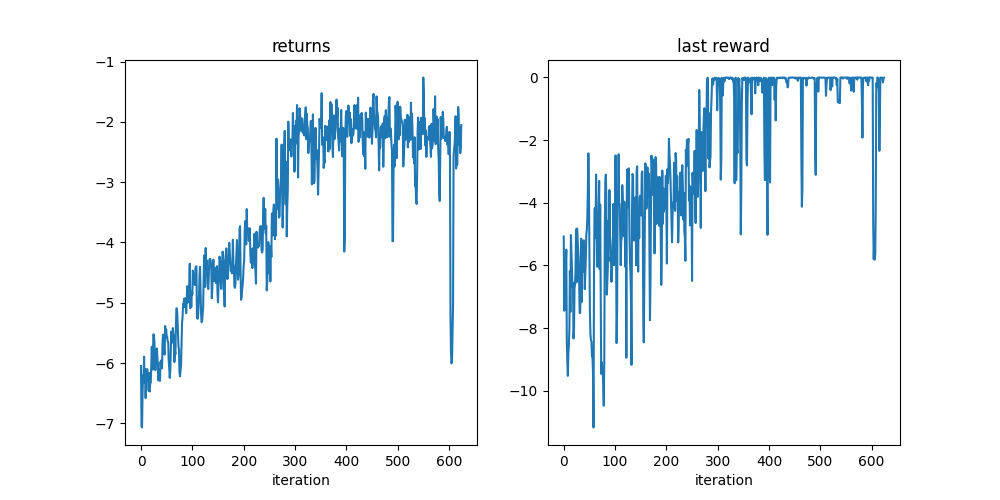

Training a simple policy#

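In this example, we will train a simple policy using the reward as a differentiable objective (that is, as a negative loss). We take advantage of the fact that our dynamic system is fully differentiable: we backpropagate through the trajectory return and adjust the policy weights to maximize it directly. Of course, in many settings the assumptions we make here do not hold, such as a differentiable system and full access to the underlying mechanics.

Still, this is a very simple example that shows how a training loop can be written with a custom environment in TorchRL.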
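As a sketch of what "backpropagating through the trajectory return" means, the objective can be written out explicitly. The notation here is ours, not the tutorial's: \(\phi\) are the policy weights, \(\pi_\phi\) the policy, \(f\) the state transition, \(r\) the reward, \(o_t\) the observation built from the state \(s_t\), \(B\) the batch size and \(T\) the rollout length.

\[
J(\phi) = \frac{1}{B\,T} \sum_{b=1}^{B} \sum_{t=0}^{T-1} r\left(s_t^{(b)}, a_t^{(b)}\right),
\qquad a_t^{(b)} = \pi_\phi\left(o_t^{(b)}\right),
\qquad s_{t+1}^{(b)} = f\left(s_t^{(b)}, a_t^{(b)}\right).
\]

Because \(f\) and \(r\) are composed of differentiable tensor operations, \(\nabla_\phi J\) can be obtained by automatic differentiation through all \(T\) steps, with each action influencing every later state and reward. The training loop in this section minimizes \(-J\), using the mean of the rewards over batch and time.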

Let us first write the policy network:

torch.manual_seed(0)
env.set_seed(0)

net = nn.Sequential(
    nn.LazyLinear(64),
    nn.Tanh(),
    nn.LazyLinear(64),
    nn.Tanh(),
    nn.LazyLinear(64),
    nn.Tanh(),
    nn.LazyLinear(1),
)
policy = TensorDictModule(
    net,
    in_keys=["observation"],
    out_keys=["action"],
)
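To make the role of in_keys and out_keys concrete, here is a framework-free sketch of the idea: a wrapper reads its inputs from a dictionary by key, calls the wrapped function, and writes the result back under the output key. The names DictModule and toy_net are hypothetical and exist only for this illustration; this is not how TensorDictModule is implemented, and plain Python dicts and lists stand in for TensorDict and tensors.

```python
class DictModule:
    """Hypothetical, simplified stand-in for a key-based module wrapper."""

    def __init__(self, fn, in_keys, out_keys):
        self.fn = fn
        self.in_keys = in_keys
        self.out_keys = out_keys

    def __call__(self, data):
        # Read the inputs by key, call the wrapped function, write outputs by key.
        outputs = self.fn(*(data[key] for key in self.in_keys))
        if not isinstance(outputs, tuple):
            outputs = (outputs,)
        for key, value in zip(self.out_keys, outputs):
            data[key] = value
        return data


def toy_net(observation):
    # Toy "network": a fixed linear map from a 3-element observation to one action.
    weights = [0.5, -1.0, 0.25]
    return [sum(w * o for w, o in zip(weights, observation))]


toy_policy = DictModule(toy_net, in_keys=["observation"], out_keys=["action"])
step_data = toy_policy({"observation": [1.0, 0.0, 2.0], "reward": -1.0})
print(step_data)
```

Entries that are not listed in in_keys or out_keys (here, "reward") pass through untouched, which is what lets a policy be dropped into a rollout that carries many other fields.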

and our optimizer:

optim = torch.optim.Adam(policy.parameters(), lr=2e-3)

Training loop#

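We will successively:

Generate a trajectory

Aggregate the rewards (the code below takes their mean)

Backpropagate through the graph defined by these operations

Clip the gradient norm and take an optimization step

Repeat

By the end of the training loop, the last reward should be close to 0, which indicates that the pendulum has reached the desired state: upright and still.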
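The gradient-clipping step listed above can be sketched without any framework. The helper clip_grad_norm below is our own illustrative function operating on plain lists; it follows the behaviour described in the PyTorch documentation for torch.nn.utils.clip_grad_norm_ (rescale all gradients by a common factor so that their joint L2 norm does not exceed max_norm, and return the norm measured before clipping), but it is a simplified sketch, not the library code.

```python
import math


def clip_grad_norm(grads, max_norm, eps=1e-6):
    """Scale a list of gradient vectors so their joint L2 norm is at most max_norm.

    Returns (total_norm_before_clipping, clipped_grads).
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    # The scale factor is capped at 1 so small gradients are left untouched.
    scale = min(1.0, max_norm / (total_norm + eps))
    clipped = [[g * scale for g in vec] for vec in grads]
    return total_norm, clipped


# Hypothetical gradients for two parameter tensors, flattened to lists.
grads = [[3.0, 4.0], [12.0]]
total_norm, clipped = clip_grad_norm(grads, max_norm=1.0)
clipped_norm = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(f"norm before: {total_norm:.3f}, norm after: {clipped_norm:.3f}")
```

Since the returned value is the norm before clipping, the "gradient norm" printed in the progress bar can be far larger than the clipping threshold of 1.0 even though the applied update is bounded.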

batch_size = 32
pbar = tqdm.tqdm(range(20_000 // batch_size))
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optim, 20_000)
logs = defaultdict(list)

for _ in pbar:
    # Reset outside of ``rollout`` so that the batch size can be set dynamically
    init_td = env.reset(env.gen_params(batch_size=[batch_size]))
    # Roll out 100 steps with the policy; the graph is kept for backpropagation
    rollout = env.rollout(100, policy, tensordict=init_td, auto_reset=False)
    # Objective: mean reward over the batch and time dimensions
    traj_return = rollout["next", "reward"].mean()
    # Maximize the return by minimizing its negative
    (-traj_return).backward()
    # ``gn`` is the total gradient norm measured before clipping
    gn = torch.nn.utils.clip_grad_norm_(net.parameters(), 1.0)
    optim.step()
    optim.zero_grad()
    pbar.set_description(
        f"reward: {traj_return: 4.4f}, "
        f"last reward: {rollout[..., -1]['next', 'reward'].mean(): 4.4f}, gradient norm: {gn: 4.4}"
    )
    logs["return"].append(traj_return.item())
    logs["last_reward"].append(rollout[..., -1]["next", "reward"].mean().item())
    scheduler.step()

def plot():
    import matplotlib
    from matplotlib import pyplot as plt

    is_ipython = "inline" in matplotlib.get_backend()
    if is_ipython:
        from IPython import display

    with plt.ion():
        plt.figure(figsize=(10, 5))
        plt.subplot(1, 2, 1)
        plt.plot(logs["return"])
        plt.title("returns")
        plt.xlabel("iteration")
        plt.subplot(1, 2, 2)
        plt.plot(logs["last_reward"])
        plt.title("last reward")
        plt.xlabel("iteration")
        if is_ipython:
            display.display(plt.gcf())
            display.clear_output(wait=True)
        plt.show()


plot()
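The loop above steps a CosineAnnealingLR scheduler once per iteration, with 20_000 passed as its second argument (T_max), while the loop itself runs 20_000 // 32 = 625 iterations. The sketch below evaluates the closed-form cosine schedule given in the PyTorch documentation to show what that implies for the learning rate. The helper cosine_annealed_lr is our own illustrative function, and the comparison with T_max equal to the iteration count is a hypothetical alternative, not something the tutorial does.

```python
import math


def cosine_annealed_lr(step, base_lr, t_max, eta_min=0.0):
    """Closed-form cosine annealing: base_lr at step 0, eta_min at step t_max."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * step / t_max))


base_lr = 2e-3          # learning rate passed to Adam in the tutorial
n_iters = 20_000 // 32  # number of loop iterations (625)

# Schedule as configured in the tutorial (T_max = 20_000).
lr_end_long = cosine_annealed_lr(n_iters, base_lr, t_max=20_000)
# Hypothetical alternative: anneal over exactly the number of iterations.
lr_end_short = cosine_annealed_lr(n_iters, base_lr, t_max=n_iters)

print(f"T_max=20000: lr after {n_iters} steps = {lr_end_long:.6f}")
print(f"T_max={n_iters}: lr after {n_iters} steps = {lr_end_short:.6f}")
```

Under this formula the learning rate stays within 1% of its initial value over the 625 iterations, so the schedule has little effect in this run. The tutorial does not say whether that is intentional.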

0%| | 0/625 [00:00<?, ?it/s]

reward: -6.0488, last reward: -5.0748, gradient norm: 8.519: 0%| | 0/625 [00:00<?, ?it/s]

reward: -6.0488, last reward: -5.0748, gradient norm: 8.519: 0%| | 1/625 [00:00<03:27, 3.01it/s]

reward: -7.0499, last reward: -7.4472, gradient norm: 5.073: 0%| | 1/625 [00:00<03:27, 3.01it/s]

reward: -7.0499, last reward: -7.4472, gradient norm: 5.073: 0%| | 2/625 [00:00<02:41, 3.85it/s]

reward: -7.0685, last reward: -7.0408, gradient norm: 5.552: 0%| | 2/625 [00:00<02:41, 3.85it/s]

reward: -7.0685, last reward: -7.0408, gradient norm: 5.552: 0%| | 3/625 [00:00<02:26, 4.25it/s]

reward: -6.5154, last reward: -5.9086, gradient norm: 2.527: 0%| | 3/625 [00:00<02:26, 4.25it/s]

reward: -6.5154, last reward: -5.9086, gradient norm: 2.527: 1%| | 4/625 [00:00<02:18, 4.47it/s]

reward: -6.2006, last reward: -5.9385, gradient norm: 8.155: 1%| | 4/625 [00:01<02:18, 4.47it/s]

reward: -6.2006, last reward: -5.9385, gradient norm: 8.155: 1%| | 5/625 [00:01<02:14, 4.61it/s]

reward: -6.2568, last reward: -5.4981, gradient norm: 6.223: 1%| | 5/625 [00:01<02:14, 4.61it/s]

reward: -6.2568, last reward: -5.4981, gradient norm: 6.223: 1%| | 6/625 [00:01<02:11, 4.70it/s]

reward: -5.8929, last reward: -8.4491, gradient norm: 4.581: 1%| | 6/625 [00:01<02:11, 4.70it/s]

reward: -5.8929, last reward: -8.4491, gradient norm: 4.581: 1%| | 7/625 [00:01<02:09, 4.76it/s]

reward: -6.3233, last reward: -9.0664, gradient norm: 7.596: 1%| | 7/625 [00:01<02:09, 4.76it/s]

reward: -6.3233, last reward: -9.0664, gradient norm: 7.596: 1%|▏ | 8/625 [00:01<02:08, 4.80it/s]

reward: -6.1021, last reward: -9.5263, gradient norm: 0.9579: 1%|▏ | 8/625 [00:01<02:08, 4.80it/s]

reward: -6.1021, last reward: -9.5263, gradient norm: 0.9579: 1%|▏ | 9/625 [00:01<02:07, 4.83it/s]

reward: -6.5807, last reward: -8.8075, gradient norm: 3.212: 1%|▏ | 9/625 [00:02<02:07, 4.83it/s]

reward: -6.5807, last reward: -8.8075, gradient norm: 3.212: 2%|▏ | 10/625 [00:02<02:06, 4.85it/s]

reward: -6.2009, last reward: -8.5525, gradient norm: 2.914: 2%|▏ | 10/625 [00:02<02:06, 4.85it/s]

reward: -6.2009, last reward: -8.5525, gradient norm: 2.914: 2%|▏ | 11/625 [00:02<02:06, 4.86it/s]

reward: -6.2894, last reward: -8.0115, gradient norm: 52.06: 2%|▏ | 11/625 [00:02<02:06, 4.86it/s]

reward: -6.2894, last reward: -8.0115, gradient norm: 52.06: 2%|▏ | 12/625 [00:02<02:05, 4.88it/s]

reward: -6.0977, last reward: -6.1845, gradient norm: 18.09: 2%|▏ | 12/625 [00:02<02:05, 4.88it/s]

reward: -6.0977, last reward: -6.1845, gradient norm: 18.09: 2%|▏ | 13/625 [00:02<02:05, 4.88it/s]

reward: -6.1830, last reward: -7.4858, gradient norm: 5.233: 2%|▏ | 13/625 [00:02<02:05, 4.88it/s]

reward: -6.1830, last reward: -7.4858, gradient norm: 5.233: 2%|▏ | 14/625 [00:02<02:05, 4.88it/s]

reward: -6.2863, last reward: -5.0297, gradient norm: 1.464: 2%|▏ | 14/625 [00:03<02:05, 4.88it/s]

reward: -6.2863, last reward: -5.0297, gradient norm: 1.464: 2%|▏ | 15/625 [00:03<02:04, 4.88it/s]

reward: -6.4617, last reward: -5.5997, gradient norm: 2.903: 2%|▏ | 15/625 [00:03<02:04, 4.88it/s]

reward: -6.4617, last reward: -5.5997, gradient norm: 2.903: 3%|▎ | 16/625 [00:03<02:04, 4.89it/s]

reward: -6.1647, last reward: -6.0777, gradient norm: 4.918: 3%|▎ | 16/625 [00:03<02:04, 4.89it/s]

reward: -6.1647, last reward: -6.0777, gradient norm: 4.918: 3%|▎ | 17/625 [00:03<02:04, 4.89it/s]

reward: -6.4709, last reward: -6.6813, gradient norm: 0.8319: 3%|▎ | 17/625 [00:03<02:04, 4.89it/s]

reward: -6.4709, last reward: -6.6813, gradient norm: 0.8319: 3%|▎ | 18/625 [00:03<02:03, 4.90it/s]

reward: -6.3221, last reward: -6.5577, gradient norm: 0.8415: 3%|▎ | 18/625 [00:04<02:03, 4.90it/s]

reward: -6.3221, last reward: -6.5577, gradient norm: 0.8415: 3%|▎ | 19/625 [00:04<02:03, 4.90it/s]

reward: -6.3229, last reward: -8.3322, gradient norm: 27.31: 3%|▎ | 19/625 [00:04<02:03, 4.90it/s]

reward: -6.3229, last reward: -8.3322, gradient norm: 27.31: 3%|▎ | 20/625 [00:04<02:03, 4.89it/s]

reward: -6.0258, last reward: -8.0581, gradient norm: 12.32: 3%|▎ | 20/625 [00:04<02:03, 4.89it/s]

reward: -6.0258, last reward: -8.0581, gradient norm: 12.32: 3%|▎ | 21/625 [00:04<02:03, 4.90it/s]

reward: -5.7295, last reward: -6.7230, gradient norm: 24.23: 3%|▎ | 21/625 [00:04<02:03, 4.90it/s]

reward: -5.7295, last reward: -6.7230, gradient norm: 24.23: 4%|▎ | 22/625 [00:04<02:03, 4.90it/s]

reward: -6.0265, last reward: -6.6077, gradient norm: 52.82: 4%|▎ | 22/625 [00:04<02:03, 4.90it/s]

reward: -6.0265, last reward: -6.6077, gradient norm: 52.82: 4%|▎ | 23/625 [00:04<02:02, 4.90it/s]

reward: -6.1081, last reward: -6.1347, gradient norm: 31.16: 4%|▎ | 23/625 [00:05<02:02, 4.90it/s]

reward: -6.1081, last reward: -6.1347, gradient norm: 31.16: 4%|▍ | 24/625 [00:05<02:02, 4.90it/s]

reward: -5.5231, last reward: -4.8435, gradient norm: 11.51: 4%|▍ | 24/625 [00:05<02:02, 4.90it/s]

reward: -5.5231, last reward: -4.8435, gradient norm: 11.51: 4%|▍ | 25/625 [00:05<02:02, 4.90it/s]

reward: -5.5310, last reward: -6.5397, gradient norm: 13.18: 4%|▍ | 25/625 [00:05<02:02, 4.90it/s]

reward: -5.5310, last reward: -6.5397, gradient norm: 13.18: 4%|▍ | 26/625 [00:05<02:02, 4.90it/s]

reward: -5.6382, last reward: -4.8204, gradient norm: 10.72: 4%|▍ | 26/625 [00:05<02:02, 4.90it/s]

reward: -5.6382, last reward: -4.8204, gradient norm: 10.72: 4%|▍ | 27/625 [00:05<02:02, 4.90it/s]

reward: -5.8162, last reward: -5.1618, gradient norm: 10.44: 4%|▍ | 27/625 [00:05<02:02, 4.90it/s]

reward: -5.8162, last reward: -5.1618, gradient norm: 10.44: 4%|▍ | 28/625 [00:05<02:01, 4.90it/s]

reward: -6.1180, last reward: -5.4640, gradient norm: 7.744: 4%|▍ | 28/625 [00:06<02:01, 4.90it/s]

reward: -6.1180, last reward: -5.4640, gradient norm: 7.744: 5%|▍ | 29/625 [00:06<02:01, 4.90it/s]

reward: -5.8759, last reward: -5.7826, gradient norm: 1.796: 5%|▍ | 29/625 [00:06<02:01, 4.90it/s]

reward: -5.8759, last reward: -5.7826, gradient norm: 1.796: 5%|▍ | 30/625 [00:06<02:01, 4.90it/s]

reward: -5.8296, last reward: -6.4808, gradient norm: 2.25: 5%|▍ | 30/625 [00:06<02:01, 4.90it/s]

reward: -5.8296, last reward: -6.4808, gradient norm: 2.25: 5%|▍ | 31/625 [00:06<02:01, 4.90it/s]

reward: -5.7578, last reward: -7.5124, gradient norm: 30.52: 5%|▍ | 31/625 [00:06<02:01, 4.90it/s]

reward: -5.7578, last reward: -7.5124, gradient norm: 30.52: 5%|▌ | 32/625 [00:06<02:01, 4.90it/s]

reward: -5.9313, last reward: -7.5212, gradient norm: 7.652: 5%|▌ | 32/625 [00:06<02:01, 4.90it/s]

reward: -5.9313, last reward: -7.5212, gradient norm: 7.652: 5%|▌ | 33/625 [00:06<02:00, 4.90it/s]

reward: -6.0223, last reward: -6.6343, gradient norm: 4.224: 5%|▌ | 33/625 [00:07<02:00, 4.90it/s]

reward: -6.0223, last reward: -6.6343, gradient norm: 4.224: 5%|▌ | 34/625 [00:07<02:23, 4.11it/s]

reward: -6.2886, last reward: -5.1441, gradient norm: 3.539: 5%|▌ | 34/625 [00:07<02:23, 4.11it/s]

reward: -6.2886, last reward: -5.1441, gradient norm: 3.539: 6%|▌ | 35/625 [00:07<02:16, 4.31it/s]

reward: -6.1060, last reward: -7.1638, gradient norm: 2.407: 6%|▌ | 35/625 [00:07<02:16, 4.31it/s]

reward: -6.1060, last reward: -7.1638, gradient norm: 2.407: 6%|▌ | 36/625 [00:07<02:11, 4.46it/s]

reward: -6.2230, last reward: -5.2917, gradient norm: 5.425: 6%|▌ | 36/625 [00:07<02:11, 4.46it/s]

reward: -6.2230, last reward: -5.2917, gradient norm: 5.425: 6%|▌ | 37/625 [00:07<02:08, 4.58it/s]

reward: -6.2950, last reward: -6.2126, gradient norm: 6.035: 6%|▌ | 37/625 [00:08<02:08, 4.58it/s]

reward: -6.2950, last reward: -6.2126, gradient norm: 6.035: 6%|▌ | 38/625 [00:08<02:05, 4.67it/s]

reward: -5.9786, last reward: -5.8757, gradient norm: 2.098: 6%|▌ | 38/625 [00:08<02:05, 4.67it/s]

reward: -5.9786, last reward: -5.8757, gradient norm: 2.098: 6%|▌ | 39/625 [00:08<02:03, 4.73it/s]

reward: -6.0730, last reward: -5.1952, gradient norm: 3.982: 6%|▌ | 39/625 [00:08<02:03, 4.73it/s]

reward: -6.0730, last reward: -5.1952, gradient norm: 3.982: 6%|▋ | 40/625 [00:08<02:02, 4.76it/s]

reward: -5.9481, last reward: -5.7122, gradient norm: 4.42: 6%|▋ | 40/625 [00:08<02:02, 4.76it/s]

reward: -5.9481, last reward: -5.7122, gradient norm: 4.42: 7%|▋ | 41/625 [00:08<02:01, 4.80it/s]

reward: -6.0875, last reward: -6.7567, gradient norm: 7.728: 7%|▋ | 41/625 [00:08<02:01, 4.80it/s]

reward: -6.0875, last reward: -6.7567, gradient norm: 7.728: 7%|▋ | 42/625 [00:08<02:00, 4.83it/s]

reward: -5.6301, last reward: -6.2249, gradient norm: 9.824: 7%|▋ | 42/625 [00:09<02:00, 4.83it/s]

reward: -5.6301, last reward: -6.2249, gradient norm: 9.824: 7%|▋ | 43/625 [00:09<02:00, 4.85it/s]

reward: -5.5281, last reward: -5.7749, gradient norm: 7.223: 7%|▋ | 43/625 [00:09<02:00, 4.85it/s]

reward: -5.5281, last reward: -5.7749, gradient norm: 7.223: 7%|▋ | 44/625 [00:09<01:59, 4.85it/s]

reward: -5.5904, last reward: -5.0048, gradient norm: 11.73: 7%|▋ | 44/625 [00:09<01:59, 4.85it/s]

reward: -5.5904, last reward: -5.0048, gradient norm: 11.73: 7%|▋ | 45/625 [00:09<01:59, 4.85it/s]

reward: -5.7882, last reward: -4.8660, gradient norm: 2.094: 7%|▋ | 45/625 [00:09<01:59, 4.85it/s]

reward: -5.7882, last reward: -4.8660, gradient norm: 2.094: 7%|▋ | 46/625 [00:09<01:59, 4.85it/s]

reward: -5.8592, last reward: -4.4848, gradient norm: 30.4: 7%|▋ | 46/625 [00:09<01:59, 4.85it/s]

reward: -5.8592, last reward: -4.4848, gradient norm: 30.4: 8%|▊ | 47/625 [00:09<01:58, 4.87it/s]

reward: -5.3849, last reward: -3.5828, gradient norm: 2.244: 8%|▊ | 47/625 [00:10<01:58, 4.87it/s]

reward: -5.3849, last reward: -3.5828, gradient norm: 2.244: 8%|▊ | 48/625 [00:10<01:58, 4.87it/s]

reward: -5.5785, last reward: -2.4216, gradient norm: 0.8946: 8%|▊ | 48/625 [00:10<01:58, 4.87it/s]

reward: -5.5785, last reward: -2.4216, gradient norm: 0.8946: 8%|▊ | 49/625 [00:10<01:58, 4.87it/s]

reward: -5.4433, last reward: -3.4306, gradient norm: 16.48: 8%|▊ | 49/625 [00:10<01:58, 4.87it/s]

reward: -5.4433, last reward: -3.4306, gradient norm: 16.48: 8%|▊ | 50/625 [00:10<01:58, 4.86it/s]

reward: -5.5546, last reward: -5.3443, gradient norm: 8.319: 8%|▊ | 50/625 [00:10<01:58, 4.86it/s]

reward: -5.5546, last reward: -5.3443, gradient norm: 8.319: 8%|▊ | 51/625 [00:10<01:57, 4.87it/s]

reward: -5.5681, last reward: -7.5266, gradient norm: 5.593: 8%|▊ | 51/625 [00:10<01:57, 4.87it/s]

reward: -5.5681, last reward: -7.5266, gradient norm: 5.593: 8%|▊ | 52/625 [00:10<01:58, 4.86it/s]

reward: -5.6418, last reward: -8.1904, gradient norm: 12.34: 8%|▊ | 52/625 [00:11<01:58, 4.86it/s]

reward: -5.6418, last reward: -8.1904, gradient norm: 12.34: 8%|▊ | 53/625 [00:11<01:57, 4.87it/s]

reward: -5.6517, last reward: -8.3856, gradient norm: 4.565: 8%|▊ | 53/625 [00:11<01:57, 4.87it/s]

reward: -5.6517, last reward: -8.3856, gradient norm: 4.565: 9%|▊ | 54/625 [00:11<01:57, 4.87it/s]

reward: -5.9653, last reward: -8.4339, gradient norm: 12.73: 9%|▊ | 54/625 [00:11<01:57, 4.87it/s]

reward: -5.9653, last reward: -8.4339, gradient norm: 12.73: 9%|▉ | 55/625 [00:11<01:57, 4.86it/s]

reward: -6.0832, last reward: -8.9027, gradient norm: 6.07: 9%|▉ | 55/625 [00:11<01:57, 4.86it/s]

reward: -6.0832, last reward: -8.9027, gradient norm: 6.07: 9%|▉ | 56/625 [00:11<01:57, 4.86it/s]

reward: -6.2454, last reward: -8.9134, gradient norm: 9.312: 9%|▉ | 56/625 [00:11<01:57, 4.86it/s]

reward: -6.2454, last reward: -8.9134, gradient norm: 9.312: 9%|▉ | 57/625 [00:11<01:56, 4.86it/s]

reward: -6.1343, last reward: -9.4171, gradient norm: 16.74: 9%|▉ | 57/625 [00:12<01:56, 4.86it/s]

reward: -6.1343, last reward: -9.4171, gradient norm: 16.74: 9%|▉ | 58/625 [00:12<01:56, 4.86it/s]

reward: -5.7796, last reward: -11.1745, gradient norm: 20.83: 9%|▉ | 58/625 [00:12<01:56, 4.86it/s]

reward: -5.7796, last reward: -11.1745, gradient norm: 20.83: 9%|▉ | 59/625 [00:12<01:56, 4.85it/s]

reward: -5.4783, last reward: -6.2441, gradient norm: 8.777: 9%|▉ | 59/625 [00:12<01:56, 4.85it/s]

reward: -5.4783, last reward: -6.2441, gradient norm: 8.777: 10%|▉ | 60/625 [00:12<01:56, 4.85it/s]

reward: -5.5816, last reward: -4.1932, gradient norm: 6.328: 10%|▉ | 60/625 [00:12<01:56, 4.85it/s]

reward: -5.5816, last reward: -4.1932, gradient norm: 6.328: 10%|▉ | 61/625 [00:12<01:56, 4.85it/s]

reward: -5.6604, last reward: -4.1629, gradient norm: 3.516: 10%|▉ | 61/625 [00:12<01:56, 4.85it/s]

reward: -5.6604, last reward: -4.1629, gradient norm: 3.516: 10%|▉ | 62/625 [00:12<01:55, 4.86it/s]

reward: -5.4195, last reward: -5.1296, gradient norm: 8.378: 10%|▉ | 62/625 [00:13<01:55, 4.86it/s]

reward: -5.4195, last reward: -5.1296, gradient norm: 8.378: 10%|█ | 63/625 [00:13<01:55, 4.87it/s]

reward: -5.5165, last reward: -3.0986, gradient norm: 17.72: 10%|█ | 63/625 [00:13<01:55, 4.87it/s]

reward: -5.5165, last reward: -3.0986, gradient norm: 17.72: 10%|█ | 64/625 [00:13<01:54, 4.88it/s]

reward: -5.5596, last reward: -4.2442, gradient norm: 11.38: 10%|█ | 64/625 [00:13<01:54, 4.88it/s]

reward: -5.5596, last reward: -4.2442, gradient norm: 11.38: 10%|█ | 65/625 [00:13<01:54, 4.89it/s]

reward: -5.9834, last reward: -6.0432, gradient norm: 8.038: 10%|█ | 65/625 [00:13<01:54, 4.89it/s]

reward: -5.9834, last reward: -6.0432, gradient norm: 8.038: 11%|█ | 66/625 [00:13<01:54, 4.89it/s]

reward: -5.7958, last reward: -5.1525, gradient norm: 8.564: 11%|█ | 66/625 [00:13<01:54, 4.89it/s]

reward: -5.7958, last reward: -5.1525, gradient norm: 8.564: 11%|█ | 67/625 [00:13<01:54, 4.89it/s]

reward: -5.8544, last reward: -5.2747, gradient norm: 7.632: 11%|█ | 67/625 [00:14<01:54, 4.89it/s]

reward: -5.8544, last reward: -5.2747, gradient norm: 7.632: 11%|█ | 68/625 [00:14<01:54, 4.88it/s]

reward: -5.3922, last reward: -4.5267, gradient norm: 18.13: 11%|█ | 68/625 [00:14<01:54, 4.88it/s]

reward: -5.3922, last reward: -4.5267, gradient norm: 18.13: 11%|█ | 69/625 [00:14<01:53, 4.88it/s]

reward: -5.0917, last reward: -3.3025, gradient norm: 2.33: 11%|█ | 69/625 [00:14<01:53, 4.88it/s]

reward: -5.0917, last reward: -3.3025, gradient norm: 2.33: 11%|█ | 70/625 [00:14<01:53, 4.88it/s]

reward: -5.0968, last reward: -6.1214, gradient norm: 11.27: 11%|█ | 70/625 [00:14<01:53, 4.88it/s]

reward: -5.0968, last reward: -6.1214, gradient norm: 11.27: 11%|█▏ | 71/625 [00:14<01:53, 4.88it/s]

reward: -5.2523, last reward: -4.0580, gradient norm: 22.2: 11%|█▏ | 71/625 [00:15<01:53, 4.88it/s]

reward: -5.2523, last reward: -4.0580, gradient norm: 22.2: 12%|█▏ | 72/625 [00:15<01:53, 4.89it/s]

reward: -5.4829, last reward: -6.6886, gradient norm: 12.37: 12%|█▏ | 72/625 [00:15<01:53, 4.89it/s]

reward: -5.4829, last reward: -6.6886, gradient norm: 12.37: 12%|█▏ | 73/625 [00:15<01:52, 4.89it/s]

reward: -5.7293, last reward: -9.4615, gradient norm: 15.07: 12%|█▏ | 73/625 [00:15<01:52, 4.89it/s]

reward: -5.7293, last reward: -9.4615, gradient norm: 15.07: 12%|█▏ | 74/625 [00:15<01:52, 4.89it/s]

reward: -5.7735, last reward: -9.0859, gradient norm: 892.4: 12%|█▏ | 74/625 [00:15<01:52, 4.89it/s]

reward: -5.7735, last reward: -9.0859, gradient norm: 892.4: 12%|█▏ | 75/625 [00:15<01:52, 4.88it/s]

reward: -6.1616, last reward: -9.2996, gradient norm: 9.569: 12%|█▏ | 75/625 [00:15<01:52, 4.88it/s]

reward: -6.1616, last reward: -9.2996, gradient norm: 9.569: 12%|█▏ | 76/625 [00:15<01:52, 4.89it/s]

reward: -6.2202, last reward: -9.3199, gradient norm: 8.919: 12%|█▏ | 76/625 [00:16<01:52, 4.89it/s]

reward: -6.2202, last reward: -9.3199, gradient norm: 8.919: 12%|█▏ | 77/625 [00:16<01:52, 4.89it/s]

reward: -6.1349, last reward: -9.9361, gradient norm: 10.06: 12%|█▏ | 77/625 [00:16<01:52, 4.89it/s]

reward: -6.1349, last reward: -9.9361, gradient norm: 10.06: 12%|█▏ | 78/625 [00:16<01:51, 4.89it/s]

reward: -6.0374, last reward: -10.4791, gradient norm: 45.37: 12%|█▏ | 78/625 [00:16<01:51, 4.89it/s]

reward: -6.0374, last reward: -10.4791, gradient norm: 45.37: 13%|█▎ | 79/625 [00:16<01:51, 4.89it/s]

reward: -5.6990, last reward: -9.0426, gradient norm: 32.93: 13%|█▎ | 79/625 [00:16<01:51, 4.89it/s]

reward: -5.6990, last reward: -9.0426, gradient norm: 32.93: 13%|█▎ | 80/625 [00:16<01:51, 4.88it/s]

reward: -5.3303, last reward: -4.9148, gradient norm: 307.4: 13%|█▎ | 80/625 [00:16<01:51, 4.88it/s]

reward: -5.3303, last reward: -4.9148, gradient norm: 307.4: 13%|█▎ | 81/625 [00:16<01:51, 4.88it/s]

reward: -5.2291, last reward: -3.3632, gradient norm: 2.828: 13%|█▎ | 81/625 [00:17<01:51, 4.88it/s]

reward: -5.2291, last reward: -3.3632, gradient norm: 2.828: 13%|█▎ | 82/625 [00:17<01:51, 4.88it/s]

reward: -5.0228, last reward: -3.1018, gradient norm: 32.56: 13%|█▎ | 82/625 [00:17<01:51, 4.88it/s]

reward: -5.0228, last reward: -3.1018, gradient norm: 32.56: 13%|█▎ | 83/625 [00:17<01:50, 4.89it/s]

reward: -5.0364, last reward: -3.8503, gradient norm: 8.948: 13%|█▎ | 83/625 [00:17<01:50, 4.89it/s]

reward: -5.0364, last reward: -3.8503, gradient norm: 8.948: 13%|█▎ | 84/625 [00:17<01:51, 4.87it/s]

reward: -4.9341, last reward: -6.9319, gradient norm: 119.2: 13%|█▎ | 84/625 [00:17<01:51, 4.87it/s]

reward: -4.9341, last reward: -6.9319, gradient norm: 119.2: 14%|█▎ | 85/625 [00:17<01:51, 4.86it/s]

reward: -5.0693, last reward: -6.4436, gradient norm: 5.28: 14%|█▎ | 85/625 [00:17<01:51, 4.86it/s]

reward: -5.0693, last reward: -6.4436, gradient norm: 5.28: 14%|█▍ | 86/625 [00:17<01:50, 4.86it/s]

reward: -4.9258, last reward: -6.0461, gradient norm: 4.376: 14%|█▍ | 86/625 [00:18<01:50, 4.86it/s]

reward: -4.9258, last reward: -6.0461, gradient norm: 4.376: 14%|█▍ | 87/625 [00:18<01:50, 4.87it/s]

reward: -4.9910, last reward: -4.5681, gradient norm: 25.14: 14%|█▍ | 87/625 [00:18<01:50, 4.87it/s]

reward: -4.9910, last reward: -4.5681, gradient norm: 25.14: 14%|█▍ | 88/625 [00:18<01:50, 4.87it/s]

reward: -5.1716, last reward: -5.3157, gradient norm: 15.5: 14%|█▍ | 88/625 [00:18<01:50, 4.87it/s]

reward: -5.1716, last reward: -5.3157, gradient norm: 15.5: 14%|█▍ | 89/625 [00:18<01:49, 4.88it/s]

reward: -4.9816, last reward: -3.5950, gradient norm: 7.403: 14%|█▍ | 89/625 [00:18<01:49, 4.88it/s]

reward: -4.9816, last reward: -3.5950, gradient norm: 7.403: 14%|█▍ | 90/625 [00:18<01:49, 4.88it/s]

reward: -4.7252, last reward: -4.8815, gradient norm: 10.07: 14%|█▍ | 90/625 [00:18<01:49, 4.88it/s]

reward: -4.7252, last reward: -4.8815, gradient norm: 10.07: 15%|█▍ | 91/625 [00:18<01:49, 4.88it/s]

reward: -4.9986, last reward: -5.8680, gradient norm: 14.26: 15%|█▍ | 91/625 [00:19<01:49, 4.88it/s]

reward: -4.9986, last reward: -5.8680, gradient norm: 14.26: 15%|█▍ | 92/625 [00:19<01:49, 4.88it/s]

reward: -4.9029, last reward: -5.7132, gradient norm: 21.65: 15%|█▍ | 92/625 [00:19<01:49, 4.88it/s]

reward: -4.9029, last reward: -5.7132, gradient norm: 21.65: 15%|█▍ | 93/625 [00:19<01:49, 4.88it/s]

reward: -4.7814, last reward: -6.5231, gradient norm: 27.4: 15%|█▍ | 93/625 [00:19<01:49, 4.88it/s]

reward: -4.7814, last reward: -6.5231, gradient norm: 27.4: 15%|█▌ | 94/625 [00:19<01:49, 4.87it/s]

reward: -4.7013, last reward: -6.0821, gradient norm: 22.53: 15%|█▌ | 94/625 [00:19<01:49, 4.87it/s]

reward: -4.7013, last reward: -6.0821, gradient norm: 22.53: 15%|█▌ | 95/625 [00:19<01:49, 4.86it/s]

reward: -4.3526, last reward: -5.3718, gradient norm: 28.77: 15%|█▌ | 95/625 [00:19<01:49, 4.86it/s]

reward: -4.3526, last reward: -5.3718, gradient norm: 28.77: 15%|█▌ | 96/625 [00:19<01:49, 4.85it/s]

reward: -5.0901, last reward: -5.0493, gradient norm: 8.428: 15%|█▌ | 96/625 [00:20<01:49, 4.85it/s]

reward: -5.0901, last reward: -5.0493, gradient norm: 8.428: 16%|█▌ | 97/625 [00:20<01:49, 4.84it/s]

reward: -4.9341, last reward: -4.0375, gradient norm: 17.1: 16%|█▌ | 97/625 [00:20<01:49, 4.84it/s]

reward: -4.9341, last reward: -4.0375, gradient norm: 17.1: 16%|█▌ | 98/625 [00:20<01:48, 4.84it/s]

reward: -5.0707, last reward: -5.9903, gradient norm: 12.01: 16%|█▌ | 98/625 [00:20<01:48, 4.84it/s]

reward: -5.0707, last reward: -5.9903, gradient norm: 12.01: 16%|█▌ | 99/625 [00:20<01:48, 4.84it/s]

reward: -4.8171, last reward: -4.1591, gradient norm: 47.69: 16%|█▌ | 99/625 [00:20<01:48, 4.84it/s]

reward: -4.8171, last reward: -4.1591, gradient norm: 47.69: 16%|█▌ | 100/625 [00:20<01:48, 4.84it/s]

reward: -4.8621, last reward: -4.1783, gradient norm: 9.28: 16%|█▌ | 100/625 [00:20<01:48, 4.84it/s]

reward: -4.8621, last reward: -4.1783, gradient norm: 9.28: 16%|█▌ | 101/625 [00:20<01:48, 4.84it/s]

reward: -4.4683, last reward: -2.4896, gradient norm: 10.58: 16%|█▌ | 101/625 [00:21<01:48, 4.84it/s]

reward: -4.4683, last reward: -2.4896, gradient norm: 10.58: 16%|█▋ | 102/625 [00:21<01:47, 4.84it/s]

reward: -4.5413, last reward: -5.7029, gradient norm: 8.056: 16%|█▋ | 102/625 [00:21<01:47, 4.84it/s]

reward: -4.5413, last reward: -5.7029, gradient norm: 8.056: 16%|█▋ | 103/625 [00:21<01:47, 4.85it/s]

reward: -4.6580, last reward: -8.4799, gradient norm: 34.32: 16%|█▋ | 103/625 [00:21<01:47, 4.85it/s]

reward: -4.6580, last reward: -8.4799, gradient norm: 34.32: 17%|█▋ | 104/625 [00:21<01:47, 4.85it/s]

reward: -4.6693, last reward: -7.4469, gradient norm: 81.33: 17%|█▋ | 104/625 [00:21<01:47, 4.85it/s]

reward: -4.6693, last reward: -7.4469, gradient norm: 81.33: 17%|█▋ | 105/625 [00:21<01:47, 4.85it/s]

reward: -4.7061, last reward: -3.6757, gradient norm: 13.94: 17%|█▋ | 105/625 [00:21<01:47, 4.85it/s]

reward: -4.7061, last reward: -3.6757, gradient norm: 13.94: 17%|█▋ | 106/625 [00:21<01:46, 4.86it/s]

reward: -4.4342, last reward: -3.6883, gradient norm: 26.25: 17%|█▋ | 106/625 [00:22<01:46, 4.86it/s]

reward: -4.4342, last reward: -3.6883, gradient norm: 26.25: 17%|█▋ | 107/625 [00:22<01:46, 4.86it/s]

reward: -4.3992, last reward: -2.4497, gradient norm: 15.67: 17%|█▋ | 107/625 [00:22<01:46, 4.86it/s]

reward: -4.3992, last reward: -2.4497, gradient norm: 15.67: 17%|█▋ | 108/625 [00:22<01:46, 4.87it/s]

reward: -4.3980, last reward: -4.0425, gradient norm: 13.06: 17%|█▋ | 108/625 [00:22<01:46, 4.87it/s]

reward: -4.3980, last reward: -4.0425, gradient norm: 13.06: 17%|█▋ | 109/625 [00:22<01:45, 4.87it/s]

reward: -5.2514, last reward: -4.0430, gradient norm: 8.778: 17%|█▋ | 109/625 [00:22<01:45, 4.87it/s]

reward: -5.2514, last reward: -4.0430, gradient norm: 8.778: 18%|█▊ | 110/625 [00:22<01:45, 4.87it/s]

reward: -5.2656, last reward: -5.0365, gradient norm: 8.68: 18%|█▊ | 110/625 [00:23<01:45, 4.87it/s]

reward: -5.2656, last reward: -5.0365, gradient norm: 8.68: 18%|█▊ | 111/625 [00:23<01:45, 4.86it/s]

reward: -5.2567, last reward: -5.9920, gradient norm: 11.66: 18%|█▊ | 111/625 [00:23<01:45, 4.86it/s]

reward: -5.2567, last reward: -5.9920, gradient norm: 11.66: 18%|█▊ | 112/625 [00:23<01:45, 4.87it/s]

reward: -5.0847, last reward: -5.2160, gradient norm: 12.61: 18%|█▊ | 112/625 [00:23<01:45, 4.87it/s]

reward: -5.0847, last reward: -5.2160, gradient norm: 12.61: 18%|█▊ | 113/625 [00:23<01:44, 4.88it/s]

reward: -4.8941, last reward: -5.0903, gradient norm: 14.7: 18%|█▊ | 113/625 [00:23<01:44, 4.88it/s]

reward: -4.8941, last reward: -5.0903, gradient norm: 14.7: 18%|█▊ | 114/625 [00:23<01:44, 4.88it/s]

reward: -4.5529, last reward: -3.4350, gradient norm: 24.5: 18%|█▊ | 114/625 [00:23<01:44, 4.88it/s]

reward: -4.5529, last reward: -3.4350, gradient norm: 24.5: 18%|█▊ | 115/625 [00:23<01:45, 4.85it/s]

reward: -4.4047, last reward: -3.9059, gradient norm: 11.8: 18%|█▊ | 115/625 [00:24<01:45, 4.85it/s]

reward: -4.4047, last reward: -3.9059, gradient norm: 11.8: 19%|█▊ | 116/625 [00:24<01:44, 4.85it/s]

reward: -4.7905, last reward: -4.2659, gradient norm: 14.6: 19%|█▊ | 116/625 [00:24<01:44, 4.85it/s]

reward: -4.7905, last reward: -4.2659, gradient norm: 14.6: 19%|█▊ | 117/625 [00:24<01:44, 4.86it/s]

reward: -5.1685, last reward: -5.0558, gradient norm: 2.069: 19%|█▊ | 117/625 [00:24<01:44, 4.86it/s]

reward: -5.1685, last reward: -5.0558, gradient norm: 2.069: 19%|█▉ | 118/625 [00:24<01:44, 4.87it/s]

reward: -5.3224, last reward: -3.9649, gradient norm: 22.7: 19%|█▉ | 118/625 [00:24<01:44, 4.87it/s]

reward: -5.3224, last reward: -3.9649, gradient norm: 22.7: 19%|█▉ | 119/625 [00:24<01:43, 4.87it/s]

reward: -5.3083, last reward: -4.9055, gradient norm: 13.3: 19%|█▉ | 119/625 [00:24<01:43, 4.87it/s]

reward: -5.3083, last reward: -4.9055, gradient norm: 13.3: 19%|█▉ | 120/625 [00:24<01:43, 4.87it/s]

reward: -5.1928, last reward: -6.0475, gradient norm: 59.18: 19%|█▉ | 120/625 [00:25<01:43, 4.87it/s]

reward: -5.1928, last reward: -6.0475, gradient norm: 59.18: 19%|█▉ | 121/625 [00:25<01:43, 4.87it/s]

reward: -5.0833, last reward: -4.8086, gradient norm: 20.01: 19%|█▉ | 121/625 [00:25<01:43, 4.87it/s]

reward: -5.0833, last reward: -4.8086, gradient norm: 20.01: 20%|█▉ | 122/625 [00:25<01:43, 4.87it/s]

reward: -4.6719, last reward: -8.9463, gradient norm: 54.76: 20%|█▉ | 122/625 [00:25<01:43, 4.87it/s]

reward: -4.6719, last reward: -8.9463, gradient norm: 54.76: 20%|█▉ | 123/625 [00:25<01:43, 4.86it/s]

reward: -4.2157, last reward: -3.4610, gradient norm: 10.41: 20%|█▉ | 123/625 [00:25<01:43, 4.86it/s]

reward: -4.2157, last reward: -3.4610, gradient norm: 10.41: 20%|█▉ | 124/625 [00:25<01:43, 4.86it/s]

reward: -4.4119, last reward: -2.9298, gradient norm: 50.3: 20%|█▉ | 124/625 [00:25<01:43, 4.86it/s]

reward: -4.4119, last reward: -2.9298, gradient norm: 50.3: 20%|██ | 125/625 [00:25<01:42, 4.86it/s]

reward: -4.7378, last reward: -4.1409, gradient norm: 12.45: 20%|██ | 125/625 [00:26<01:42, 4.86it/s]

reward: -4.7378, last reward: -4.1409, gradient norm: 12.45: 20%|██ | 126/625 [00:26<01:42, 4.86it/s]

reward: -4.0920, last reward: -4.0036, gradient norm: 17.08: 20%|██ | 126/625 [00:26<01:42, 4.86it/s]

reward: -4.0920, last reward: -4.0036, gradient norm: 17.08: 20%|██ | 127/625 [00:26<01:42, 4.86it/s]

reward: -4.4453, last reward: -2.8994, gradient norm: 26.63: 20%|██ | 127/625 [00:26<01:42, 4.86it/s]

reward: -4.4453, last reward: -2.8994, gradient norm: 26.63: 20%|██ | 128/625 [00:26<01:42, 4.85it/s]

reward: -4.2940, last reward: -4.9240, gradient norm: 113.7: 20%|██ | 128/625 [00:26<01:42, 4.85it/s]

reward: -4.2940, last reward: -4.9240, gradient norm: 113.7: 21%|██ | 129/625 [00:26<01:42, 4.85it/s]

reward: -4.4657, last reward: -5.8249, gradient norm: 15.75: 21%|██ | 129/625 [00:26<01:42, 4.85it/s]

reward: -4.4657, last reward: -5.8249, gradient norm: 15.75: 21%|██ | 130/625 [00:26<01:42, 4.85it/s]

reward: -4.6821, last reward: -6.2320, gradient norm: 24.59: 21%|██ | 130/625 [00:27<01:42, 4.85it/s]

reward: -4.6821, last reward: -6.2320, gradient norm: 24.59: 21%|██ | 131/625 [00:27<01:41, 4.85it/s]

reward: -4.7717, last reward: -7.0348, gradient norm: 21.43: 21%|██ | 131/625 [00:27<01:41, 4.85it/s]

reward: -4.7717, last reward: -7.0348, gradient norm: 21.43: 21%|██ | 132/625 [00:27<01:41, 4.85it/s]

reward: -4.5923, last reward: -9.1746, gradient norm: 38.4: 21%|██ | 132/625 [00:27<01:41, 4.85it/s]

reward: -4.5923, last reward: -9.1746, gradient norm: 38.4: 21%|██▏ | 133/625 [00:27<01:41, 4.86it/s]

reward: -4.2964, last reward: -4.3941, gradient norm: 7.475: 21%|██▏ | 133/625 [00:27<01:41, 4.86it/s]

reward: -4.2964, last reward: -4.3941, gradient norm: 7.475: 21%|██▏ | 134/625 [00:27<01:41, 4.85it/s]

reward: -4.2730, last reward: -3.0781, gradient norm: 22.33: 21%|██▏ | 134/625 [00:27<01:41, 4.85it/s]

reward: -4.2730, last reward: -3.0781, gradient norm: 22.33: 22%|██▏ | 135/625 [00:27<01:41, 4.85it/s]

reward: -4.2718, last reward: -3.1451, gradient norm: 8.063: 22%|██▏ | 135/625 [00:28<01:41, 4.85it/s]

reward: -4.2718, last reward: -3.1451, gradient norm: 8.063: 22%|██▏ | 136/625 [00:28<01:40, 4.86it/s]

reward: -4.3199, last reward: -5.0931, gradient norm: 131.1: 22%|██▏ | 136/625 [00:28<01:40, 4.86it/s]

reward: -4.3199, last reward: -5.0931, gradient norm: 131.1: 22%|██▏ | 137/625 [00:28<01:59, 4.09it/s]

reward: -4.4474, last reward: -5.2053, gradient norm: 22.13: 22%|██▏ | 137/625 [00:28<01:59, 4.09it/s]

reward: -4.4474, last reward: -5.2053, gradient norm: 22.13: 22%|██▏ | 138/625 [00:28<01:53, 4.29it/s]

reward: -4.9233, last reward: -3.8841, gradient norm: 6.794: 22%|██▏ | 138/625 [00:28<01:53, 4.29it/s]

reward: -4.9233, last reward: -3.8841, gradient norm: 6.794: 22%|██▏ | 139/625 [00:28<01:49, 4.45it/s]

reward: -4.7412, last reward: -4.6784, gradient norm: 15.88: 22%|██▏ | 139/625 [00:29<01:49, 4.45it/s]

reward: -4.7412, last reward: -4.6784, gradient norm: 15.88: 22%|██▏ | 140/625 [00:29<01:46, 4.57it/s]

reward: -4.4236, last reward: -3.8232, gradient norm: 95.06: 22%|██▏ | 140/625 [00:29<01:46, 4.57it/s]

reward: -4.4236, last reward: -3.8232, gradient norm: 95.06: 23%|██▎ | 141/625 [00:29<01:43, 4.66it/s]

reward: -4.2859, last reward: -5.9936, gradient norm: 19.62: 23%|██▎ | 141/625 [00:29<01:43, 4.66it/s]

reward: -4.2859, last reward: -5.9936, gradient norm: 19.62: 23%|██▎ | 142/625 [00:29<01:42, 4.71it/s]

reward: -4.4756, last reward: -3.0061, gradient norm: 58.42: 23%|██▎ | 142/625 [00:29<01:42, 4.71it/s]

reward: -4.4756, last reward: -3.0061, gradient norm: 58.42: 23%|██▎ | 143/625 [00:29<01:41, 4.76it/s]

reward: -4.6419, last reward: -2.8358, gradient norm: 21.94: 23%|██▎ | 143/625 [00:29<01:41, 4.76it/s]

reward: -4.6419, last reward: -2.8358, gradient norm: 21.94: 23%|██▎ | 144/625 [00:29<01:40, 4.80it/s]

reward: -4.5489, last reward: -4.8108, gradient norm: 26.27: 23%|██▎ | 144/625 [00:30<01:40, 4.80it/s]

reward: -4.5489, last reward: -4.8108, gradient norm: 26.27: 23%|██▎ | 145/625 [00:30<01:39, 4.82it/s]

reward: -4.4234, last reward: -6.1971, gradient norm: 24.6: 23%|██▎ | 145/625 [00:30<01:39, 4.82it/s]

reward: -4.4234, last reward: -6.1971, gradient norm: 24.6: 23%|██▎ | 146/625 [00:30<01:39, 4.83it/s]

reward: -4.6739, last reward: -4.1551, gradient norm: 8.242: 23%|██▎ | 146/625 [00:30<01:39, 4.83it/s]

reward: -4.6739, last reward: -4.1551, gradient norm: 8.242: 24%|██▎ | 147/625 [00:30<01:38, 4.83it/s]

reward: -4.4584, last reward: -5.1256, gradient norm: 4.714: 24%|██▎ | 147/625 [00:30<01:38, 4.83it/s]

reward: -4.4584, last reward: -5.1256, gradient norm: 4.714: 24%|██▎ | 148/625 [00:30<01:38, 4.85it/s]

reward: -4.3930, last reward: -3.8382, gradient norm: 2.931: 24%|██▎ | 148/625 [00:30<01:38, 4.85it/s]

reward: -4.3930, last reward: -3.8382, gradient norm: 2.931: 24%|██▍ | 149/625 [00:30<01:38, 4.86it/s]

reward: -4.8215, last reward: -3.7751, gradient norm: 12.4: 24%|██▍ | 149/625 [00:31<01:38, 4.86it/s]

reward: -4.8215, last reward: -3.7751, gradient norm: 12.4: 24%|██▍ | 150/625 [00:31<01:37, 4.86it/s]

reward: -4.9927, last reward: -4.0620, gradient norm: 9.91: 24%|██▍ | 150/625 [00:31<01:37, 4.86it/s]

reward: -4.9927, last reward: -4.0620, gradient norm: 9.91: 24%|██▍ | 151/625 [00:31<01:37, 4.87it/s]

reward: -4.7118, last reward: -4.4055, gradient norm: 14.72: 24%|██▍ | 151/625 [00:31<01:37, 4.87it/s]

reward: -4.7118, last reward: -4.4055, gradient norm: 14.72: 24%|██▍ | 152/625 [00:31<01:37, 4.87it/s]

reward: -4.5860, last reward: -3.0642, gradient norm: 12.02: 24%|██▍ | 152/625 [00:31<01:37, 4.87it/s]

reward: -4.5860, last reward: -3.0642, gradient norm: 12.02: 24%|██▍ | 153/625 [00:31<01:36, 4.87it/s]

reward: -4.2358, last reward: -3.0014, gradient norm: 20.68: 24%|██▍ | 153/625 [00:31<01:36, 4.87it/s]

reward: -4.2358, last reward: -3.0014, gradient norm: 20.68: 25%|██▍ | 154/625 [00:31<01:36, 4.87it/s]

reward: -4.3053, last reward: -4.5390, gradient norm: 14.11: 25%|██▍ | 154/625 [00:32<01:36, 4.87it/s]

reward: -4.3053, last reward: -4.5390, gradient norm: 14.11: 25%|██▍ | 155/625 [00:32<01:36, 4.88it/s]

reward: -4.4845, last reward: -7.6566, gradient norm: 51.89: 25%|██▍ | 155/625 [00:32<01:36, 4.88it/s]

reward: -4.4845, last reward: -7.6566, gradient norm: 51.89: 25%|██▍ | 156/625 [00:32<01:36, 4.87it/s]

reward: -4.7679, last reward: -8.4566, gradient norm: 19.11: 25%|██▍ | 156/625 [00:32<01:36, 4.87it/s]

reward: -4.7679, last reward: -8.4566, gradient norm: 19.11: 25%|██▌ | 157/625 [00:32<01:36, 4.87it/s]

reward: -4.6030, last reward: -6.4867, gradient norm: 24.21: 25%|██▌ | 157/625 [00:32<01:36, 4.87it/s]

reward: -4.6030, last reward: -6.4867, gradient norm: 24.21: 25%|██▌ | 158/625 [00:32<01:36, 4.86it/s]

reward: -4.3156, last reward: -4.3057, gradient norm: 26.15: 25%|██▌ | 158/625 [00:33<01:36, 4.86it/s]

reward: -4.3156, last reward: -4.3057, gradient norm: 26.15: 25%|██▌ | 159/625 [00:33<01:35, 4.87it/s]

reward: -4.1515, last reward: -2.7400, gradient norm: 46.67: 25%|██▌ | 159/625 [00:33<01:35, 4.87it/s]

reward: -4.1515, last reward: -2.7400, gradient norm: 46.67: 26%|██▌ | 160/625 [00:33<01:35, 4.87it/s]

reward: -4.1984, last reward: -3.1343, gradient norm: 10.44: 26%|██▌ | 160/625 [00:33<01:35, 4.87it/s]

reward: -4.1984, last reward: -3.1343, gradient norm: 10.44: 26%|██▌ | 161/625 [00:33<01:35, 4.88it/s]

reward: -4.7794, last reward: -4.1895, gradient norm: 15.07: 26%|██▌ | 161/625 [00:33<01:35, 4.88it/s]

reward: -4.7794, last reward: -4.1895, gradient norm: 15.07: 26%|██▌ | 162/625 [00:33<01:34, 4.88it/s]

reward: -4.8227, last reward: -3.9495, gradient norm: 10.96: 26%|██▌ | 162/625 [00:33<01:34, 4.88it/s]

reward: -4.8227, last reward: -3.9495, gradient norm: 10.96: 26%|██▌ | 163/625 [00:33<01:34, 4.88it/s]

reward: -5.0627, last reward: -2.8677, gradient norm: 8.216: 26%|██▌ | 163/625 [00:34<01:34, 4.88it/s]

reward: -5.0627, last reward: -2.8677, gradient norm: 8.216: 26%|██▌ | 164/625 [00:34<01:34, 4.88it/s]

reward: -4.3039, last reward: -3.8106, gradient norm: 15.09: 26%|██▌ | 164/625 [00:34<01:34, 4.88it/s]

reward: -4.3039, last reward: -3.8106, gradient norm: 15.09: 26%|██▋ | 165/625 [00:34<01:34, 4.88it/s]

reward: -4.2623, last reward: -3.6619, gradient norm: 22.77: 26%|██▋ | 165/625 [00:34<01:34, 4.88it/s]

reward: -4.2623, last reward: -3.6619, gradient norm: 22.77: 27%|██▋ | 166/625 [00:34<01:34, 4.88it/s]

reward: -4.0987, last reward: -3.0736, gradient norm: 20.92: 27%|██▋ | 166/625 [00:34<01:34, 4.88it/s]

reward: -4.0987, last reward: -3.0736, gradient norm: 20.92: 27%|██▋ | 167/625 [00:34<01:33, 4.88it/s]

reward: -4.3893, last reward: -5.3442, gradient norm: 9.876: 27%|██▋ | 167/625 [00:34<01:33, 4.88it/s]

reward: -4.3893, last reward: -5.3442, gradient norm: 9.876: 27%|██▋ | 168/625 [00:34<01:33, 4.88it/s]

reward: -4.6078, last reward: -7.7466, gradient norm: 16.06: 27%|██▋ | 168/625 [00:35<01:33, 4.88it/s]

reward: -4.6078, last reward: -7.7466, gradient norm: 16.06: 27%|██▋ | 169/625 [00:35<01:33, 4.89it/s]

reward: -4.5928, last reward: -6.5101, gradient norm: 20.69: 27%|██▋ | 169/625 [00:35<01:33, 4.89it/s]

reward: -4.5928, last reward: -6.5101, gradient norm: 20.69: 27%|██▋ | 170/625 [00:35<01:32, 4.89it/s]

reward: -4.3683, last reward: -3.9307, gradient norm: 78.59: 27%|██▋ | 170/625 [00:35<01:32, 4.89it/s]

reward: -4.3683, last reward: -3.9307, gradient norm: 78.59: 27%|██▋ | 171/625 [00:35<01:32, 4.89it/s]

reward: -4.1301, last reward: -2.4966, gradient norm: 41.21: 27%|██▋ | 171/625 [00:35<01:32, 4.89it/s]

reward: -4.1301, last reward: -2.4966, gradient norm: 41.21: 28%|██▊ | 172/625 [00:35<01:32, 4.88it/s]

reward: -4.0062, last reward: -2.8255, gradient norm: 4.798: 28%|██▊ | 172/625 [00:35<01:32, 4.88it/s]

reward: -4.0062, last reward: -2.8255, gradient norm: 4.798: 28%|██▊ | 173/625 [00:35<01:32, 4.86it/s]

reward: -4.1558, last reward: -3.7388, gradient norm: 214.8: 28%|██▊ | 173/625 [00:36<01:32, 4.86it/s]

reward: -4.1558, last reward: -3.7388, gradient norm: 214.8: 28%|██▊ | 174/625 [00:36<01:32, 4.87it/s]

reward: -4.2803, last reward: -3.7403, gradient norm: 15.82: 28%|██▊ | 174/625 [00:36<01:32, 4.87it/s]

reward: -4.2803, last reward: -3.7403, gradient norm: 15.82: 28%|██▊ | 175/625 [00:36<01:32, 4.87it/s]

reward: -4.4744, last reward: -2.6246, gradient norm: 8.711: 28%|██▊ | 175/625 [00:36<01:32, 4.87it/s]

reward: -4.4744, last reward: -2.6246, gradient norm: 8.711: 28%|██▊ | 176/625 [00:36<01:32, 4.87it/s]