CosineAnnealingLR

- class torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0.0, last_epoch=-1)

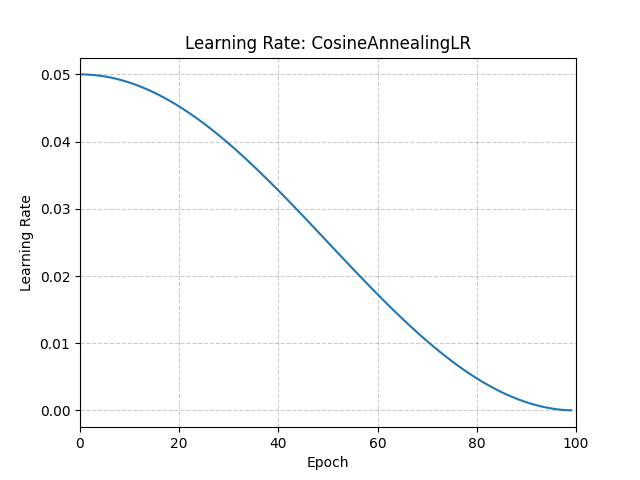

Set the learning rate of each parameter group using a cosine annealing schedule.

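The learning rate is updated recursively using:

$$\eta_{t+1} = \eta_{\min} + (\eta_t - \eta_{\min}) \cdot \frac{1 + \cos\left(\frac{(T_{cur}+1)\pi}{T_{max}}\right)}{1 + \cos\left(\frac{T_{cur}\pi}{T_{max}}\right)}$$

This implements a recursive approximation of the closed-form schedule proposed in SGDR: Stochastic Gradient Descent with Warm Restarts:

$$\eta_t = \eta_{\min} + \frac{1}{2}(\eta_{\max} - \eta_{\min})\left(1 + \cos\left(\frac{T_{cur}\pi}{T_{max}}\right)\right)$$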

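where:

- $\eta_t$ is the learning rate at step $t$
- $T_{cur}$ is the number of epochs since the last restart
- $T_{max}$ is the maximum number of epochs in a cycle
- $\eta_{\max}$ is the initial learning rate of the parameter group, and $\eta_{\min}$ is `eta_min`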
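The two formulas can be checked against each other numerically. The following is a minimal pure-Python sketch that does not use PyTorch. `closed_form_lr` and `recursive_lrs` are hypothetical helper names. The sketch assumes that the recursion starts from $\eta_0 = \eta_{\max}$ and that nothing else changes the learning rate between steps. Under those assumptions the two forms agree in exact arithmetic for $0 \le t \le T_{max}$, so the printed columns should match up to floating-point rounding.

```python
import math


def closed_form_lr(t, eta_max, eta_min, T_max):
    """Closed-form SGDR cosine schedule with T_cur = t (no restarts)."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t / T_max))


def recursive_lrs(eta_max, eta_min, T_max):
    """Apply the recursive update from eta_0 = eta_max up to step T_max."""
    lrs = [eta_max]
    for t in range(T_max):
        # Ratio of consecutive (1 + cos) factors. The denominator stays
        # positive for t < T_max, so no division by zero occurs here.
        ratio = (1 + math.cos(math.pi * (t + 1) / T_max)) / (
            1 + math.cos(math.pi * t / T_max)
        )
        lrs.append(eta_min + (lrs[-1] - eta_min) * ratio)
    return lrs


eta_max, eta_min, T_max = 0.1, 0.001, 10
recursive = recursive_lrs(eta_max, eta_min, T_max)
closed = [closed_form_lr(t, eta_max, eta_min, T_max) for t in range(T_max + 1)]
for t, (r, c) in enumerate(zip(recursive, closed)):
    print(f"t={t:2d}  recursive={r:.6f}  closed={c:.6f}")
```

The loop stops at $T_{max}$ because one more recursive step would divide by $1 + \cos(\pi) = 0$.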

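Note

Although SGDR includes periodic restarts, this implementation performs cosine annealing without restarts. As a result, $T_{cur} = t$, which increases monotonically with each call to step().

- Parameters

optimizer (Optimizer) – Wrapped optimizer.

T_max (int) – Maximum number of iterations.

eta_min (float) – Minimum learning rate. Default: 0.0.

last_epoch (int) – The index of the last epoch. Default: -1.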
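The point about restarts is easiest to see by evaluating the closed form past $T_{max}$. The following is an illustrative pure-Python sketch. `lr_without_restarts` follows the note ($T_{cur} = t$). `lr_with_restarts` is a hypothetical, simplified SGDR-style variant with a fixed cycle length: it resets $T_{cur}$ to 0 every `T_max` steps and ignores SGDR's growing cycle lengths.

```python
import math


def cosine_lr(t_cur, eta_max, eta_min, T_max):
    """SGDR closed form evaluated at a given T_cur."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t_cur / T_max))


def lr_without_restarts(t, eta_max, eta_min, T_max):
    # As in the note: T_cur = t and keeps increasing with every step().
    return cosine_lr(t, eta_max, eta_min, T_max)


def lr_with_restarts(t, eta_max, eta_min, T_max):
    # Hypothetical fixed-cycle restart: T_cur wraps to 0 every T_max steps,
    # so the learning rate jumps back to eta_max at each restart.
    return cosine_lr(t % T_max, eta_max, eta_min, T_max)


eta_max, eta_min, T_max = 0.1, 0.0, 10
for t in range(8, 13):
    print(
        f"t={t:2d}  no restarts={lr_without_restarts(t, eta_max, eta_min, T_max):.5f}"
        f"  with restarts={lr_with_restarts(t, eta_max, eta_min, T_max):.5f}"
    )
```

With $T_{cur} = t$, the cosine keeps running after $T_{max}$. The learning rate therefore climbs back gradually from $\eta_{\min}$ instead of jumping back to $\eta_{\max}$ as the restarting variant does.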

Example

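    >>> import torch
    >>> from torch.optim.lr_scheduler import CosineAnnealingLR
    >>> model = torch.nn.Linear(10, 1)
    >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    >>> num_epochs = 100
    >>> scheduler = CosineAnnealingLR(optimizer, T_max=num_epochs)
    >>> for epoch in range(num_epochs):
    ...     train(...)     # your per-epoch training code
    ...     validate(...)  # your validation code
    ...     scheduler.step()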

- load_state_dict(state_dict)

Load the scheduler's state.

- Parameters

state_dict (dict) – Scheduler state. Should be an object returned from a call to state_dict().
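The round trip that load_state_dict() supports can be sketched without PyTorch. `ToyCosineScheduler` is a hypothetical class, not torch code. The sketch assumes only that a scheduler's state is a plain dict of its attributes, such as the current step count. Restoring that dict is what lets a resumed run continue the schedule where it stopped instead of starting over at step 0.

```python
import math


class ToyCosineScheduler:
    """Hypothetical stand-in for a cosine scheduler, used only to show the
    state_dict()/load_state_dict() round trip; it is not torch code."""

    def __init__(self, base_lr, T_max, eta_min=0.0):
        self.base_lr = base_lr
        self.T_max = T_max
        self.eta_min = eta_min
        self.last_epoch = 0

    def get_lr(self):
        # Closed-form cosine annealing with T_cur = last_epoch.
        cos_term = 1 + math.cos(math.pi * self.last_epoch / self.T_max)
        return self.eta_min + 0.5 * (self.base_lr - self.eta_min) * cos_term

    def step(self):
        self.last_epoch += 1

    def state_dict(self):
        # Shallow copy of the attributes, so later steps don't alter it.
        return dict(self.__dict__)

    def load_state_dict(self, state_dict):
        # Overwrite the current attributes with the saved ones.
        self.__dict__.update(state_dict)


original = ToyCosineScheduler(base_lr=0.1, T_max=10)
for _ in range(4):
    original.step()
checkpoint = original.state_dict()

# A freshly built scheduler starts at step 0 until the saved state is loaded.
resumed = ToyCosineScheduler(base_lr=0.1, T_max=10)
resumed.load_state_dict(checkpoint)

original.step()
resumed.step()
print(original.get_lr(), resumed.get_lr())
```

With the real scheduler, the same pattern is typically `torch.save(scheduler.state_dict(), path)` when checkpointing. To resume, rebuild the optimizer and scheduler, then call `scheduler.load_state_dict(torch.load(path))`.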