CosineAnnealingWarmRestarts

- class torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult=1, eta_min=0.0, last_epoch=-1)

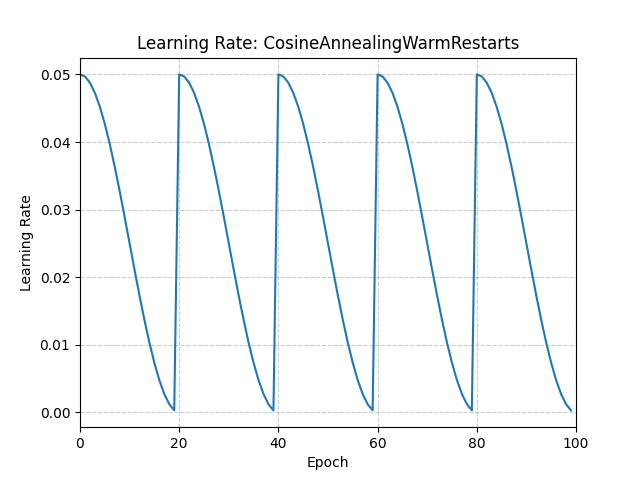

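Set the learning rate of each parameter group using a cosine annealing schedule:

$$\eta_t = \eta_{min} + \frac{1}{2}(\eta_{max} - \eta_{min})\left(1 + \cos\left(\frac{T_{cur}}{T_i}\pi\right)\right)$$

where $\eta_{max}$ is set to the initial learning rate, $T_{cur}$ is the number of epochs since the last restart, and $T_i$ is the number of epochs between two warm restarts in SGDR.

When $T_{cur} = T_i$, set $\eta_t = \eta_{min}$. When $T_{cur} = 0$ after a restart, set $\eta_t = \eta_{max}$.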
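The cosine annealing rule can be sketched in a few lines of plain Python. This is only an illustration of the formula, not the library's implementation; the function name `cosine_annealed_lr` and the sample values (a peak rate of 0.05 and a cycle of 20 epochs) are assumptions chosen for the example.

```python
import math

def cosine_annealed_lr(t_cur, t_i, eta_max, eta_min=0.0):
    """Learning rate t_cur epochs after a restart, for a cycle of t_i epochs."""
    cosine_term = 1 + math.cos(math.pi * t_cur / t_i)
    return eta_min + 0.5 * (eta_max - eta_min) * cosine_term

# Illustrative values only: eta_max=0.05, cycle length 20.
start = cosine_annealed_lr(0, 20, eta_max=0.05)    # right after a restart
middle = cosine_annealed_lr(10, 20, eta_max=0.05)  # halfway through the cycle
end = cosine_annealed_lr(20, 20, eta_max=0.05)     # T_cur equals T_i
```

Under these assumptions, `start` equals the peak rate, `middle` is about half of it, and `end` falls to `eta_min`, which matches the two boundary cases stated above.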

This schedule was proposed in SGDR: Stochastic Gradient Descent with Warm Restarts.

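- Parameters

  - optimizer (Optimizer) – Wrapped optimizer.
  - T_0 (int) – Number of iterations until the first restart.
  - T_mult (int, optional) – A factor by which T_i increases after a restart. Default: 1.
  - eta_min (float, optional) – Minimum learning rate. Default: 0.0.
  - last_epoch (int, optional) – The index of the last epoch. Default: -1.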

Example

    >>> optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
    >>> scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(
    ...     optimizer, T_0=20
    ... )
    >>> for epoch in range(100):
    ...     train(...)
    ...     validate(...)
    ...     scheduler.step()
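To see where the restarts fall in a run like this one, the following sketch maps an integer epoch to its position in the current cycle. It assumes that the first cycle lasts `T_0` epochs and that each later cycle is `T_mult` times longer than the previous one. The helper `locate_in_cycle` is a hypothetical name used for illustration and is not part of PyTorch.

```python
def locate_in_cycle(epoch, t_0, t_mult=1):
    """Return (t_cur, t_i) for an integer epoch by walking through the cycles."""
    t_i = t_0      # length of the current cycle
    t_cur = epoch  # epochs remaining to be placed in a cycle
    while t_cur >= t_i:
        t_cur -= t_i   # skip over one completed cycle
        t_i *= t_mult  # the next cycle is t_mult times longer
    return t_cur, t_i

# With T_0=20 and T_mult=1, list the epochs in 1..99 that start a new cycle.
restarts = [e for e in range(1, 100) if locate_in_cycle(e, 20)[0] == 0]
```

With `T_0=20` and the default `T_mult=1`, the list `restarts` contains epochs 20, 40, 60, and 80, so a 100-epoch run goes through five cosine cycles.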

- load_state_dict(state_dict)

Load the scheduler's state.

- Parameters

state_dict (dict) – Scheduler state. Should be an object returned from a call to state_dict().

- step(epoch=None)

step() can be called after every batch update.

Example

    >>> scheduler = CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)
    >>> iters = len(dataloader)
    >>> for epoch in range(20):
    ...     for i, sample in enumerate(dataloader):
    ...         inputs, labels = sample['inputs'], sample['labels']
    ...         optimizer.zero_grad()
    ...         outputs = net(inputs)
    ...         loss = criterion(outputs, labels)
    ...         loss.backward()
    ...         optimizer.step()
    ...         scheduler.step(epoch + i / iters)
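Passing `epoch + i / iters` gives the scheduler a fractional epoch, so the rate moves a little with every batch instead of once per epoch. The sketch below reproduces that arithmetic in plain Python under simplifying assumptions: `T_mult=1`, a cycle of 2 epochs, and 4 batches per epoch. `lr_at` is a hypothetical helper for illustration, not a PyTorch function.

```python
import math

def lr_at(epoch, t_0, eta_max, eta_min=0.0):
    """Cosine-annealed rate for a possibly fractional epoch, assuming T_mult=1."""
    t_cur = epoch % t_0  # position within the current cycle
    cosine_term = 1 + math.cos(math.pi * t_cur / t_0)
    return eta_min + 0.5 * (eta_max - eta_min) * cosine_term

iters = 4  # hypothetical number of batches per epoch
schedule = [
    lr_at(epoch + i / iters, t_0=2, eta_max=0.1)
    for epoch in range(2)
    for i in range(iters)
]
```

Here `schedule` holds eight values that decrease smoothly from the peak rate across the two epochs of the cycle. Evaluating `lr_at(2.0, ...)` returns the peak rate again, which corresponds to the warm restart.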

This function can be called in an interleaved way, mixing calls with and without an explicit epoch.

Example

    >>> scheduler = CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)
    >>> for epoch in range(20):
    ...     scheduler.step()
    >>> scheduler.step(26)
    >>> scheduler.step()  # scheduler.step(27), instead of scheduler.step(20)
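The interleaving behaviour comes down to how the epoch counter advances. The toy class below models only that bookkeeping. It is a simplified sketch, not the scheduler's actual code, and it assumes that the counter sits at 0 right after construction and that a call without an argument continues from the last recorded epoch. `EpochCounter` is a hypothetical name.

```python
import math

class EpochCounter:
    """Toy model of the epoch bookkeeping only; it does not touch an optimizer."""

    def __init__(self):
        self.last_epoch = 0  # assumed state right after construction

    def step(self, epoch=None):
        if epoch is None:
            epoch = self.last_epoch + 1  # continue from the last recorded epoch
        self.last_epoch = math.floor(epoch)
        return self.last_epoch

counter = EpochCounter()
for _ in range(20):
    counter.step()
after_loop = counter.last_epoch  # counter after 20 plain steps

counter.step(26)             # jump to an explicit epoch
after_jump = counter.step()  # continues from 26, not from the loop position
```

In this model the counter reads 20 after the loop and 27 after the final call, which is the behaviour the comment in the example describes.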