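Remote Reference Protocol#

Created On: Nov 20, 2019 | Last Updated On: Apr 27, 2025

This note describes the design details of the Remote Reference protocol and walks through the message flows in different scenarios. Make sure you are familiar with the Distributed RPC Framework before proceeding.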

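Background#

RRef stands for Remote REFerence. It is a reference to an object located on the local worker or a remote worker, and it transparently handles reference counting under the hood. Conceptually, it can be considered a distributed shared pointer. Applications create an RRef by calling remote(). Each RRef is owned by the callee worker of the remote() call (the owner) and can be used by multiple users. The owner stores the real data and keeps track of the global reference count. Every RRef is uniquely identified by a globally unique RRefId, which is assigned at creation time on the caller of the remote() call.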

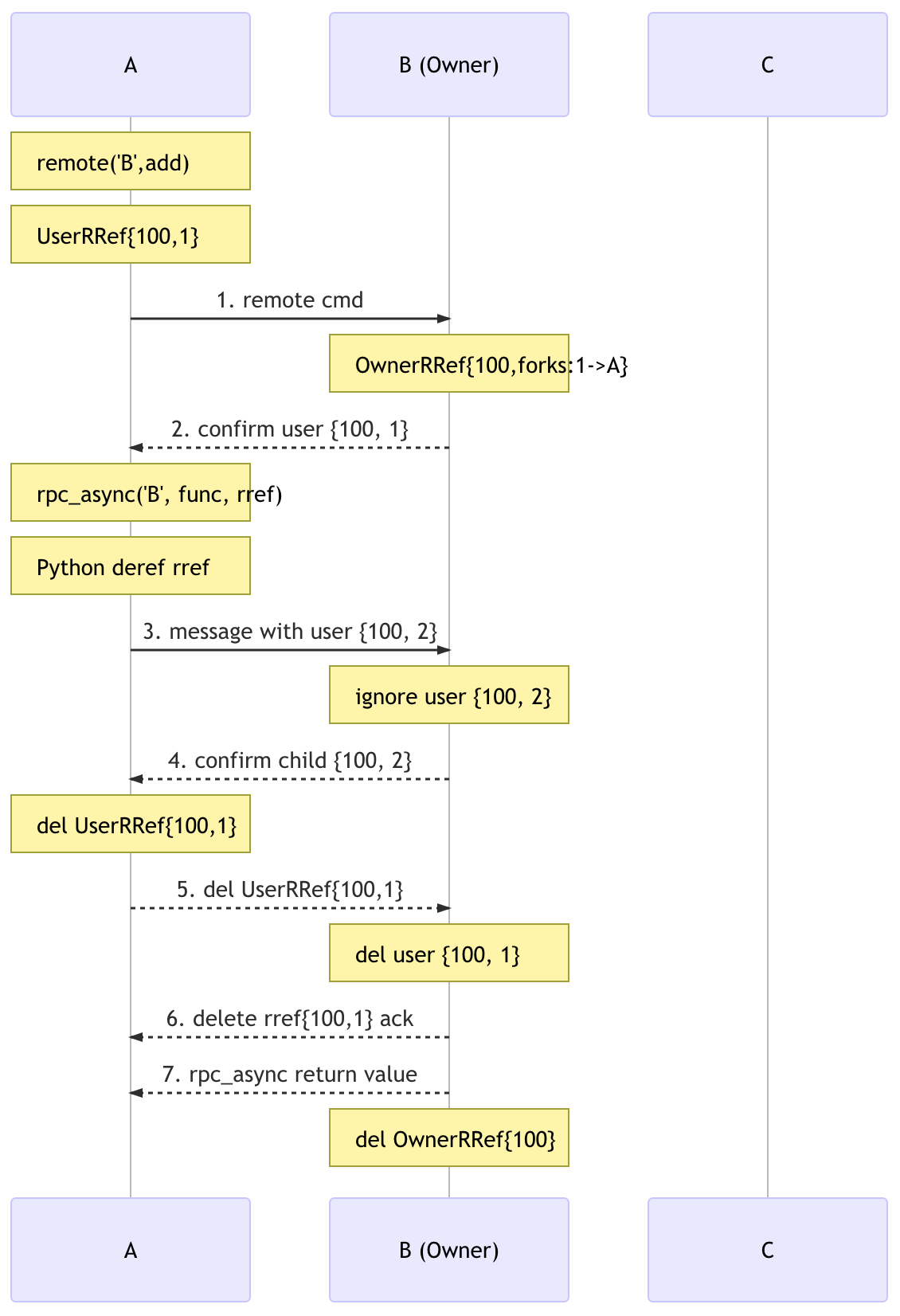

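On the owner worker there is exactly one OwnerRRef instance, which contains the real data. On user workers there can be as many UserRRef instances as necessary, and a UserRRef does not hold the data. Every use on the owner retrieves the unique OwnerRRef instance through the globally unique RRefId. A UserRRef is created whenever an RRef is used as an argument or return value of an rpc_sync(), rpc_async(), or remote() call, and the owner is notified so that it can update the reference count. An OwnerRRef and its data are deleted when there are no UserRRef instances anywhere and there are no references to the OwnerRRef on the owner either.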
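The deletion rule in the paragraph above can be sketched as a small, single-process toy model. This is only an illustration: `ToyOwnerRRef`, `add_user`, `del_user`, and `drop_local_ref` are hypothetical names invented for this sketch and are not part of the RPC framework.

```python
from dataclasses import dataclass, field


@dataclass
class ToyOwnerRRef:
    """Hypothetical owner-side record; not the real OwnerRRef class."""
    rref_id: str
    value: object
    user_forks: set = field(default_factory=set)  # UserRRefs the owner knows about
    local_refs: int = 1                           # references held by code on the owner
    deleted: bool = False

    def add_user(self, fork_id: str) -> None:
        self.user_forks.add(fork_id)

    def del_user(self, fork_id: str) -> None:
        self.user_forks.discard(fork_id)
        self._maybe_delete()

    def drop_local_ref(self) -> None:
        self.local_refs -= 1
        self._maybe_delete()

    def _maybe_delete(self) -> None:
        # Delete only when no UserRRef is known and no local reference remains.
        if not self.user_forks and self.local_refs == 0:
            self.value = None
            self.deleted = True


owner_rref = ToyOwnerRRef("rref-0", value=[1, 2, 3])
owner_rref.add_user("fork-1")
owner_rref.add_user("fork-2")
owner_rref.drop_local_ref()        # owner-side code lets go; two users remain
owner_rref.del_user("fork-1")
still_alive = not owner_rref.deleted
owner_rref.del_user("fork-2")      # last user gone, so the data is released
```

In this sketch the data survives until both conditions hold at once, which mirrors the rule stated above; the real protocol additionally has to cope with delayed and reordered messages.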

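Assumptions#

The RRef protocol is designed with the following assumptions.

- Transient network failures: The RRef design handles transient network failures by retrying messages. It cannot handle node crashes or permanent network partitions. When those incidents occur, the application should take down all workers, revert to the previous checkpoint, and resume training.

- Non-idempotent UDFs: We assume that the user functions (UDFs) passed to rpc_sync(), rpc_async(), or remote() are not idempotent and therefore cannot be retried. Internal RRef control messages, however, are idempotent and are retried when a message fails.

- Out-of-order message delivery: We do not assume any message delivery order between any pair of nodes, because both the sender and the receiver use multiple threads. There is no guarantee on the order in which messages are processed.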
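To illustrate why retrying is safe for control messages but not for UDFs, here is a minimal sketch. `ToyOwner`, `handle_add_fork`, and `send_with_retry` are hypothetical names, and the "network" is simulated by pretending that the first few replies are lost, so the sender cannot tell whether the message was processed.

```python
class ToyOwner:
    """Hypothetical owner that records which forks it knows about."""

    def __init__(self):
        self.known_forks = set()

    def handle_add_fork(self, fork_id):
        # Idempotent: processing the same message twice leaves the same state.
        self.known_forks.add(fork_id)
        return "ack"


def send_with_retry(handler, payload, lost_replies, max_attempts=5):
    """Deliver payload until a reply gets through; the first replies are lost."""
    for attempt in range(1, max_attempts + 1):
        reply = handler(payload)      # the receiver does process the message
        if attempt > lost_replies:    # this reply reaches the sender
            return reply, attempt
        # Reply lost in transit: the sender retries the same message.
    raise TimeoutError("no reply after retries")


owner = ToyOwner()
reply, attempts = send_with_retry(owner.handle_add_fork, "fork-1", lost_replies=2)

# A non-idempotent "UDF" for contrast: each retry changes the result.
counter = {"n": 0}

def increment(_):
    counter["n"] += 1
    return counter["n"]

udf_reply, _ = send_with_retry(increment, None, lost_replies=2)
```

After three deliveries the owner still holds a single entry for `fork-1`, while the counter has been incremented three times instead of once. That difference is the reason the assumption treats the two kinds of messages differently.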

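RRef Lifetime#

The goal of the protocol is to delete an OwnerRRef at an appropriate time. The right time to delete an OwnerRRef is when there are no living UserRRef instances and user code is not holding any reference to the OwnerRRef either. The tricky part is determining whether any living UserRRef instances exist.

Design Reasoning#

A user can get a UserRRef in three situations: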

1. Receiving a UserRRef from the owner.
2. Receiving a UserRRef from another user.
3. Creating a new UserRRef owned by another worker.

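Case 1 is the simplest: the owner passes its RRef to a user by calling rpc_sync(), rpc_async(), or remote() with the RRef as an argument. A new UserRRef is then created on the user. Because the owner is the caller, it can easily update its local reference count on the OwnerRRef.

The only requirement is that any UserRRef must notify the owner upon destruction. Hence, we need the first guarantee:

G1. The owner will be notified when any UserRRef is deleted.

Because messages may be delayed or arrive out of order, we need one more guarantee to make sure that the delete message is not processed too soon. If A sends B a message that involves an RRef, we call the RRef on A the parent RRef and the RRef on B the child RRef.

G2. The parent RRef will NOT be deleted until the child RRef is confirmed by the owner.

In cases 2 and 3, the owner may have only partial knowledge of the RRef fork graph, or none at all. For example, an RRef could be constructed on a user, and before the owner receives any RPC call, the creator user might have already shared the RRef with other users, and those users could share it further. One invariant is that the fork graph of any RRef is always a tree, because forking an RRef always creates a new UserRRef instance on the callee (unless the callee is the owner), and hence every RRef has a single parent.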
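The tree invariant can be made concrete with a small bookkeeping sketch. `ForkTree` and its methods are hypothetical and exist only for illustration; the toy also ignores the special case where the callee is the owner. Each fork registers a brand-new child under exactly one parent, so walking parent links always reaches the owner.

```python
class ForkTree:
    """Hypothetical record of who forked an RRef to whom."""

    def __init__(self, owner="owner"):
        self.parent = {owner: None}   # node -> its single parent

    def fork(self, parent_id, child_id):
        if parent_id not in self.parent:
            raise KeyError(f"unknown parent {parent_id!r}")
        if child_id in self.parent:
            # A fork always creates a new UserRRef, so ids are never reused.
            raise ValueError(f"{child_id!r} already has a parent")
        self.parent[child_id] = parent_id

    def ancestors(self, node):
        chain = []
        node = self.parent[node]
        while node is not None:
            chain.append(node)
            node = self.parent[node]
        return chain


tree = ForkTree()
tree.fork("owner", "A")
tree.fork("A", "Y")
tree.fork("Y", "Z")
z_ancestors = tree.ancestors("Z")   # ["Y", "A", "owner"]
```

Because a child id can be registered only once, no node can ever acquire a second parent, which is what keeps the graph a tree in this model.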

The owner's view of any UserRRef in the tree goes through three stages:

1) unknown -> 2) known -> 3) deleted.

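The owner's view of the entire tree keeps changing. The owner deletes its OwnerRRef instance when it believes there are no living UserRRef instances. In other words, when the OwnerRRef is deleted, every UserRRef instance is either actually deleted or unknown to the owner. The dangerous case is when some forks are unknown while the others are deleted.

G2 trivially guarantees that no parent UserRRef can be deleted before the owner knows all of its child UserRRef instances. However, a child UserRRef may be deleted before the owner knows about its parent UserRRef.

Consider the following example, where the OwnerRRef forks to A, then A forks to Y, and Y forks to Z: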

OwnerRRef -> A -> Y -> Z

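Suppose all of Z's messages, including the delete message, are processed by the owner before any of Y's messages. The owner then learns of Z's deletion before it knows that Y exists. Nevertheless, this does not cause any problem, because at least one of Y's ancestors (A) is still alive and prevents the owner from deleting the OwnerRRef. More specifically, if the owner does not know Y, A cannot be deleted due to G2, and the owner knows A because the owner is A's parent.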
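The argument above can be replayed step by step in a toy simulation. Everything here is a simplified assumption made for illustration: `OwnerView`, `confirm`, `delete`, and `may_delete` are hypothetical names, and each call stands for the owner processing one message.

```python
# Fork chain from the example: OwnerRRef -> A -> Y -> Z
children = {"A": ["Y"], "Y": ["Z"], "Z": []}


class OwnerView:
    """Hypothetical owner-side view of the UserRRef forks."""

    def __init__(self):
        self.confirmed = {"A"}   # the owner forked to A itself, so A is known
        self.deleted = set()
        self.owner_rref_deleted = False

    def alive(self):
        return self.confirmed - self.deleted

    def confirm(self, fork):
        self.confirmed.add(fork)

    def delete(self, fork):
        self.deleted.add(fork)
        if not self.alive():
            self.owner_rref_deleted = True


def may_delete(fork, owner):
    # G2: a parent may be deleted only after the owner has confirmed its children.
    return all(child in owner.confirmed for child in children[fork])


owner = OwnerView()
owner.confirm("Z")                       # Z's messages overtake Y's
owner.delete("Z")
after_z = owner.owner_rref_deleted       # False: A is still known and alive
a_blocked = not may_delete("A", owner)   # True: Y is not confirmed yet

owner.confirm("Y")                       # Y's confirmation finally arrives
owner.delete("A")                        # now allowed by G2
after_a = owner.owner_rref_deleted       # False: Y is alive
owner.delete("Y")                        # allowed, since Z was confirmed earlier
after_y = owner.owner_rref_deleted       # True: no known fork is alive
```

In this model the OwnerRRef is released only at the last step, even though Z's deletion was processed before the owner had heard of Y.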

Things get a little trickier if the RRef is created on a user:

OwnerRRef
    ^
    |
    A -> Y -> Z

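If Z calls to_here() on the UserRRef, the owner knows at least A by the time Z is deleted, because otherwise to_here() would not have finished. If Z does not call to_here(), the owner may receive all of Z's messages before any message from A or Y. In that case, the real data of the OwnerRRef has not been created yet, so there is nothing to delete. The situation is the same as if Z did not exist at all, so it is still OK.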

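Implementation#

G1 is implemented by sending a delete message from the UserRRef destructor. To provide G2, the parent UserRRef is put into a context whenever it is forked, indexed by the new ForkId. The parent UserRRef is removed from the context only when it receives an acknowledgement message (ACK) from the child, and the child sends the ACK only after it has been confirmed by the owner.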
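The following is a minimal sketch of that bookkeeping, not the actual implementation. `ToyUserRRef`, `pending_forks`, `release`, and `owner_confirms_child` are hypothetical names, an explicit `release()` call stands in for the destructor, and a plain list stands in for messages sent to the owner.

```python
class ToyUserRRef:
    """Hypothetical parent-side UserRRef with a table of unacknowledged forks."""

    def __init__(self, fork_id, outbox):
        self.fork_id = fork_id
        self.outbox = outbox          # messages destined for the owner
        self.pending_forks = set()    # child ForkIds awaiting an ACK (the "context")
        self.released = False         # user code no longer holds this RRef
        self.delete_sent = False

    def fork(self, child_fork_id):
        # Forking pins the parent until the child acknowledges.
        self.pending_forks.add(child_fork_id)

    def on_child_ack(self, child_fork_id):
        self.pending_forks.discard(child_fork_id)
        self._maybe_delete()

    def release(self):
        self.released = True
        self._maybe_delete()

    def _maybe_delete(self):
        # G1: notify the owner on deletion. G2: only once no fork is pending.
        if self.released and not self.pending_forks and not self.delete_sent:
            self.delete_sent = True
            self.outbox.append(("delete", self.fork_id))


def owner_confirms_child(owner_known, parent, child_fork_id):
    owner_known.add(child_fork_id)      # the owner learns about the child first
    parent.on_child_ack(child_fork_id)  # only then does the child ACK its parent


outbox, owner_known = [], set()
a = ToyUserRRef("A", outbox)
a.fork("Y")
a.release()                             # user code drops A while Y is unconfirmed
outbox_before_ack = list(outbox)        # still empty: A is pinned by the context
owner_confirms_child(owner_known, a, "Y")
```

In this sketch the delete message for A leaves only after the owner has recorded Y, which is the ordering G2 asks for.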

Protocol Scenarios#

Let's now discuss how the design above translates into the protocol in four scenarios.